The title describes my question.

Hi @vad13irt

So, to generate the embeddings, we first took all of the images that had corresponding labels and passed them through a model (for example, resnet18). We then extracted the images' features from a certain layer of that model. This blog is also quite good if you want to understand the whole process.

Let me know if you have any questions. Enjoy Blitz!

Shubhamai

What is that certain layer?

?

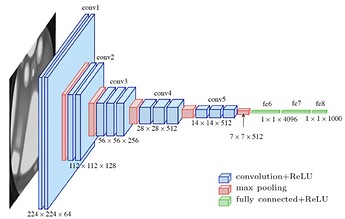

The exact layer depends on the model you are using and which layer you want to extract the features from. For transfer learning, it is commonly the layer just before the fully connected layer. For example, in vgg16 it's the last max-pooling layer. The same goes for resnet18: the features are extracted from the layer before the fully connected layer.

Here’s another blog you can read if you want to learn more about vgg16 feature extraction. I hope this helps!

Shubhamai