The Global Chess Challenge 2025 has come to a close. Over the course of the challenge, more than 400 participants across 58 teams took part, collectively producing 3,174 submissions and steadily improving performance under strict evaluation constraints.

Challenge Summary & Round 2 Highlights

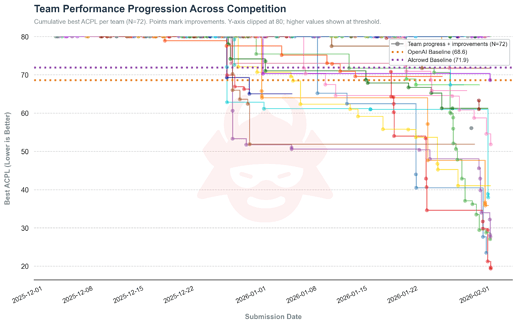

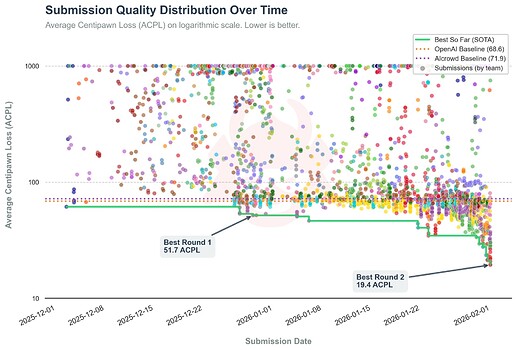

Submission quality evolved throughout the competition. Early entries focused on reliability and output correctness, while later submissions became more stable, consistent, and increasingly competitive.

Performance improved substantially over the competition window. The best ACPL (average centipawn loss, where lower is better) dropped from about 70.37 (0% win rate) at the start of Round 1 to 19.4 (97% win rate) by the end of Round 2, and 18 teams surpassed the baseline models.

Submission trends showed clear progression over time. Early entries included formatting or legality failures, mid-competition submissions clustered near baseline performance (72–200 ACPL), and later entries increasingly moved into the competitive (50–72 ACPL) and elite (<30 ACPL) tiers.
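For readers new to the metric, ACPL is typically computed by comparing, for each of an agent's moves, the engine evaluation of the best move with the evaluation of the move actually played, then averaging the losses. The sketch below is a minimal illustration under stated assumptions: evaluations are already in centipawns from the mover's perspective (with mate scores clipped), and the `acpl` helper and sample numbers are hypothetical. It is not the challenge's evaluation code.

```python
from typing import List, Tuple


def centipawn_loss(best_eval_cp: int, played_eval_cp: int) -> int:
    """Loss for a single move; both evals are from the mover's perspective, in centipawns."""
    # If the played move evaluates at least as well as the engine's best move,
    # the loss is clamped to 0 rather than going negative.
    return max(0, best_eval_cp - played_eval_cp)


def acpl(moves: List[Tuple[int, int]]) -> float:
    """Average centipawn loss over (best_eval_cp, played_eval_cp) pairs (hypothetical input format)."""
    if not moves:
        raise ValueError("need at least one move")
    total = sum(centipawn_loss(best, played) for best, played in moves)
    return total / len(moves)


# Hypothetical evaluations for four moves: per-move losses are 0, 30, 2 and 80.
example = [(35, 35), (20, -10), (50, 48), (-15, -95)]
print(acpl(example))  # 28.0
```

Averaging per move makes a single large blunder weigh heavily, which is one reason a low ACPL tends to go together with a high win rate against the same opponents.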

On the Round 2 evaluation suite, the top submission achieved 19.4 ACPL and a 97% win rate. On the same suite, the Round 2 baselines scored:

- Organizer baseline (4B SFT): 71.9 ACPL (0% win rate)

- OpenAI GPT-5.2-low baseline: 68.6 ACPL (1% win rate)

The top submissions substantially outperformed both reference baselines.

Final Tournament

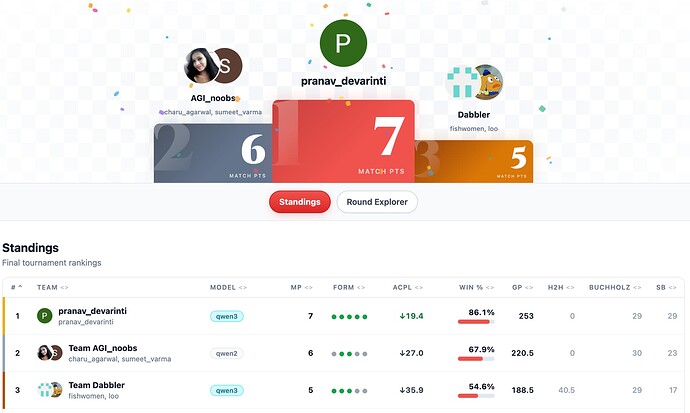

To determine final rankings, qualifying agents competed in a Swiss-system head-to-head tournament in which game outcomes were aggregated into match points. Details on the structure of the Swiss-system tournament are available here.
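In a Swiss-system tournament, every entrant plays each round, and each new round pairs players with similar scores who have not met before. The sketch below shows a generic greedy version of that idea. The scoring (1 point per win, 0.5 per draw, 0 per loss) and the helpers `record_result` and `pair_round` are assumptions for illustration only. The organizers' actual rules, including how game outcomes roll up into match points, are described in the linked tournament details.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical scoring, not necessarily the challenge's rules.
RESULT_POINTS = {"win": 1.0, "draw": 0.5, "loss": 0.0}
OPPOSITE = {"win": "loss", "draw": "draw", "loss": "win"}


def record_result(points: Dict[str, float], played: Set[frozenset],
                  a: str, b: str, result_for_a: str) -> None:
    """Add both players' points for one game and remember that they have met."""
    points[a] += RESULT_POINTS[result_for_a]
    points[b] += RESULT_POINTS[OPPOSITE[result_for_a]]
    played.add(frozenset((a, b)))


def pair_round(points: Dict[str, float],
               played: Set[frozenset]) -> Tuple[List[Tuple[str, str]], Optional[str]]:
    """Greedy Swiss pairing: rank by points, pair each top player with the best-ranked unplayed opponent."""
    # Highest score first; ties broken by name so the result is deterministic.
    unpaired = sorted(points, key=lambda p: (-points[p], p))
    pairs: List[Tuple[str, str]] = []
    while len(unpaired) > 1:
        top = unpaired.pop(0)
        # Prefer an opponent not met before; fall back to a rematch if none is left.
        opponent = next((o for o in unpaired if frozenset((top, o)) not in played), unpaired[0])
        unpaired.remove(opponent)
        pairs.append((top, opponent))
    # With an odd number of players, the lowest-ranked leftover sits out (a bye).
    bye = unpaired[0] if unpaired else None
    return pairs, bye


points = {"A": 0.0, "B": 0.0, "C": 0.0, "D": 0.0}
played: Set[frozenset] = set()
round1, _ = pair_round(points, played)        # [("A", "B"), ("C", "D")]
record_result(points, played, "A", "B", "win")
record_result(points, played, "C", "D", "draw")
round2, bye = pair_round(points, played)      # [("A", "C"), ("D", "B")], no bye
```

Production Swiss pairers add tie-breaks, color balancing, and backtracking when the greedy choice leaves only rematches; this sketch only shows the core "pair by score, avoid repeats" step.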

The final standings from this tournament determine the winners of the challenge.

Winners

The winners of this iteration of the challenge based on the final Swiss-system tournament standings are:

| Rank | Team | Match Points | ACPL ↓ | Estimated Elo | Evaluation Win Rate |
|---|---|---|---|---|---|
| 1 | @pranav_devarinti | 7 | 19.4 | 2063 | 86.1% |
| 2 | AGI_noobs (@sumeet_varma, @charu_agarwal) | 6 | 27.0 | 1969 | 67.9% |
| 3 | Dabbler (@loo, @fishwomen) | 5 | 35.9 | 1797 | 54.6% |

You can find the final standings here.

Per-round results, per-game records, and PGNs are available here.

Thank you to everyone who participated and contributed submissions throughout the challenge. We appreciate the time, experimentation, and persistence that went into improving agents over the course of the competition.

Next Steps

We will be reaching out to the winning teams shortly with details regarding prize distribution. We will also contact several top-performing teams to participate in a short interview series highlighting their approaches and key lessons from the challenge, and we will share the takeaways with the community. Stay tuned!