OK, so after a bit of a struggle I’ve just managed to successfully submit a first model that goes a bit beyond the getting started notebooks. For the benefit of those who follow, I thought I’d share some observations, tips and tricks.

# 1 Start with Colab

I’m new to AIcrowd but experienced in Kaggle. This competition reminds me a bit of the code-based Kaggle competitions which, if you are a beginner to data science, can seem confusing and daunting to get started with.

If that’s how you feel then I’d suggest you start with the Colab notebooks. You can make your first submission by just running and submitting the code in the notebook, with no changes other than adding your API key as per the instructions at the beginning of the notebook.

From there you can make a few simple changes to the fit_model routine to gain confidence. My mistake was to immediately jump in with multiple complex changes and data manipulation. No wonder I then kept tripping over my own coding errors for the next 8 hours!

# 2 If you start with Python don’t give up after the first submission!

[EDIT: This issue has now been fixed. Thanks, admins!]

My first preference is R… so my first submission was essentially the R getting started Colab notebook. But as more people joined and made submissions, I couldn’t understand why their RMSE was in the 1000s compared to my RMSE of around 500.

When I looked at the Python getting started notebook the answer became clear. The R and Python getting started notebooks are not consistent (which I think is a bit of a shame, and an oversight that it would be good for the organisers to fix). Basically the R notebook submits the observed mean from the training data (something like 114 per policy), whereas the Python notebook submits a prediction of 1000 per policy.
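To see why a constant prediction of 1000 scores so much worse than the mean, here is a small sketch in R. The claim amounts are made up for illustration (they are not competition data), and `rmse` is just a helper defined here. The point is that for any constant prediction c, the squared RMSE equals the squared RMSE of the mean prediction plus (mean - c)^2.

```r
# Helper: root mean squared error between actual and predicted values.
rmse <- function(actual, predicted) sqrt(mean((actual - predicted)^2))

# Made-up claim amounts: mostly zero, with occasional large claims.
# These are NOT competition data, just a skewed toy sample.
set.seed(1)
n <- 10000
claims <- ifelse(runif(n) < 0.1, rexp(n, rate = 1 / 1140), 0)

# R notebook style baseline: predict the observed mean for every policy.
rmse_mean <- rmse(claims, mean(claims))

# Python notebook style baseline: predict a flat 1000 for every policy.
rmse_1000 <- rmse(claims, 1000)

# For any constant c: rmse(c)^2 == rmse(mean)^2 + (mean - c)^2
gap_squared <- (mean(claims) - 1000)^2
print(c(rmse_mean = rmse_mean, rmse_1000 = rmse_1000))
print(all.equal(rmse_1000^2, rmse_mean^2 + gap_squared))
```

Plugging in the rough figures from this post (RMSE of about 500 for the mean, mean of about 114) gives sqrt(500^2 + 886^2), which is roughly 1017. That is consistent with the other submissions being in the 1000s, assuming the leaderboard data looks similar to the training data.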

# 3 Using additional libraries in R colab

I struggled to correctly load additional libraries. In the end I worked out a solution by looking at the zip code submission method and the Python Colab notebook.

So, for R users wishing to use additional libraries like data.table and xgboost: as per the instructions, add install.packages("data.table") to the install_packages function. What is unclear is where to add the calls that load the libraries.

I’ve found that I had to add them at the beginning of the fit_model function. For good measure I also added them to predict_expected_claim and preprocess_X_data, which I suspect is unnecessary but does no harm. I’ll come back and update when I confirm.

[EDIT: I’ve tried putting the library calls in the fit_model function only, but that caused submission errors. So until we get guidance from admins I’m sticking with adding them to all the key functions as above.]
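As a rough sketch of the layout described above: the four function names are the ones from the notebook, but the argument names (`x_raw`, `y_raw`, `model`) and the placeholder model body are my assumptions for illustration, so check them against the actual notebook template before copying anything.

```r
# Sketch only. Function names come from the notebook; argument names and
# the toy "model" below are assumptions made for illustration.

install_packages <- function() {
  # Install the extra packages needed in the evaluation environment.
  install.packages("data.table")
  install.packages("xgboost")
}

preprocess_X_data <- function(x_raw) {
  library(data.table)  # loaded here as well, for good measure
  x <- as.data.table(x_raw)
  # Any feature engineering would go here.
  x
}

fit_model <- function(x_raw, y_raw) {
  # Load the libraries at the very start of the function body.
  library(data.table)
  library(xgboost)
  x <- preprocess_X_data(x_raw)
  # Placeholder model: just the mean claim. Swap in a real fit here.
  list(mean_claim = mean(y_raw))
}

predict_expected_claim <- function(model, x_raw) {
  library(data.table)
  library(xgboost)
  x <- preprocess_X_data(x_raw)
  # One prediction per row of the input data.
  rep(model$mean_claim, nrow(x))
}
```

Calling library() more than once is harmless in R, since a package that is already attached is simply left as it is. That is why repeating the calls in every key function costs nothing.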

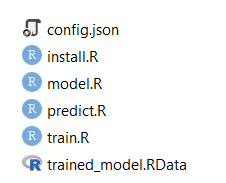

# 4 Using zip file submission approach

I’ve not succeeded with this approach yet. This would be my preferred method and will be my focus now. Again I’ll come back and update when I get it working.

Good luck, everyone!