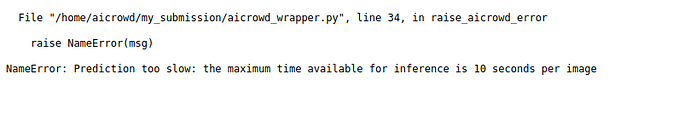

Recently I got this error when submitting to the LB. Before this, the same model (only the weights changed) submitted successfully and was scored on the LB. We need to confirm whether the maximum time available for inference is really 10 seconds per image.

What? Is this a new rule change?

NameError: Prediction too slow: the maximum time available for inference is 10 seconds per image

I don’t understand how the 10 s is calculated. Processing an image with SegFormer takes 1 to 5 seconds, I think, even on a T4.
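One way to check the 1 to 5 seconds estimate is to time every image locally and look at the slowest one rather than the average. Below is a minimal sketch using only the standard library. `predict` is a hypothetical stand-in for whatever function runs the model on one image, and `time_per_image` is just an illustrative helper name. How the scorer actually measures the 10 s is not known here; it may also count model loading or image I/O, which this sketch does not cover.

```python
import time
from statistics import mean

LIMIT_SECONDS = 10.0  # limit quoted in the error message


def time_per_image(predict, images, limit=LIMIT_SECONDS):
    """Call predict(image) on each image and time it.

    Returns (durations, slow), where durations is the list of wall-clock
    seconds per image and slow is the list of indices that exceeded limit.
    """
    durations = []
    for image in images:
        start = time.perf_counter()
        predict(image)  # hypothetical: replace with the real inference call
        durations.append(time.perf_counter() - start)
    slow = [i for i, d in enumerate(durations) if d > limit]
    return durations, slow


if __name__ == "__main__":
    # Dummy predictor so the sketch runs without a model or GPU.
    def fake_predict(image):
        time.sleep(0.01)
        return image

    durations, slow = time_per_image(fake_predict, range(5))
    print(f"mean {mean(durations):.3f}s, max {max(durations):.3f}s, over limit: {slow}")
```

If the model runs on a GPU with PyTorch, the call may return before the GPU has finished, so it is probably worth calling `torch.cuda.synchronize()` inside `predict` before it returns; otherwise the measured times can look too short. The first image is also often slower than the rest because of warm-up, so a single slow image could be enough to trigger the error.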