So far, the biggest difficulty for competitors seems to be getting submissions to work.

Even when an agent validates well locally, or even on GCP, many people still run into difficulties after submitting.

This has nothing to do with reinforcement learning, but I feel that a competition where only those who know how to get a submission through can compete is not a good competition.

Here are some findings from tests I ran over the past few days, which used up my submission quota.

The Docker base image currently used by AIcrowd:

nvidia/cuda:9.0-cudnn7-runtime-ubuntu16.04

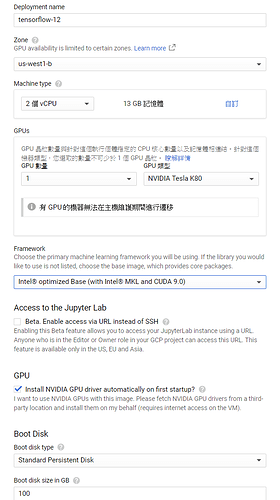

A similar environment for testing can be set up in GCP Compute, as in the screenshot.

The interface in my screenshot is in Chinese, but that does not matter. The thing to pay attention to is choosing CUDA 9.0 instead of the default CUDA 10. Inside Docker this does not affect the results, but some contestants may need to export the configuration of their original environment outside Docker.

(GPU use has to be officially approved first.)

This will be closer to AIcrowd's execution environment. When evaluating, the command

docker run \
    --env OTC_EVALUATION_ENABLED=true \
    --network=host \
    -it obstacle_tower_challenge:latest ./run.sh

should also be given the flag

--runtime=nvidia

This lets the container use the host's NVIDIA driver, so CUDA is picked up automatically inside Docker.
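Putting the flag together with the evaluation command from this post, the full invocation might look like the sketch below. It only prints the command by default; the `RUN=1` switch is my own addition for illustration, and the flag requires the NVIDIA container runtime to be installed on the host.

```shell
#!/bin/sh
# Assemble the evaluation command with the NVIDIA runtime flag added.
# --runtime=nvidia exposes the host NVIDIA driver inside the container.
set -- docker run \
    --runtime=nvidia \
    --env OTC_EVALUATION_ENABLED=true \
    --network=host \
    -it obstacle_tower_challenge:latest ./run.sh

# Print the command for review.
printf '%s ' "$@"
echo

# RUN is a hypothetical switch (not part of the challenge tooling):
# set RUN=1 to actually start the container.
if [ "${RUN:-0}" = "1" ]; then
    "$@"
fi
```

Printing first makes it easy to confirm that the local run matches what you intend before spending time on a full evaluation.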

In addition, GCP comes with the NVIDIA Docker runtime pre-configured for you. If you are setting up a non-GCP environment, you should refer to this.

Another special reminder concerns line endings:

The newline sequence in DOS files is ^M^J (CR LF).

The newline sequence in Unix files is ^J (LF).

So in some cases you will hit bash errors because a script or configuration file was saved in a Windows environment. Use Vim or a similar editor to convert the line endings to Unix format (in Vim, `:set fileformat=unix` and then save).
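If you would rather check and fix this from the command line, a minimal sketch follows. The file names are made up for the demonstration; in practice you would point it at your own `run.sh`. It counts lines containing a carriage return, strips them with `tr`, and counts again.

```shell
#!/bin/sh
# Hypothetical demo files in a temporary directory.
workdir=$(mktemp -d)

# Create a small script with DOS (CR LF) line endings.
printf '#!/bin/sh\r\necho hello\r\n' > "$workdir/run_dos.sh"

# A literal carriage return, used as the grep pattern.
cr=$(printf '\r')

# Count lines that contain a carriage return before conversion.
crlf_before=$(grep -c "$cr" "$workdir/run_dos.sh")

# Strip every carriage return, leaving Unix (LF) line endings.
tr -d '\r' < "$workdir/run_dos.sh" > "$workdir/run_unix.sh"

# Count again; grep exits non-zero on zero matches, hence "|| true".
crlf_after=$(grep -c "$cr" "$workdir/run_unix.sh" || true)

echo "lines with CR before: $crlf_before, after: $crlf_after"
```

The `dos2unix` utility does the same conversion in place if it is installed on your machine.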

Also, could the administrators give me more quota?