On one of my submissions, @jyotish mentioned that submissions are trained on AWS spot instances.

This means that an instance can be shut down at any time.

Checkpoints of the models are made periodically, and after an interruption training is restored from the latest one.

The problem is that many important training variables and data (e.g. the contents of a replay buffer) are not stored in checkpoints. This leads to unstable training, with results that vary depending on how lucky you are not to be interrupted.

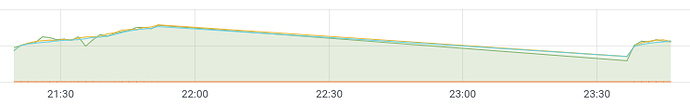

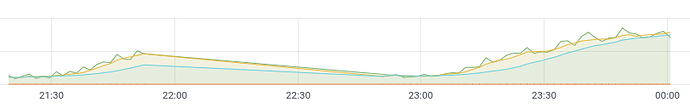

Here are a couple of plots of the mean training return for one of my submissions. The long stretched lines connect far-apart data points, where the agent was interrupted and then restored.

What does the Aicrowd team recommend here? Storing the replay buffer and any other important variables whenever a checkpoint is made?
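
To make the question concrete, here is a minimal sketch of what I mean by storing the replay buffer alongside a checkpoint. It assumes a plain Python training loop. `ReplayBuffer`, `save_checkpoint`, and `load_checkpoint` are hypothetical names I made up for illustration, not part of the competition's training setup or of any framework, so the real hooks would likely look different.

```python
import os
import pickle
import random
from collections import deque


class ReplayBuffer:
    """Hypothetical minimal FIFO replay buffer (illustration only)."""

    def __init__(self, capacity):
        self.storage = deque(maxlen=capacity)

    def add(self, transition):
        self.storage.append(transition)

    def sample(self, batch_size):
        return random.sample(list(self.storage), batch_size)


def save_checkpoint(path, buffer, step):
    """Save the buffer contents, step counter, and RNG state in one file."""
    state = {
        "transitions": list(buffer.storage),
        "capacity": buffer.storage.maxlen,
        "step": step,
        "rng_state": random.getstate(),
    }
    tmp_path = path + ".tmp"
    with open(tmp_path, "wb") as f:
        pickle.dump(state, f)
    # Write to a temp file first, then rename: if the instance is killed
    # mid-write, the previous checkpoint at `path` is left untouched.
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Rebuild the buffer and restore the RNG state; returns (buffer, step)."""
    with open(path, "rb") as f:
        state = pickle.load(f)
    buffer = ReplayBuffer(state["capacity"])
    buffer.storage.extend(state["transitions"])
    random.setstate(state["rng_state"])
    return buffer, state["step"]
```

In a training loop this would be called at the same point where the model checkpoint is written, and `load_checkpoint` would run once on restart if the file exists. For large buffers (e.g. image observations) the file could get big, so saving it less often than the model weights might be a reasonable trade-off.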