Hi everyone!

My name is Stephan Sturges, and I develop (amongst other things) an AI system for UAV called OpenLander that does something very similar to what we are trying to build here in this challenge!

I’m excited about the challenge and have been looking at the data, and I have a few remarks off the top that I thought might be helpful to the organisers, and maybe are some things that can be integrated into the challenge since this is still very early.

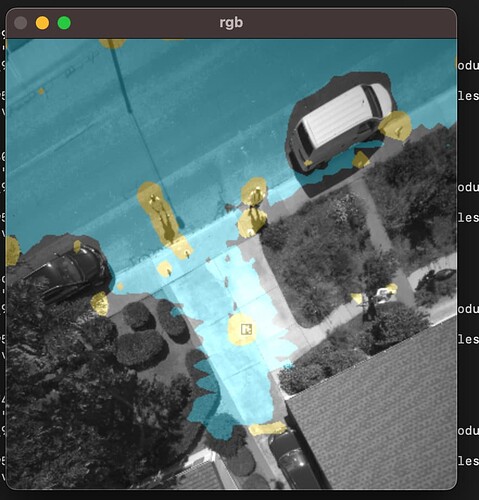

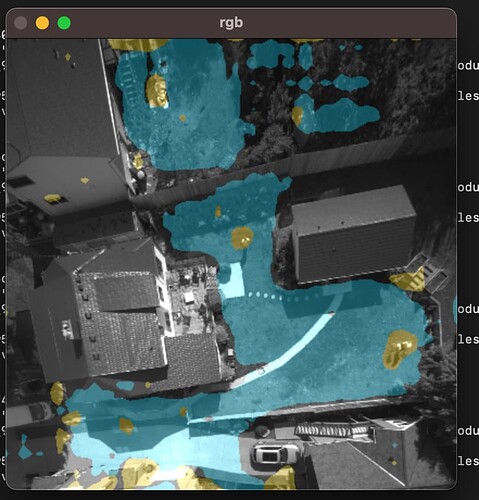

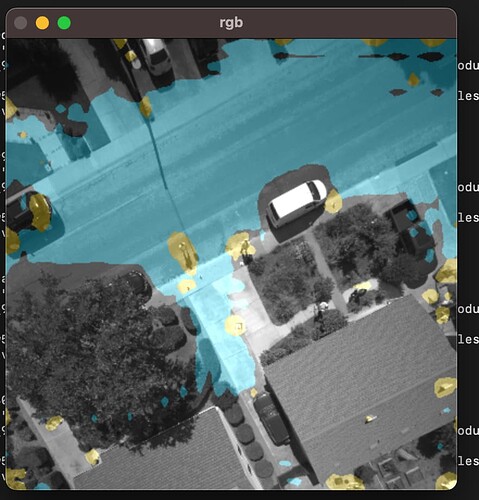

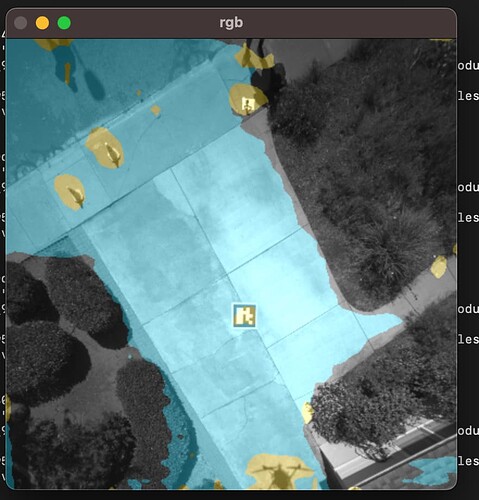

Basically it’s this →

These are the labels we are looking for:

[WATER, ASPHALT, GRASS, HUMAN, ANIMAL, HIGH_VEGETATION, GROUND_VEHICLE, FAÇADE, WIRE, GARDEN_FURNITURE, CONCRETE, ROOF, GRAVEL, SOIL, PRIMEAIR_PATTERN, SNOW].

Based on the quality and quantity of the data there is no way we will be able to get to accurate segmentation on most of these categories from the dataset supplied here, especially because our input is mono instead of RGB … even with quite a large segmentation network this task is not “solvable” to any realistic degree of accuracy, and if we do use a very large network it will lead to a system that won’t run in real-time on embedded hardware, which is what I assume Amazon Prime Air wants in the end!

I would highly recommend a couple of things:

- group some categories

- go with a “default negative” approach: don’t try to teach the neural network what every single thing in each image is, consider everything to be an obstacle by default and teach the neural network to learn what is not an obstacle

- After point (2), layer-on things that you need to detect as a priority such as humans, wires (because they are potentially higher up), the “prime air” pattern, perhaps water, I would definitely add “cars” and “trucks” etc…

I can tell you from experience that trying to learn “concrete” for the real world is impossible and pointless: there are far too many variations in real life and collecting data in the way that we see here ( with real drones and manual annotations) it will take you 10 years to solve that problem on it’s own.

So basically I would recommend making some changes to the categories and grouping right from the start in order to build a challenge that is more “solvable” and has better real-world application potential ![]()