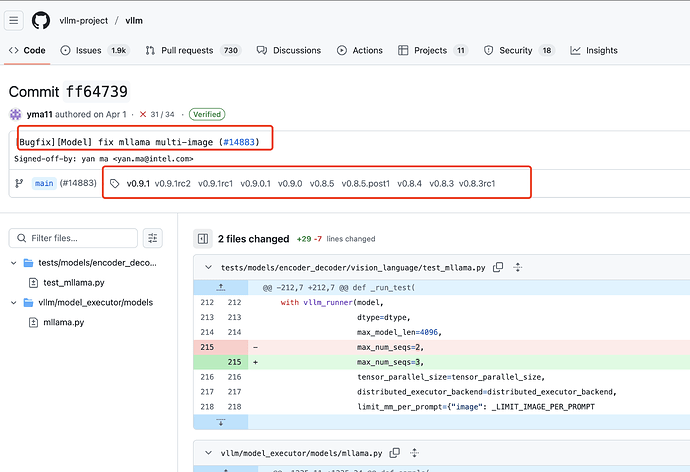

We would like to kindly inquire about the requirement that the vLLM version be lower than 0.8. According to the vLLM repo, multi-image reasoning for Llama 3.2 Vision is only supported in vLLM 0.8.3 and above, and this capability is crucial for enabling effective reasoning with MM-RAG hybrid inputs.

Given the importance of this functionality for our project’s objectives, we would like to ask whether the version constraint could be relaxed, or whether there are specific considerations behind it that we could help address. Your clarification on this matter would greatly assist our development process.