Hello,

Thanks for providing such an interesting problem. I have a few questions after doing some analysis:

-

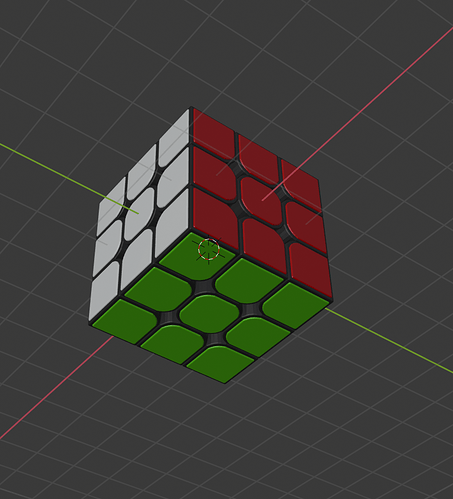

The Rubik’s cube is weird in terms of coloring. It has two same color blues adjacent to a white face. Correct me if I am wrong.

-

(Important) provided the range of x, y, z between 0-360, there will be at least two sets of angles that can lead to the same orientation of Rubik’s cube (indirectly the simulated image). This can be corrected by limiting one angle (pitch, y) in general to lie between (0-180). Even by correcting so, there will be a gimbal lock problem (https://en.wikipedia.org/wiki/Gimbal_lock#In_applied_mathematics).
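Point 2 is easy to check numerically. The sketch below is pure Python and assumes angles in degrees composed as R = Rz(z)·Ry(y)·Rx(x); the thread does not say which convention the renderer uses, so that choice and the helper names are assumptions. It shows two distinct triples in 0-360 mapping to the same rotation matrix, and gimbal lock at y = 90, where only z - x matters. Under this particular convention the pitch would have to be restricted to -90..90 rather than 0-180 to remove the first ambiguity (both 40 and 140 lie in 0-180); 0-180 is the right range for proper Euler conventions such as Z-Y-Z.

```python
import math

def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def matmul(a, b):
    """Plain 3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def euler_to_matrix(x_deg, y_deg, z_deg):
    """Assumed (hypothetical) convention: R = Rz(z) @ Ry(y) @ Rx(x), angles in degrees."""
    x, y, z = (math.radians(v) for v in (x_deg, y_deg, z_deg))
    return matmul(rot_z(z), matmul(rot_y(y), rot_x(x)))

def same_rotation(r1, r2, tol=1e-9):
    """True if two rotation matrices agree element-wise within tol."""
    return all(abs(r1[i][j] - r2[i][j]) < tol for i in range(3) for j in range(3))

# 1) Two different triples in [0, 360) describing the same orientation:
#    (x, y, z) and (x + 180, 180 - y, z + 180), taken modulo 360.
a = (30.0, 40.0, 50.0)
b = ((a[0] + 180) % 360, (180 - a[1]) % 360, (a[2] + 180) % 360)  # (210, 140, 230)
print(same_rotation(euler_to_matrix(*a), euler_to_matrix(*b)))  # True

# 2) Gimbal lock: at y = 90 only (z - x) matters, so shifting x and z together changes nothing.
print(same_rotation(euler_to_matrix(10, 90, 20), euler_to_matrix(70, 90, 80)))  # True
```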

Even assuming I had “oracle” supervision for prediction, half of the time my answer would be wrong because of point 2. Let me know if that is not the case.

Thanks

@bobthebuilder: That is indeed a valid concern, especially in the context of gimbal lock.

We will post a fix to the data and an update soon. Thanks for pointing this out, and for your patience.


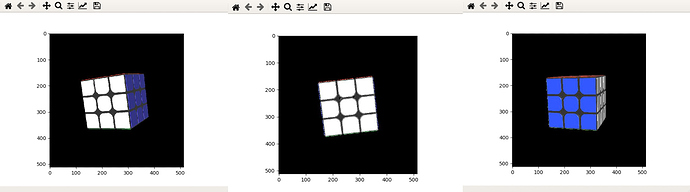

@mohanty: Thanks for getting back to me. I have also added 3 images to support my first query. I will add evidence for the two sets of angles (query 2) soon.

Cube images:

- Left: 028917.jpg (330 < yRot < 360)
- Center: 028454.jpg (yRot ~ 0)
- Right: 008041.jpg (60 < yRot < 90)

All other angles are kept close to zero.

I hope this helps.

@bobthebuilder: I am not sure I correctly understand the images you attached. But can't the left-right positioning of the blue and white faces be affected by whether you are looking at the cube “from the top” or “from the bottom”? Does that explain the images you mention?

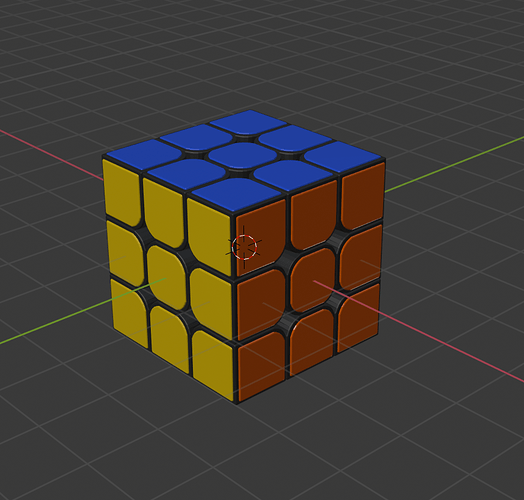

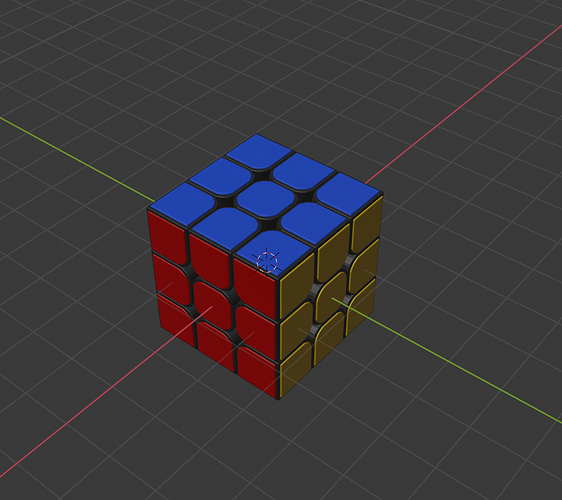

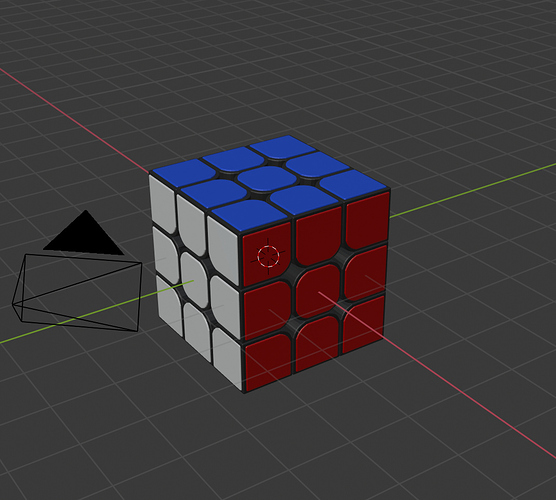

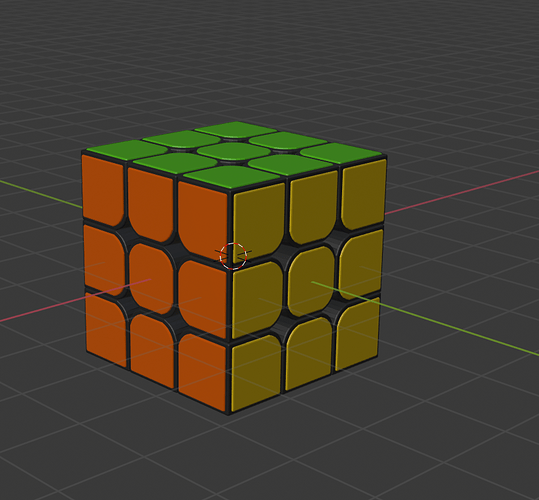

Also, we generate the data from a simple 3D model of a Rubik’s cube. The screenshots above were taken at different camera angles, to give you a proper view of the cube from all sides.

@mohanty: I agree that the viewpoint affects the left-right placement. But in my previous post I kept all angles except “Y” constant (close to zero). In that case, blue shouldn’t be visible in both the right and left images.

(minor point) I guess the cube used here is different. It doesn’t have the cyan side that appears in the training data, and there was no yellow at all.

@bobthebuilder: No, we use exactly the same model. The dataset images are rendered with OpenGL, while for the screenshots above I quickly rendered it in Blender.

Also, the cube doesn't have a “cyan” face; it's probably the blue face looking that way because of the lighting and the material.

In any case, we are preparing a new version of the dataset, in which we also simplify the problem a bit. Please expect an update from us within the next few hours.


@mohanty: Thanks a lot for the update.

(suggestion) You could keep the dataset as it is but define the error metric in terms of rotation matrices or quaternions. You could then re-evaluate all past submissions too.
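A rough sketch of such a metric, assuming Euler angles in degrees composed as R = Rz(z)·Ry(y)·Rx(x) (not confirmed for this dataset) and hypothetical helper names: convert each triple to a unit quaternion and score the angle of the relative rotation between prediction and ground truth. Because q and -q describe the same orientation, the metric uses the absolute value of the scalar part, so a prediction that differs from the label only by an equivalent Euler triple scores zero, while a per-angle Euler error penalises it heavily.

```python
import math

def quat_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    )

def axis_quat(axis, deg):
    """Unit quaternion for a rotation of `deg` degrees about the x, y or z axis."""
    half = math.radians(deg) / 2.0
    v = [0.0, 0.0, 0.0]
    v["xyz".index(axis)] = math.sin(half)
    return (math.cos(half), *v)

def euler_to_quat(x_deg, y_deg, z_deg):
    """Assumed convention, matching R = Rz(z) @ Ry(y) @ Rx(x): q = qz * qy * qx."""
    return quat_mul(axis_quat("z", z_deg), quat_mul(axis_quat("y", y_deg), axis_quat("x", x_deg)))

def geodesic_error_deg(q1, q2):
    """Angle (0 to 180 degrees) of the rotation taking q1 to q2; q and -q are equivalent."""
    w, x, y, z = quat_mul((q1[0], -q1[1], -q1[2], -q1[3]), q2)  # conj(q1) * q2
    return math.degrees(2.0 * math.atan2(math.sqrt(x * x + y * y + z * z), abs(w)))

def naive_euler_error_deg(a, b):
    """Mean wrapped per-angle difference, i.e. a plain Euler-angle metric."""
    diffs = [abs(p - t) % 360.0 for p, t in zip(a, b)]
    return sum(min(d, 360.0 - d) for d in diffs) / 3.0

truth = (30.0, 40.0, 50.0)
pred = (210.0, 140.0, 230.0)  # a different triple describing the same orientation
print(naive_euler_error_deg(pred, truth))  # about 153.3 degrees
print(geodesic_error_deg(euler_to_quat(*pred), euler_to_quat(*truth)))  # ~0, floating-point noise only
```

The same geodesic angle can be computed from rotation matrices as well; quaternions are used here only because the distance is a one-liner once the sign ambiguity is handled.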

@bobthebuilder: I have posted an update on this problem here: [Important] Dataset + Problem Update

Thanks for all your inputs.

We also took the time to simplify the problem a bit more.

We could have patched the problem temporarily by making sure that gimbal-lock configurations do not appear in the training and test sets. But after some initial exploration, we realised that most models would still struggle to learn properly in that loss landscape. Our whole reason for using Euler angles was to make the notion of orientation intuitive for participants. Switching to quaternions now would solve the problems here, but would also make the task more complex from an ML point of view, so we will leave that for a future version of AIcrowd Blitz. In the meantime, we have come up with a simplified problem statement, where the goal is to predict the orientation around an arbitrary axis that we used to generate the training and test sets.
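The update does not say how the simplified single-axis task is scored. Assuming the target is a single angle in degrees in [0, 360), here is a minimal sketch of two common precautions: an error that wraps around (so 355 vs 5 counts as 10 degrees, not 350), and a (sin, cos) target encoding so that a regressor never sees a jump at 0/360. The function names are hypothetical and are not the challenge's evaluator.

```python
import math

def angular_error_deg(pred_deg, true_deg):
    """Smallest absolute difference between two angles, in degrees (0 to 180)."""
    diff = abs(pred_deg - true_deg) % 360.0
    return min(diff, 360.0 - diff)

def encode_angle(deg):
    """Map an angle to a point on the unit circle, so 0 and 360 get the same target."""
    rad = math.radians(deg)
    return math.sin(rad), math.cos(rad)

def decode_angle(sin_val, cos_val):
    """Recover an angle in [0, 360) from a (possibly unnormalised) sin/cos pair."""
    return math.degrees(math.atan2(sin_val, cos_val)) % 360.0

print(angular_error_deg(355, 5))   # 10.0
print(angular_error_deg(90, 270))  # 180.0
print(round(decode_angle(*encode_angle(350.0)), 6))  # 350.0
```

Decoding with atan2 also tolerates an unnormalised (sin, cos) pair, which is convenient when a network predicts the two components independently.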

Cheers,

Mohanty
