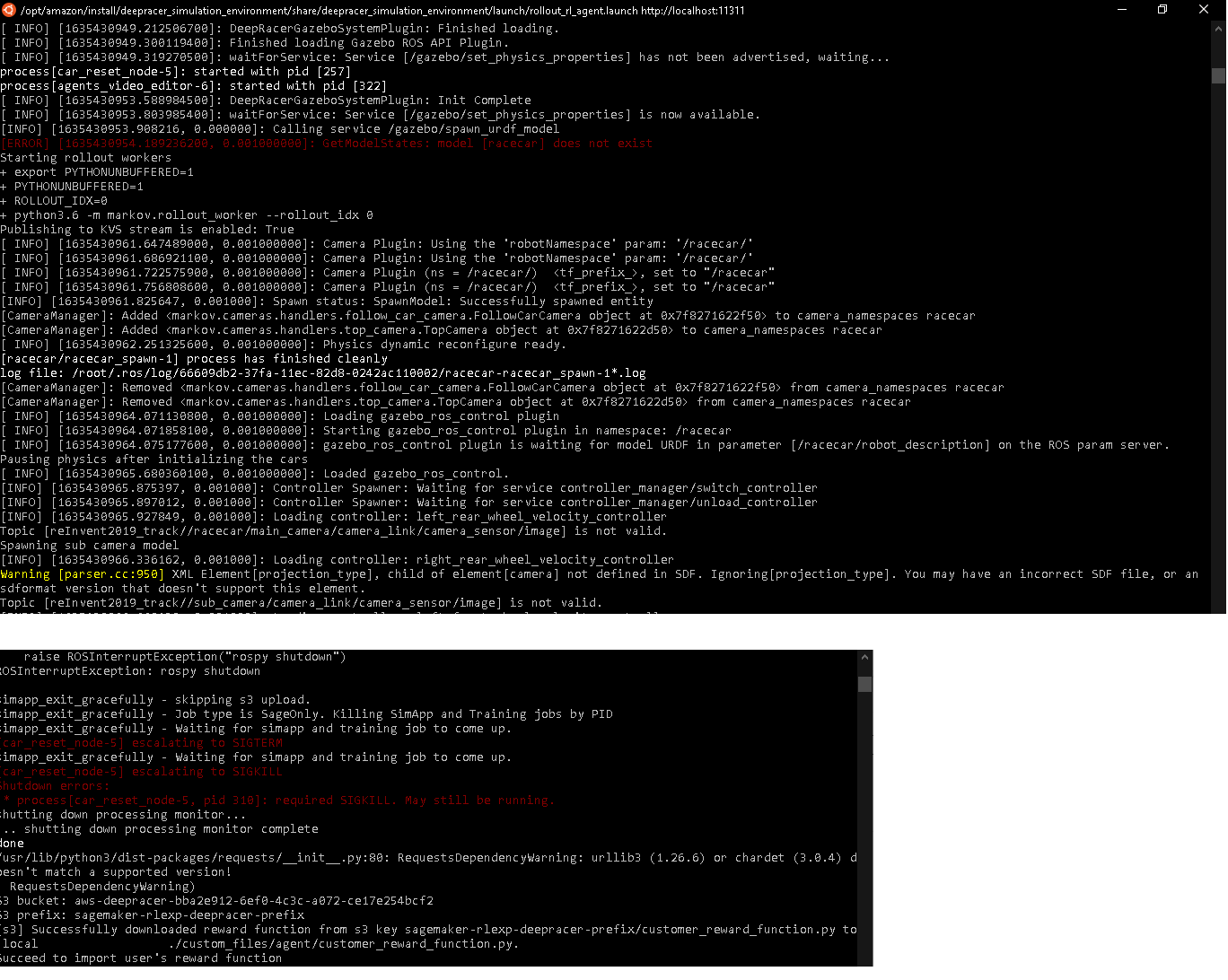

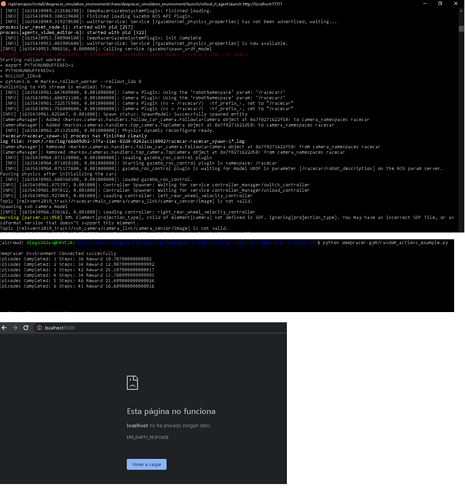

Hi all! I’m a DeepRacer beginner trying to complete my first submission, and I have some questions. I followed the instructions, but I’m not sure whether I connected to the deepracer gym environment correctly.

Today, the first time I ran the start_deepracer_docker.sh file, I got some errors and warnings, and the message “==Waiting for gym client==” didn’t appear. I don’t know what the errors mean (see image 1). The second time I ran it, the message appeared, but with one error: model[racecar] doesn’t exist. After that I could run the randon_action_example.py file with no problem and saw the lines with the reward values (see image 2).

This is where I’m stuck: I don’t know what to do next. How do I customize my agent and its actions? I also tried opening the container in the browser at localhost:5000 to see if there is a simulator interface, but the browser said “Page does not work”… Hope you can help me, guys. Best!

Hi @diego26dav

The DeepRacer gym env should be treated like any other OpenAI Gym environment: you can use any standard RL library, or your own code, to train an agent that maximizes the reward. For this round, the reward is for staying near the center of the lane while driving the car.
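To make the “treat it like any Gym environment” point concrete, here is a minimal sketch of the agent/environment loop. It does not use the real DeepRacer env: `ToyLaneEnv` is a hypothetical stand-in that assumes the classic Gym interface (`reset()` returns an observation, `step(action)` returns `(obs, reward, done, info)`; newer Gym/Gymnasium releases return five values from `step()`), a single “offset from the lane center” number instead of camera images, and three discrete steering actions. The agent is plain tabular Q-learning, picked only because it fits in a few lines; with the real env you would more likely hand it to an existing RL library. Customizing your agent means changing what happens between `env.reset()` and `env.step()`: how actions are chosen and how the rewards update the policy.

```python
import random
from typing import Dict, List, Tuple


class ToyLaneEnv:
    """Hypothetical stand-in for the DeepRacer gym env (NOT the real environment).

    Mimics the classic Gym interface: reset() -> obs, step(a) -> (obs, reward, done, info).
    Observation: integer offset from the lane center, clamped to [-3, 3].
    Actions: 0 = steer left (offset - 1), 1 = straight, 2 = steer right (offset + 1).
    """

    n_states = 7
    n_actions = 3

    def __init__(self, max_steps: int = 20, seed: int = 0) -> None:
        self.max_steps = max_steps
        self.rng = random.Random(seed)
        self.offset = 0
        self.t = 0

    def reset(self) -> int:
        self.offset = self.rng.randint(-3, 3)  # random starting position
        self.t = 0
        return self.offset

    def step(self, action: int) -> Tuple[int, float, bool, Dict]:
        self.offset = max(-3, min(3, self.offset + action - 1))
        self.t += 1
        reward = 1.0 - abs(self.offset) / 3.0  # 1.0 on the center line, 0.0 at the edge
        done = self.t >= self.max_steps  # episode ends on a step limit only
        return self.offset, reward, done, {}


def state_index(obs: int) -> int:
    """Map an offset in -3..3 to a Q-table row in 0..6."""
    return obs + 3


def train_q_learning(env: ToyLaneEnv, episodes: int = 500, alpha: float = 0.5,
                     gamma: float = 0.9, epsilon: float = 0.2,
                     seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0] * env.n_actions for _ in range(env.n_states)]
    for _ in range(episodes):
        obs, done = env.reset(), False
        while not done:
            row = q[state_index(obs)]
            if rng.random() < epsilon:
                action = rng.randrange(env.n_actions)  # explore
            else:
                action = max(range(env.n_actions), key=lambda a: row[a])  # exploit
            next_obs, reward, done, _ = env.step(action)
            # The episode only ends because of the step limit (a truncation, not a
            # true terminal state), so we still bootstrap from next_obs.
            target = reward + gamma * max(q[state_index(next_obs)])
            row[action] += alpha * (target - row[action])
            obs = next_obs
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best action for each offset -3..3 according to the learned Q-table."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]


if __name__ == "__main__":
    q_table = train_q_learning(ToyLaneEnv())
    print(greedy_policy(q_table))
```

Running it should print `[2, 2, 2, 1, 0, 0, 0]`: the agent learns to steer right when it is left of center, steer left when it is right of center, and go straight on the center line, which is the same “stay near the center” behaviour the round’s reward encourages.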