This server file has an issue:

evaluation_utils/mcp_game_servers/super_mario/game/super_mario_env.py

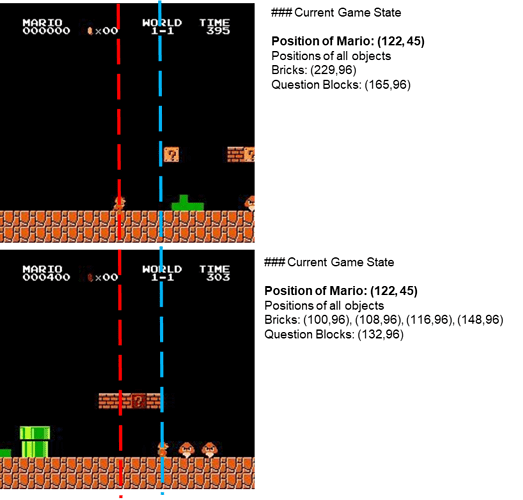

The issue is that get_game_info() should return the actual game state, but to do so it needs access to the latest info dict from the gym environment.

Here’s the proper fix:

File: evaluation_utils/mcp_game_servers/super_mario/game/super_mario_env.py

Location 1: In the configure() method, add initialization (around line 237):

    self.jump_level = 0
    self.mario_loc_history = []
    self.latest_info = {}  # ADD THIS LINE

Location 2: In the step() method, store the info dict before returning (around lines 405-407):

    obs = SuperMarioObs(
        state={"image": state},
        image=self.to_pil_image(state),
        info=info,
        reward={"distance": info['x_pos'], "done": done}
    )
    self.mario_loc_history.append((info['x_pos'], info['y_pos']-34))
    self.latest_info = info  # ADD THIS LINE
    return obs, info['x_pos'], done, trunc, info

Location 3: In the _start_new_episode() method, store the initial info (around lines 298-299):

    self.env.render()
    self.jump_level = 0
    self.mario_loc_history = []
    self.latest_info = last_info  # ADD THIS LINE
    return SuperMarioObs(

Location 4: Replace the get_game_info() method (lines 308-313):

Replace:

    def get_game_info(self) -> dict:
        return {
            "past_mario_action": f"Jump Level {self.jump_level}",
            "mario_loc_history": f"{self.mario_loc_history[-3:]}"
        }

With:

    def get_game_info(self) -> dict:
        # Return the actual game state from the gym environment.
        # This includes: coins, flag_get, life, score, stage, status, time, world, x_pos, y_pos
        return self.latest_info if hasattr(self, 'latest_info') else {}

This way, the agent will receive the actual x_pos, y_pos, and other game state info through the game_info dict.
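The caching pattern used in the four locations can be tried in isolation with a minimal sketch. The class name InfoCachingEnv is hypothetical and is not part of super_mario_env.py, and its step() takes the info dict as an argument instead of calling a real gym environment. It also returns a shallow copy from get_game_info(), which is an assumption of this sketch; the fix above returns the cached dict directly.

```python
from typing import Any, Dict, List, Tuple


class InfoCachingEnv:
    """Hypothetical stand-in for the real env; only the caching logic is modeled."""

    def __init__(self) -> None:
        self.jump_level = 0
        self.mario_loc_history: List[Tuple[int, int]] = []
        self.latest_info: Dict[str, Any] = {}  # cache for the most recent info dict

    def step(self, info: Dict[str, Any]) -> Dict[str, Any]:
        # In the real environment `info` comes from the gym env's step();
        # here the caller passes it in to keep the sketch self-contained.
        self.mario_loc_history.append((info["x_pos"], info["y_pos"] - 34))
        self.latest_info = info
        return info

    def get_game_info(self) -> Dict[str, Any]:
        # Shallow copy (an assumption of this sketch) so callers
        # cannot mutate the cached dict by accident.
        return dict(self.latest_info)


env = InfoCachingEnv()
before = env.get_game_info()  # nothing cached yet, so this is empty
env.step({"x_pos": 40, "y_pos": 79, "coins": 0})
after = env.get_game_info()   # now reflects the info passed to step()
print(before, after)
```

Because latest_info is initialized in the constructor here, no hasattr() guard is needed; the guard in the fix above only matters if get_game_info() can be called before configure() has run.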