Dear participants,

Phase 2 of the Meta CRAG-MM Challenge 2025 is now live. Please find below the final set of updates based on the v0.1.2 release of the dataset and evaluation.

Key Updates for Phase 2

- v0.1.2 Released: Includes updated datasets, mock APIs, and schema changes.
- Submissions now open, based on v0.1.2.
- Validation and public test sets remain accessible after the challenge.
- Leaderboard now reflects performance on the private test set.
- Submission cap: Up to 10 submissions per task per week.
- Egocentric images are downsampled to 960×1280 for evaluation to reflect real-world constraints. Standard image resolution remains unchanged.
- Submission deadline extended to 14 June 2025; team freeze deadline extended to 1 June 2025.
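To preview locally what a downsampled egocentric image would look like, the target size can be computed as in the minimal sketch below. It assumes that 960×1280 means width×height (portrait), that the aspect ratio is preserved, and that images already within the limit are left as they are. The function name `fit_within` is hypothetical, and the exact resizing method used by the evaluator is not stated in this post.

```python
from __future__ import annotations


def fit_within(width: int, height: int, max_w: int = 960, max_h: int = 1280) -> tuple[int, int]:
    """Scale (width, height) down to fit inside max_w x max_h.

    Assumptions: 960x1280 is width x height, the aspect ratio is preserved,
    and images that already fit are returned unchanged (never upscaled).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    # Largest uniform scale factor that keeps both sides within the limits, capped at 1.0.
    scale = min(max_w / width, max_h / height, 1.0)
    # Round to whole pixels, never letting a side collapse to zero.
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    print(fit_within(3024, 4032))  # same 3:4 ratio as the limit -> (960, 1280)
    print(fit_within(800, 600))    # already fits -> (800, 600)
```

With Pillow, the returned tuple can be passed to `Image.resize`, which expects `(width, height)`. Since standard images keep their resolution, this would only apply to the egocentric ones.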

Please note: The rules have been updated to reflect these changes. To continue submitting in this round, you must visit the challenge page, click “Participate”, and accept the updated rules.

What’s New in v0.1.2

- Cleaner data: Removed low-quality or ambiguous image–QA pairs.
- Expanded volume: More samples added across all three tasks.
- Bug fixes: Minor corrections (e.g. consistent domain values).
- Improved web search: Upgraded to BGE text encoder.

Schema Update & Loader Compatibility

- Schema upgraded: Single-turn and multi-turn datasets now follow a dict-of-columns format. No fields have been removed or renamed.
  - If you’re using crag_batch_iterator.py, no changes are needed; it auto-detects the schema layout.
  - If you stream datasets directly from Hugging Face, minor updates are required (usually 2–3 lines). See the [schema update post] for examples and a minimal helper function.
  - The iterator has been patched to:
    - Handle both list and dict layouts
    - Auto-download missing blobs and resize to 960×1280
    - Provide clearer error messages for missing fields
- Updated dependencies required: Ensure your environment uses:
  - torch > 2.6
  - vllm < 0.8
  - cragmm-search-pipeline >= 0.5.0
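For code that reads the Hugging Face data directly, the schema change mostly means that nested records may arrive either as a list of per-item dicts or as a dict of equal-length column lists. The sketch below is an independent illustration, not the helper from the schema update post: `to_rows` is a hypothetical name, and the `turns`, `query`, and `turn_id` fields in the example are assumptions, since the actual column names are not listed here.

```python
from __future__ import annotations

from collections.abc import Mapping, Sequence
from typing import Any


def to_rows(records: Mapping[str, Sequence[Any]] | Sequence[Mapping[str, Any]]) -> list[dict[str, Any]]:
    """Normalize nested records to a list of per-item dicts.

    Accepts either layout:
      list-of-rows:     [{"query": "a", "turn_id": 0}, {"query": "b", "turn_id": 1}]
      dict-of-columns:  {"query": ["a", "b"], "turn_id": [0, 1]}
    """
    if isinstance(records, Mapping):
        columns = list(records)
        lengths = {len(records[col]) for col in columns}
        if len(lengths) > 1:
            raise ValueError(f"columns have different lengths: {sorted(lengths)}")
        n_rows = lengths.pop() if lengths else 0
        # Zip the i-th element of every column back into one row.
        return [{col: records[col][i] for col in columns} for i in range(n_rows)]
    # Already row-oriented: copy each row so callers can modify it safely.
    return [dict(row) for row in records]


if __name__ == "__main__":
    # Hypothetical sample; the "turns", "query", and "turn_id" names are illustrative only.
    sample = {"turns": {"query": ["What is this?", "Where was it made?"], "turn_id": [0, 1]}}
    for turn in to_rows(sample["turns"]):
        print(turn["turn_id"], turn["query"])
```

Normalizing to rows at the point of loading keeps the rest of an agent's code independent of which layout a given dataset version uses.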

Click here for more details

Please pull the latest changes, update your environment, and continue training. If you encounter any issues, run the helper function from the post and share the output — we’re here to help.
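After updating, one way to confirm that the environment matches the dependency constraints listed above is a small stdlib-only check like the sketch below. It reads installed versions with `importlib.metadata` and uses a deliberately simplified comparison of the numeric release segments; `packaging.version` would be the more complete choice. The function names are illustrative and not part of the starter kit.

```python
from __future__ import annotations

import operator
import re
from importlib import metadata

# Constraints as listed in the announcement above.
# Note: with the comparison below, "> 2.6" is not satisfied by 2.6.0 itself.
REQUIREMENTS = [
    ("torch", ">", "2.6"),
    ("vllm", "<", "0.8"),
    ("cragmm-search-pipeline", ">=", "0.5.0"),
]

_OPS = {
    "==": operator.eq, "!=": operator.ne,
    ">": operator.gt, ">=": operator.ge,
    "<": operator.lt, "<=": operator.le,
}


def release_tuple(version: str) -> tuple[int, ...]:
    """Leading numeric release segment only, e.g. '2.6.0+cu124' -> (2, 6, 0).

    Simplification: pre/post/dev tags are ignored; packaging.version.Version
    implements full PEP 440 ordering.
    """
    match = re.match(r"\d+(?:\.\d+)*", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def satisfies(installed: str, op: str, required: str) -> bool:
    a, b = release_tuple(installed), release_tuple(required)
    # Zero-pad so that "2.6" and "2.6.0" compare as equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return _OPS[op](a, b)


def check(requirements=REQUIREMENTS) -> list[str]:
    """Return one message per missing or out-of-range package (empty list means OK)."""
    problems = []
    for name, op, required in requirements:
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed")
            continue
        if not satisfies(installed, op, required):
            problems.append(f"{name}: found {installed}, need {op} {required}")
    return problems


if __name__ == "__main__":
    issues = check()
    print("\n".join(issues) if issues else "All listed constraints are satisfied.")
```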

We look forward to your submissions in this new round.

Hi,

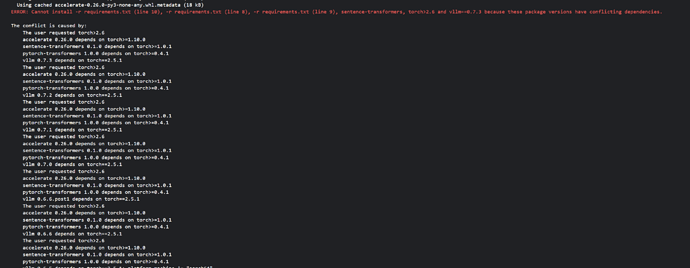

I tried to install the dependencies from the requirements file in the GitLab repository but got this error. It would also be helpful to update local_evaluation.py to use both the validation and public_test splits. Thank you.

Same error here. Please update this.

Hello @harshavardhan3 @fersebasIn

Recent releases of vllm are currently affected by an undefined-symbol error (see the discussion in this issue). In our internal testing, the vllm 0.7.x series functions correctly.

That said, vllm 0.7.x requires torch 2.5.1, and this particular Torch build cannot load CLIP model weights due to the security advisory CVE-2025-32434. We are working on a more robust solution, but the following interim procedure will allow you to run local tests—including both the validation and public_test splits—without encountering these issues:

- In requirements.txt, set:
  vllm==0.7.3
- Remove any explicit version constraint for Torch in the same file.
- Install dependencies:
  pip install -r requirements.txt
- Reinstall Torch with compatible versions:
  pip install torch==2.6 torchvision==0.21.0

After completing these steps, local_evaluation.py should execute successfully on both data splits.
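The first two steps can also be scripted. The sketch below is a hypothetical helper (not part of the starter kit) that rewrites the text of requirements.txt: it pins vllm to 0.7.3 and leaves torch unpinned, keeping comments, pip options, and other packages unchanged. It assumes one requirement per line; any extras or environment markers on the vllm and torch lines are dropped.

```python
from __future__ import annotations

import re

# Leading distribution name of a requirement line; stops at extras, specifiers, or markers.
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def _normalize(name: str) -> str:
    # PEP 503-style normalization, so "VLLM" and "vllm" are treated alike.
    return re.sub(r"[-_.]+", "-", name).lower()


def patch_requirements(text: str, vllm_pin: str = "vllm==0.7.3") -> str:
    """Pin vllm and drop any version constraint on torch; other lines are kept as-is."""
    out: list[str] = []
    saw_vllm = False
    for line in text.splitlines():
        match = _NAME.match(line)
        name = _normalize(match.group(1)) if match else None
        if name == "vllm":
            out.append(vllm_pin)
            saw_vllm = True
        elif name == "torch":
            out.append("torch")  # unpinned; torch is reinstalled explicitly in the last step
        else:
            out.append(line)  # comments, options such as -r, and other packages
    if not saw_vllm:
        out.append(vllm_pin)
    return "\n".join(out) + "\n"
```

Usage, assuming requirements.txt is in the current directory: `Path("requirements.txt").write_text(patch_requirements(Path("requirements.txt").read_text()))` after `from pathlib import Path`. The name match deliberately compares whole distribution names, so a torchvision line is not mistaken for torch.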

Please let us know if you encounter any further difficulties.

Thanks, we will try this solution.

Can I join the competition in Round 2? Is there a limit on new participants, or is it open to new users?

Hi, as per the rules, you cannot join Round 2. Round 1 was open to all teams, but to enter Round 2, your team must have made at least one successful submission in Round 1.

We’re getting this error:

```text
INFO 06-02 09:35:07 model_runner.py:1115] Loading model weights took 23.6553 GB
[rank0]: Traceback (most recent call last):
[rank0]:   File "/workspace/fer/meta-comprehensive-rag-benchmark-starter-kit/local_evaluation.py", line 576, in <module>
[rank0]:     main()
[rank0]:   File "/workspace/fer/meta-comprehensive-rag-benchmark-starter-kit/local_evaluation.py", line 544, in main
[rank0]:     agent=UserAgent(search_pipeline=search_pipeline),
[rank0]:   File "/workspace/fer/meta-comprehensive-rag-benchmark-starter-kit/agents/vainilla_pixtral_agent.py", line 80, in __init__
[rank0]:     self.initialize_models()
[rank0]:   File "/workspace/fer/meta-comprehensive-rag-benchmark-starter-kit/agents/vainilla_pixtral_agent.py", line 90, in initialize_models
[rank0]:     self.llm = vllm.LLM(
…
[rank0]:   File "/usr/local/lib/python3.10/dist-packages/vllm/model_executor/models/pixtral.py", line 518, in forward
[rank0]:     out = xops.memory_efficient_attention(q, k, v, attn_bias=mask)
[rank0]:   File "/usr/local/lib/python3.10/dist-packages/xformers/ops/fmha/__init__.py", line 306, in memory_efficient_attention
[rank0]:     return _memory_efficient_attention(
[rank0]:   File "/usr/local/lib/python3.10/dist-packages/xformers/ops/fmha/__init__.py", line 467, in _memory_efficient_attention
[rank0]:     return _memory_efficient_attention_forward(
[rank0]:   File "/usr/local/lib/python3.10/dist-packages/xformers/ops/fmha/__init__.py", line 486, in _memory_efficient_attention_forward
[rank0]:     op = _dispatch_fw(inp, False)
[rank0]:   File "/usr/local/lib/python3.10/dist-packages/xformers/ops/fmha/dispatch.py", line 135, in _dispatch_fw
[rank0]:     return _run_priority_list(
[rank0]:   File "/usr/local/lib/python3.10/dist-packages/xformers/ops/fmha/dispatch.py", line 76, in _run_priority_list
[rank0]:     raise NotImplementedError(msg)
[rank0]: NotImplementedError: No operator found for memory_efficient_attention_forward with inputs:
[rank0]:      query : shape=(1, 512, 16, 64) (torch.float16)
[rank0]:      key : shape=(1, 512, 16, 64) (torch.float16)
[rank0]:      value : shape=(1, 512, 16, 64) (torch.float16)
[rank0]:      attn_bias : <class 'xformers.ops.fmha.attn_bias.BlockDiagonalMask'>
[rank0]:      p : 0.0
[rank0]: fa2F@v2.5.7-pt is not supported because:
[rank0]:     xFormers wasn't built with CUDA support
[rank0]: cutlassF is not supported because:
[rank0]:     xFormers wasn't built with CUDA support
[rank0]:     operator wasn't built - see python -m xformers.info for more info
[rank0]:[W602 09:35:09.687680317 ProcessGroupNCCL.cpp:1496] Warning: WARNING: destroy_process_group() was not called before program exit, which can leak resources. For more info, please see Distributed communication package - torch.distributed — PyTorch 2.7 documentation (function operator())
```

We’re using the Pixtral-12B model in English. Can anyone help us?
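The last lines of the error point to `python -m xformers.info`. A small sketch for collecting that report, together with the installed versions of torch, torchvision, xformers, and vllm, so the details can be shared in this thread, is below. The choice of packages to list is an assumption, and the script only gathers information; it does not change the environment.

```python
from __future__ import annotations

import subprocess
import sys
from importlib import metadata


def installed_versions(names=("torch", "torchvision", "xformers", "vllm")) -> dict[str, str | None]:
    """Installed version of each distribution, or None if it is not installed."""
    versions: dict[str, str | None] = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def module_report(module: str = "xformers.info", timeout: float = 300.0) -> tuple[int, str]:
    """Run `python -m <module>` with this interpreter; return (exit code, combined output)."""
    proc = subprocess.run(
        [sys.executable, "-m", module],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return proc.returncode, proc.stdout + proc.stderr


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
    code, output = module_report()
    print(output if code == 0 else f"python -m xformers.info exited with {code}:\n{output}")
```

Running it with the same interpreter that launches local_evaluation.py matters, since `sys.executable` is what the report is collected from.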

Is there a bug in the leaderboard right now?

Please check this for more details: Are the leaderboard and submissions working well?

Very important! We are confident that our scores for Task 2 and Task 3 have been swapped.
