Dear Participants,

We are delighted to announce that Phase 2 is launching! Before the launch, here is what is different in Phase 2.

- Batch inference interface. Phase 2 adopts an improved inference interface that supports batch inference. Moreover, with the support of vLLM (we will provide a baseline implementation), inference is significantly accelerated, allowing for a more generous submission quota.

- Eligibility for Phase 2. Thanks to the acceleration brought by batch inference and vLLM, we are glad to announce that all teams who beat the baseline in Phase 1 will proceed to Phase 2, regardless of ranking.

- More GPUs available. In Phase 2, you will have access to 4 NVIDIA T4 GPUs instead of 2 in Phase 1. You will also have a maximum repo size of 200GB.

- More generous submission quota. We have almost doubled the submission quota in Phase 2 compared to Phase 1. Specifically, you can now make at most 5 submissions to each track per week.

-

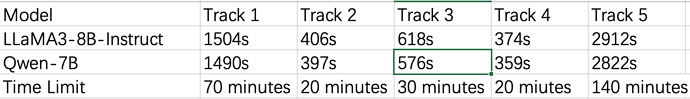

More informed decision of time limits. In Phase 1, the time limit is set to a value that is insufficient for many 7B models (e.g. Mistral). To address that, for the launch of Phase 2, we benchmark two popular models, LLaMA3-8B-Instruct and QWen7B, and accordingly set the time limit. With the support of VLLM, the inference time of the two models, as well as the time limit, are as follows.

We will impose a 10-second limit for a single sample. Although this is shorter than the 15s limit in Phase 1, the average per-sample time is only ~0.2s, so a sample is highly unlikely to need more than 10s.
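For teams adapting their code to batching, the following is a minimal sketch of splitting prompts into batches and tracking the average per-sample time, so that it can be compared against the 10-second limit. It is not the starter-kit interface: `batched`, `run_batches`, and `fake_batch_predict` are hypothetical names introduced only for illustration, and the stand-in model simply upper-cases its input.

```python
import time
from typing import Callable, List, Sequence, Tuple


def batched(items: Sequence[str], batch_size: int) -> List[List[str]]:
    """Split items into consecutive batches of at most batch_size elements."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]


def run_batches(
    prompts: Sequence[str],
    batch_predict: Callable[[List[str]], List[str]],  # hypothetical model call
    batch_size: int,
) -> Tuple[List[str], float]:
    """Run batch_predict over all prompts, preserving input order.

    Returns the outputs and the worst average seconds-per-sample
    observed in any single batch.
    """
    outputs: List[str] = []
    worst_avg = 0.0
    for batch in batched(prompts, batch_size):
        start = time.perf_counter()
        results = batch_predict(batch)
        elapsed = time.perf_counter() - start
        if len(results) != len(batch):
            raise ValueError("batch_predict must return one output per prompt")
        worst_avg = max(worst_avg, elapsed / len(batch))
        outputs.extend(results)
    return outputs, worst_avg


# Stand-in for a real model, used only to make the sketch runnable.
def fake_batch_predict(batch: List[str]) -> List[str]:
    return [prompt.upper() for prompt in batch]


answers, worst_avg = run_batches(
    ["a", "b", "c", "d", "e"], fake_batch_predict, batch_size=2
)
print(answers, f"worst average per-sample time: {worst_avg:.4f}s")
```

The per-batch average is only a proxy. How the evaluator attributes time to individual samples within a batch is an assumption here, so please treat the starter kit as the authority.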

Phase 2 is now live and accepting submissions. A new starter kit is available here. Phase 2 will end at 23:55 UTC on July 10, 2024. We look forward to your ingenious solutions!

Best,

Amazon KDD Cup 2024 Organizers