I got this error in Track 3, but I haven't made a single successful submission yet. Why am I blocked until next week? Could you help me resolve this?

`Submission failed : The participant has no submission slots remaining for today. Please wait until 2024-04-05 16:00:52 UTC to make your next submission.`

I don't know whether this was caused by something I did. I noticed that a submission was stuck, so I closed its issue (I assumed that would kill the process). I'd like to know whether that is the right way to kill a submission, and why I have no submission slots left.

Hi,

Once a submission is made, its quota is used up, and you cannot cancel it to reclaim the quota.

Also, Track 3 is limited to 2 submissions per week, so you will probably have to wait until next week.

However, all of my submissions have failed. I think failed submissions should not count toward the limit; otherwise it is hard for us to get familiar with the platform and debug.

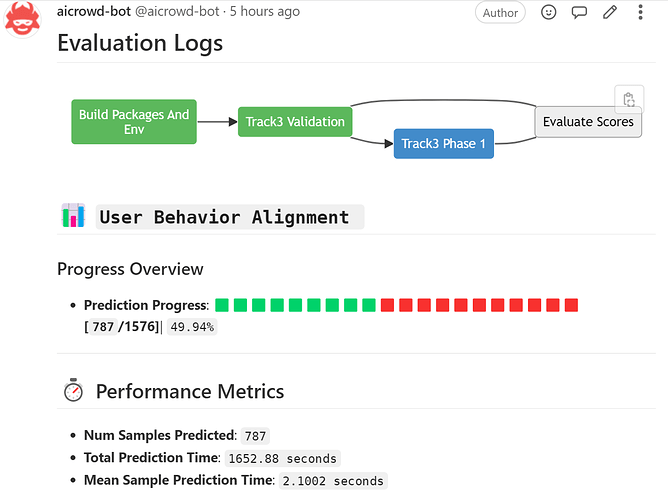

I strongly agree. To be honest, my team has spent most of its time on environment problems: there are always packages that we can install locally but the platform cannot, and it reports that the version cannot be found. We had only one “successful” submission, and it was killed during validation with `AssertionError: Timeout while making a prediction`. We improved the quantization and tried to submit again, but there were no submission slots left. This is discouraging, because our solution works well on our own machines, yet we spend most of our time on things that don't work on the platform.

@chicken_li @kehan_yin We understand your concerns. However, we have to provision GPU resources even for failed submissions. This includes building your submission, which typically takes tens of minutes, so we cannot afford an unlimited number of failed submissions.

Here are some tips that we can provide.

- Use the `Dockerfile` (and `docker_run.sh`) provided in the starter kit to test locally whether the Docker image can be built.

- If your submission builds for one track, it should also build for the other tracks. We allow 4 failures per track per week, which we think should be enough for you to find a working solution.

I submitted the same code twice, changing only the baseline model to Mistral-7B and nothing else. Both times the process timed out without producing any errors, even though the overview documentation says Mistral-7B should run smoothly on this machine. I have submitted 3 times in total; the third attempt hit an environment error. Many teams face these problems. Could you please consider lifting the limit on failed submissions for each team until it has achieved its first successful submission on each track?

Replied in another thread.

We just ran a small test and found that Mistral inference is slower than Vicuna, which may indeed lead to a timeout. “Smoothly” referred to GPU memory, not the overall time limit. We will revise that wording.

Also, regarding failed submissions, we are considering a more generous failure quota, and we are analyzing the existing errors, which should help. In the meantime, you can submit to other tracks to check whether your solution stays within the time limit.

Thanks for your reply. I just got another failure 10 minutes ago, and this time I submitted the unmodified baseline. I sincerely hope you can raise the failure quota for the roughly 420 teams who are exploring and solving the challenges on their own.