Please note that the Task-1 Public Test set (v0.2 release) contains some samples that also appear in the Task-2 Training set (v0.2 release).

If your system is trained on an integrated training set drawn from all the tasks, be aware that it may be “memorizing” the training data and obtaining artificially high results on the Task-1 Public Test set.

While we allow the training data from all tasks to be used for training any task, we want to emphasize that re-using or memorizing the labels of training data in another task is not advisable. Note that there will be no such overlap in the Private Test set, which will be used to determine the final winners and the leaderboard rankings for that task.

Since the goal of this task is to build general query-product ranking/classification models, participants will benefit from either using only the training set provided for each task when building their systems, or evaluating their systems without any overlapping data.
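For participants who want to check their own data for overlap, the following is a minimal sketch. It assumes that an overlapping sample is one whose (query, product ID) pair appears in both sets; that definition, the function name `split_overlap`, and the toy data are illustrative assumptions and not part of the official release.

```python
def split_overlap(train_pairs, test_pairs):
    """Split test pairs into (clean, overlapping) relative to the training pairs.

    Assumption: a sample is identified by its (query, product_id) pair.
    """
    seen = set(train_pairs)
    clean, overlapping = [], []
    for pair in test_pairs:
        if pair in seen:
            overlapping.append(pair)
        else:
            clean.append(pair)
    return clean, overlapping


# Hypothetical toy data, not taken from the actual datasets.
task2_train = [("usb cable", "B001"), ("usb cable", "B002"), ("desk lamp", "B010")]
task1_test = [("usb cable", "B001"), ("desk lamp", "B011"), ("phone case", "B020")]

clean_test, overlapping_test = split_overlap(task2_train, task1_test)
print(len(clean_test), "clean samples;", len(overlapping_test), "overlapping samples")
```

The same set of overlapping pairs can be used in the other direction, to drop those rows from an integrated training set before fitting a Task-1 model.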

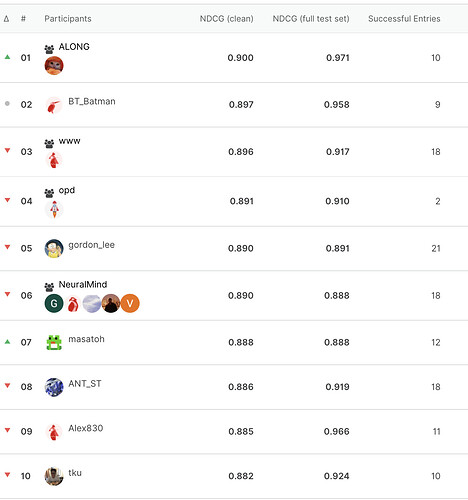

With this in mind, starting today, the leaderboard will show an additional column that reports the same performance metric as before, computed with the overlapping test data excluded. This should help participants understand the generalization ability of their systems.

The score computed on the complete Public Test set will be available under the NDCG (full test set) column, and the score computed on the subset of the Public Test set with the overlapping test data removed will be available under the NDCG (clean) column. The leaderboard will be sorted by NDCG (clean).
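To reproduce the two views locally, the sketch below computes a mean NDCG once on all samples and once with the overlapping pairs excluded. It is only an illustration: the linear gain with a log2 discount, the gain values, and the names `mean_ndcg` and `overlap` are assumptions, and the official evaluator may differ.

```python
import math


def dcg(gains):
    # Discounted cumulative gain with linear gains and a log2 position discount.
    return sum(g / math.log2(rank + 2) for rank, g in enumerate(gains))


def ndcg(gains_in_ranked_order):
    ideal = dcg(sorted(gains_in_ranked_order, reverse=True))
    return dcg(gains_in_ranked_order) / ideal if ideal > 0 else 0.0


def mean_ndcg(rankings, exclude=frozenset()):
    """Average per-query NDCG.

    rankings: {query: [(product_id, gain), ...]} in the system's predicted order.
    exclude:  set of (query, product_id) pairs dropped before scoring.
    """
    scores = []
    for query, ranked in rankings.items():
        gains = [g for pid, g in ranked if (query, pid) not in exclude]
        if gains:  # skip queries left with no samples
            scores.append(ndcg(gains))
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical toy predictions and gains, not real leaderboard data.
rankings = {
    "usb cable": [("B001", 1.0), ("B003", 0.0), ("B004", 1.0)],
    "desk lamp": [("B011", 0.0), ("B012", 1.0)],
}
overlap = {("usb cable", "B001")}  # pairs also present in another task's training set

ndcg_full = mean_ndcg(rankings)
ndcg_clean = mean_ndcg(rankings, exclude=overlap)
print(f"NDCG (full test set): {ndcg_full:.4f}")
print(f"NDCG (clean): {ndcg_clean:.4f}")
```

In this toy case the overlapping pair is a relevant item ranked first, so removing it lowers the score. A gap of this kind between the two columns is the signal that a system may be benefiting from memorized samples.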

Leaderboard snippet (ranks at the time of posting)
