How to know why my submission failed?

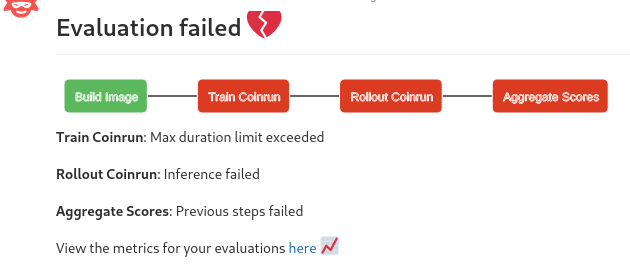

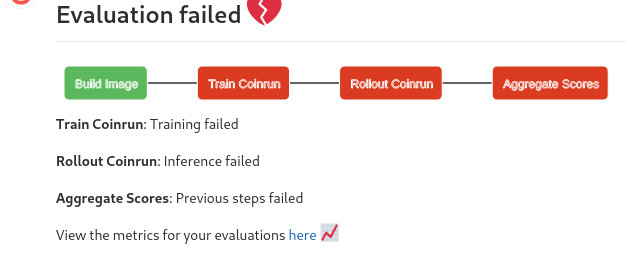

When things go south, we try our best to provide you with the most relevant message on the GitLab issue page. These messages look something like this.

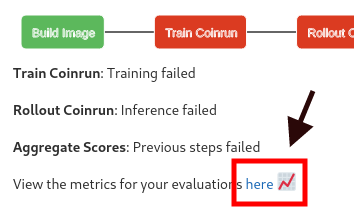

Well, “Training failed” is not of much use on its own. No worries! We've got you covered. You can click on the Dashboard link on the issue page.

Scroll a bit down. You should find a pane that displays the logs emitted by your training code.

Note: We do not provide the logs for rollouts on the dashboard to avoid data leaks. We will provide the relevant logs for the rollouts upon tagging us.

Common errors faced

Error says I’m requesting x/1.0 GPUs where x > 1

Make sure that num_gpus + (num_workers+1)*num_gpus_per_worker is always <= 1.
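The check above can be sketched in a few lines of Python before you submit. This is only a minimal illustration: the function names are hypothetical (they are not part of rllib), the formula is the one stated above, and the values in the example are placeholders to replace with those from your own config.

```python
def total_gpu_request(num_gpus, num_workers, num_gpus_per_worker):
    """Total GPU fraction requested, using the formula stated above."""
    return num_gpus + (num_workers + 1) * num_gpus_per_worker


def fits_on_one_gpu(num_gpus, num_workers, num_gpus_per_worker, tol=1e-9):
    """True if the total request stays within one GPU.

    A small tolerance absorbs floating-point rounding in the sum.
    """
    total = total_gpu_request(num_gpus, num_workers, num_gpus_per_worker)
    return total <= 1.0 + tol


if __name__ == "__main__":
    # Placeholder values for illustration; use the ones from your config.
    print(total_gpu_request(0.6, 6, 0.05))  # roughly 0.95
    print(fits_on_one_gpu(0.6, 6, 0.05))    # within one GPU
    print(fits_on_one_gpu(1.0, 2, 0.1))     # over-requests the GPU
```

If the second function returns False for your settings, lower num_gpus or num_gpus_per_worker until the total is at most 1.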

My submission often times out

A low throughput can be due to various reasons. Checking the following parameters is a good starting point:

Adjust the number of rollout workers and the number of gym environments each worker should sample from. These are good initial values.

num_workers: 6

num_envs_per_worker: 20

Make sure that your training worker uses a GPU.

num_gpus: 0.6

Make sure that your rollout workers use a GPU.

num_gpus_per_worker: 0.05

Note: RLlib does not allocate the specified fraction of GPU memory to the workers. For example, setting num_gpus: 0.5 does not mean that half of the GPU memory is allocated to the training process. These parameters matter mostly when you have multiple GPUs, where RLlib uses them to decide which worker goes on which GPU. Since the evaluations run on a single GPU, setting num_gpus and num_gpus_per_worker to a nominal positive value should suffice. For more information on the precise tuning of these parameters, refer.

Figuring out the right values for num_gpus and num_gpus_per_worker

You can run nvidia-smi on your machine when you start the training locally. It should report how much memory each of the workers is taking on your GPU. You can expect them to take more or less the same amount of memory during the evaluation. For example, say you are using num_workers: 6 for training locally. The output of nvidia-smi should look similar to this.

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.64.00    Driver Version: 440.64.00    CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce GTX 208...  Off  | 00000000:03:00.0 Off |                  N/A |
| 43%   36C    P2   205W / 250W |   6648MiB / 11178MiB |     90%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|    0     16802      C   ray::RolloutWorker.sample()                  819MiB |
|    0     16803      C   ray::PPO.train()                            5010MiB |
|    0     16808      C   ray::RolloutWorker.sample()                  819MiB |
|    0     16811      C   ray::RolloutWorker.sample()                  819MiB |
|    0     16813      C   ray::RolloutWorker.sample()                  819MiB |
|    0     16831      C   ray::RolloutWorker.sample()                  819MiB |
|    0     16834      C   ray::RolloutWorker.sample()                  819MiB |
+-----------------------------------------------------------------------------+

From this output, I know that a single rollout worker is taking around 819 MB and the trainer is taking around 5010 MB of GPU memory. The evaluations run on a Tesla P100, which has 16 GB of memory. So, I would set num_workers to 12, which keeps the estimated usage just under 16 GB. The GPU usage during the evaluation should roughly be

5010 MB + 819 MB * (12 + 1) = 15657 MB, or about 15.3 GB

Note: The above values are dummy values. Please do not use these values when making a submission.
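The estimate above can be reproduced with a short Python sketch. The function names are hypothetical, the numbers are the same dummy values as in the example, and the sketch assumes 16 GB means 16 * 1024 MiB; the memory actually usable on the card is likely a little lower, so leave some headroom.

```python
def estimated_usage_mib(trainer_mib, worker_mib, num_workers):
    """GPU memory estimate: trainer plus (num_workers + 1) worker processes."""
    return trainer_mib + worker_mib * (num_workers + 1)


def max_num_workers(trainer_mib, worker_mib, gpu_mib):
    """Largest num_workers whose estimate still fits in gpu_mib."""
    # Number of worker-sized processes that fit next to the trainer.
    worker_slots = (gpu_mib - trainer_mib) // worker_mib
    # The formula counts num_workers + 1 processes, so give one slot back.
    return max(worker_slots - 1, 0)


if __name__ == "__main__":
    # Dummy values read off the nvidia-smi example; measure your own.
    trainer_mib, worker_mib = 5010, 819
    gpu_mib = 16 * 1024  # assumption: 16 GB taken as 16384 MiB

    n = max_num_workers(trainer_mib, worker_mib, gpu_mib)
    usage = estimated_usage_mib(trainer_mib, worker_mib, n)
    print(f"num_workers={n}, estimated usage={usage} MiB ({usage / 1024:.1f} GiB)")
```

Measure the per-process memory with your own model and config before picking num_workers, since both numbers change with the network size and batch settings.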

Run your code locally to avoid wasting your submission quota

We assume that you made necessary changes in run.sh.

Make sure that your training phase runs fine

./run.sh --train

Make sure that the rollouts work.

./run.sh --rollout

If you are using "docker_build": true without modifying the Dockerfile, only to install Python packages from requirements.txt:

- Create a virtual environment using conda, virtualenv, or python3 -m venv.

- Activate your new environment.

- Run pip install -r requirements.txt.

- Run ./run.sh --train.

- Run ./run.sh --rollout.

In case you are using a completely new docker image, please build on top of the Dockerfile provided in the starter kit. You are free to choose a different base image; however, you need to make sure that all the packages that we were initially installing are still available. To avoid failures in the docker build step, we recommend that you try running it before making a submission.

docker build .

This might take quite a while the first time you run it. But it will be blazing fast from the next time on!

Not able to figure out what went wrong? Just tag @jyotish / @shivam on your issues page. We will help you!