When I use the upload button to upload my checkpoint, this happens:

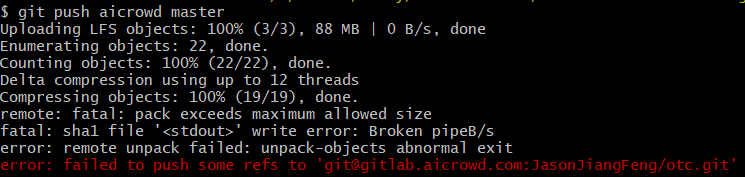

And when I use git LFS to upload instead, I also get an error saying the upload failed:

remote: fatal: pack exceeds maximum allowed size

fatal: sha1 file '<stdout>' write error: Broken pipes

error: remote unpack failed: unpack-objects abnormal exit

It seems that the maximum size of the repo is 50MB, and we cannot change that limit since we are not authorized.

@JasonJiangFeng: For larger files, you will have to use git-lfs.

More information is available at: https://docs.gitlab.com/ee/workflow/lfs/manage_large_binaries_with_git_lfs.html

We used git-lfs before, and the same error occurs when writing a file larger than 50MB.

Possibly, you have some larger files in the git history.

You will have to move all the large binaries in the git history to LFS using git lfs migrate: https://manpages.debian.org/unstable/git-lfs/git-lfs-migrate.1.en.html
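Before migrating, it may help to check whether any large blob is actually present in the history. Here is a minimal sketch of that check, assuming `git` is on the PATH and taking the 10 MB figure quoted in this thread as the threshold. The function names are hypothetical, not part of git or git-lfs. It feeds the output of `git rev-list --objects --all` into `git cat-file --batch-check` and keeps only blobs above the limit. LFS pointer files are tiny, so anything reported here was committed as a regular git object.

```python
import subprocess

# Assumed threshold: the 10 MB limit mentioned in this thread.
LIMIT_BYTES = 10 * 1024 * 1024


def parse_large_blobs(batch_check_output, limit=LIMIT_BYTES):
    """Return (size, path) pairs for blobs larger than `limit` bytes.

    Expects lines of the form "<type> <size> <path>", as printed by
    git cat-file --batch-check='%(objecttype) %(objectsize) %(rest)'.
    """
    large = []
    for line in batch_check_output.splitlines():
        parts = line.split(" ", 2)
        # Skip commits, trees, and any malformed or "missing" lines.
        if len(parts) < 3 or parts[0] != "blob":
            continue
        size = int(parts[1])
        if size > limit:
            large.append((size, parts[2]))
    return sorted(large, reverse=True)


def large_blobs_in_history(repo_dir="."):
    """List every blob reachable from any ref that exceeds the limit."""
    objects = subprocess.run(
        ["git", "rev-list", "--objects", "--all"],
        cwd=repo_dir, check=True, capture_output=True, text=True,
    ).stdout
    sizes = subprocess.run(
        ["git", "cat-file", "--batch-check=%(objecttype) %(objectsize) %(rest)"],
        cwd=repo_dir, input=objects, check=True, capture_output=True, text=True,
    ).stdout
    return parse_large_blobs(sizes)
```

Calling `large_blobs_in_history()` from the repository root returns an empty list when no oversized blob exists in any commit. If the checkpoint shows up, it was committed directly at some point (for example before `git lfs track` was set up), and that commit still has to be rewritten with `git lfs migrate`.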

But this is the first time we have uploaded the checkpoint file, which is only 83MB. It is not possible that large binaries already exist in the git history.

The maximum allowed file size is 10MB. Any file bigger than that has to be moved to LFS. Can you confirm that is the case?
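One way to confirm this is to list every file in the working tree that exceeds the limit and compare that list against what LFS is tracking. The sketch below assumes the 10 MB threshold quoted above and uses a hypothetical helper name; it only inspects checked-out files, not the history.

```python
import os


def files_over_limit(root=".", limit=10 * 1024 * 1024):
    """Return (size, relative_path) for working-tree files larger than `limit` bytes."""
    found = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Skip git's own object store; only the checked-out files matter here.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue
            size = os.path.getsize(path)
            if size > limit:
                found.append((size, os.path.relpath(path, root)))
    return sorted(found, reverse=True)
```

Every path returned by `files_over_limit()` should also appear in the output of `git lfs ls-files`. A path that is missing there is still being pushed as a regular git object.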

I have used LFS to upload the big files, but the same error still happens.