Nice job and insights!

Could you reveal which models you used for the final score? Was it some vision transformer or just tricky convolutions? It seems people here are not as talkative as on Kaggle.

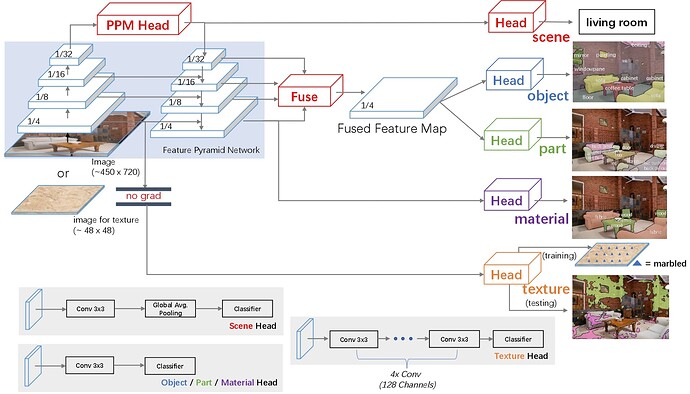

Personally, I used 6 fine-tuned FPNs with standard augs (flips, crops, resize, etc.) and a mixed loss: CE + Dice.
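For anyone unfamiliar with mixing CE and Dice, here is a minimal, framework-free sketch of the arithmetic. It is not the code used in the competition: the function name `ce_dice_loss`, the `dice_weight` parameter, and the equal default weighting are all assumptions made for illustration. A real training loop would compute the same thing with tensor ops on the model's softmax output.

```python
import math


def ce_dice_loss(probs, targets, num_classes, dice_weight=1.0, eps=1e-6):
    """Hypothetical helper: cross-entropy plus soft Dice over a flat list of pixels.

    probs   -- list of per-pixel class-probability lists (each sums to 1)
    targets -- list of integer class labels, one per pixel
    """
    n = len(targets)

    # Cross-entropy: mean negative log-probability of the true class.
    ce = -sum(math.log(max(p[t], eps)) for p, t in zip(probs, targets)) / n

    # Soft Dice: per-class overlap between predicted probabilities and
    # one-hot targets, averaged over classes.
    dice_sum = 0.0
    for c in range(num_classes):
        intersection = sum(p[c] for p, t in zip(probs, targets) if t == c)
        pred_sum = sum(p[c] for p in probs)
        true_sum = sum(1 for t in targets if t == c)
        dice_sum += (2.0 * intersection + eps) / (pred_sum + true_sum + eps)
    dice = 1.0 - dice_sum / num_classes

    # dice_weight = 1.0 is an assumed weighting, not a tuned value.
    return ce + dice_weight * dice


# Two pixels, two classes: a perfect prediction and a maximally unsure one.
perfect = ce_dice_loss([[1.0, 0.0], [0.0, 1.0]], [0, 1], num_classes=2)
unsure = ce_dice_loss([[0.5, 0.5], [0.5, 0.5]], [0, 1], num_classes=2)
print(perfect, unsure)
```

The CE term pushes each pixel toward the right class, while the Dice term rewards overlap per class, which tends to help when classes are imbalanced.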

Segmentation: a single model, upernet-convnext from the mmsegmentation library (PyTorch), trained in fp16 to maximize the batch size on a single Colab Pro GPU. Lovasz loss.

Depth: an ensemble of 3 models in TensorFlow 1.6 (I ran into compatibility issues with TF), using a deeplabv3+ model with an Xception backbone and a U-Net with EfficientNet backbones. PyTorch would most likely have been a better choice, but I ran out of time to test it for this competition. The loss formula was approximated from the PyTorch code provided by the host.

Thanks!

As I understand it, UperNet uses an FPN under the hood, just in a more optimized manner? That would explain why we had almost identical scores.