I found several possible errors in the first 10 examples.

"interaction_id": "7b267839-848a-409c-b74e-33ce86d3f5a6",

"query_time": "03/21/2024, 23:51:43 PT",

"domain": "music",

"question_type": "simple",

"static_or_dynamic": "static",

"query": "what’s the date of after 7’s last song/album?",

"answer": "1997-03-11",

"alternative_answers": [],

There is no mention of 1997-03-11 anywhere in the references.

“interaction_id”: “dcf34e25-e5a1-4afb-8233-e357fdc1ed97”,

“query_time”: “03/21/2024, 23:45:52 PT”,

“domain”: “music”,

“question_type”: “simple”,

“static_or_dynamic”: “static”,

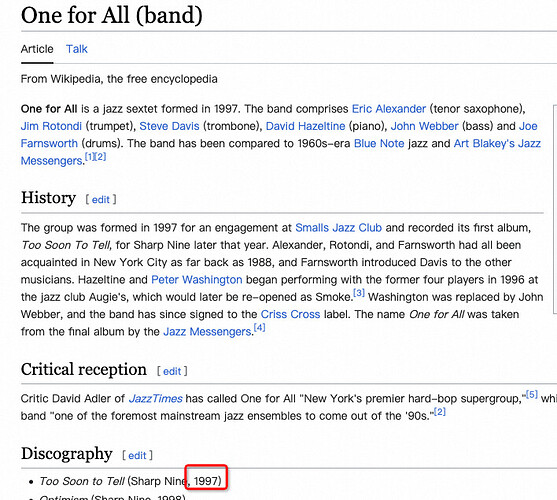

“query”: “when did one for all start performing together?”,

“answer”: “1993”,

“alternative_answers”: [],

Isn't the answer 1997?

"interaction_id": "b0f8ce10-4511-4f1b-9cf3-29d1c63c99e5",

"query_time": "03/17/2024, 16:50:22 PT",

"domain": "finance",

"question_type": "set",

"static_or_dynamic": "static",

"query": "what are the top 3 tech stocks that rise in value in january 2024",

"answer": "top 3 tech stocks that rise in value in january 2024 are nvidia, oracle and netflix",

"alternative_answers": [],

The references mention many stocks, not just these three.

"interaction_id": "6ad1e71e-273c-4433-b671-c3beacefd3ad",

"domain": "movie",

"question_type": "simple",

"static_or_dynamic": "slow-changing",

"query": "is the original dialogue of louis, martin & michael different?",

"answer": "en",

"alternative_answers": [],

"interaction_id": "7bb29eb4-12f9-45f9-bf8a-66832b3c8962",

"query_time": "03/10/2024, 23:19:21 PT",

"domain": "sports",

"question_type": "post-processing",

"static_or_dynamic": "static",

"query": "how many 3-point attempts did steve nash average per game in seasons he made the 50-40-90 club?",

"answer": "4 3-points attempts per game",

The answer “4 3-points attempts per game” is not mentioned in the references.

“interaction_id”: “68261da9-bf1a-4675-b0b8-ffd0c2f0c4c9”,

“query_time”: “03/19/2024, 23:50:28 PT”,

“domain”: “movie”,

“question_type”: “simple”,

“static_or_dynamic”: “static”,

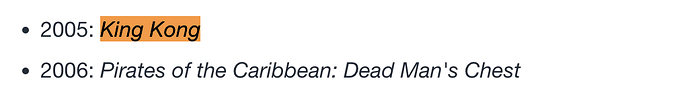

“query”: “which movie won the oscar best visual effects in 2006?”,

“answer”: “king kong”,

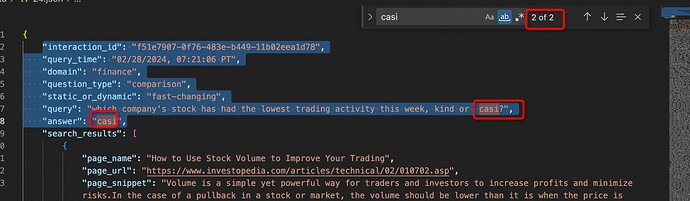

"interaction_id": "f51e7907-0f76-483e-b449-11b02eea1d78",

"query_time": "02/28/2024, 07:21:06 PT",

"domain": "finance",

"question_type": "comparison",

"static_or_dynamic": "fast-changing",

"query": "which company’s stock has had the lowest trading activity this week, kind or casi?",

"answer": "casi",

CASI doesn’t appear in the references at all!

“interaction_id”: “642bbc21-ed9d-42e7-8455-382a6f2b0f08”,

“query_time”: “03/17/2024, 16:55:12 PT”,

“domain”: “sports”,

“question_type”: “simple_w_condition”,

“static_or_dynamic”: “static”,

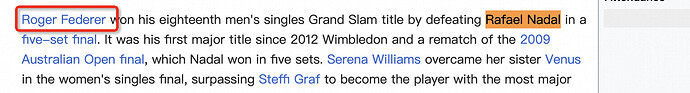

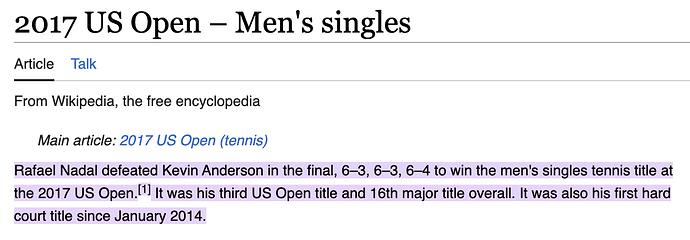

“query”: “which player took home grand slam championship in 2017?”,

“answer”: “rafael nadal won his 16th grand slam title at the 2017 u.s. open”,


It is Roger Federer, not Rafael Nadal.

I am worried that data quality this poor cannot ensure that the competition runs properly.

At least on this one, the Oscars are a bit unusual, and this may be where some ambiguity creeps in. Each Oscars ceremony gives awards to films released in the previous year. So while King Kong was released in 2005, it won the Oscar in 2006. From the Oscars website:

The 78th Academy Awards | 2006

Kodak Theatre at Hollywood & Highland Center

Sunday, March 5, 2006

Honoring movies released in 2005

However, I agree with the rest of your analysis. I have run into a number of cases where manually inspecting the evidence provided for a query shows either that the given answer is incorrect or that I cannot find where in the evidence the correct answer could be retrieved from.
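Part of this manual inspection can be automated as a first pass. The sketch below flags examples whose ground-truth answer (or any alternative answer) never appears verbatim in the search results. It assumes the data is stored as JSON Lines with one example per line and that each entry in `search_results` keeps its text under `page_snippet` and `page_result`; those key names and the helper functions are assumptions, so check them against the actual files.

```python
import json
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't hide a match."""
    return re.sub(r"\s+", " ", text).lower().strip()


def answer_in_evidence(example: dict) -> bool:
    """Return True if the answer or any alternative answer appears verbatim
    in at least one search result.

    Assumption: each search result is a dict whose text lives under the
    keys "page_snippet" and "page_result" (adjust to the real schema).
    """
    candidates = [example["answer"], *example.get("alternative_answers", [])]
    candidates = [normalize(c) for c in candidates if c]
    for result in example.get("search_results", []):
        # Concatenate the assumed text fields; missing or None values become "".
        text = normalize(" ".join(
            str(result.get(key) or "") for key in ("page_snippet", "page_result")
        ))
        if any(c in text for c in candidates):
            return True
    return False


def flag_unsupported(path: str) -> list[str]:
    """Hypothetical helper: interaction_ids whose answer is not found in the evidence.

    Assumes a JSON Lines file with one example per line.
    """
    flagged = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():
                example = json.loads(line)
                if not answer_in_evidence(example):
                    flagged.append(example["interaction_id"])
    return flagged


demo = {
    "interaction_id": "demo",
    "answer": "1993",
    "alternative_answers": [],
    "search_results": [{"page_snippet": "The group formed in 1993.", "page_result": ""}],
}
print(answer_in_evidence(demo))  # True
```

A flagged example is only a candidate for manual review, not a confirmed error: the answer may be phrased differently in the page (e.g. “March 11, 1997” versus “1997-03-11”), `page_result` may be raw HTML whose tags split the text, and a verbatim check like this does not look at any other evidence source.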

Hi @jjplane and @mitchelldehaven,

Thank you for the discussion! A few clarifications:

- Web pages are not the only source of reference; there is also the Mock API.

- Not all questions are guaranteed to have an answer in the provided references (web pages and Mock API), just as a RAG system may encounter in practice. In such cases, the best answer is “I don’t know”.

- V3 data has been released, and some of these errors are fixed there.

Among the selected examples:

- b0f8ce10-4511-4f1b-9cf3-29d1c63c99e5 asks for the top 3, so the answer lists only three stocks.

- 6ad1e71e-273c-4433-b671-c3beacefd3ad is fixed in V3.

- 68261da9-bf1a-4675-b0b8-ffd0c2f0c4c9 is correct, as @mitchelldehaven mentioned: King Kong was produced in 2005 but won the award in 2006.

- 642bbc21-ed9d-42e7-8455-382a6f2b0f08 is also correct (please see the attached reference).

- 7b267839-848a-409c-b74e-33ce86d3f5a6 and dcf34e25-e5a1-4afb-8233-e357fdc1ed97 appear to be real errors. We will fix them in a future release. Thank you for reporting them.

The CRAG Team

642bbc21-ed9d-42e7-8455-382a6f2b0f08: if “grand slam championship” means any one of the four tournaments, the answer should contain several names instead of arbitrarily picking one as the ground truth. The 2017 singles champions were:

- Australian Open 2017
  - Men’s Singles: Roger Federer
  - Women’s Singles: Serena Williams
- French Open 2017 (Roland Garros)
  - Men’s Singles: Rafael Nadal
  - Women’s Singles: Jeļena Ostapenko
- Wimbledon 2017
  - Men’s Singles: Roger Federer
  - Women’s Singles: Garbiñe Muguruza
- US Open 2017
  - Men’s Singles: Rafael Nadal
  - Women’s Singles: Sloane Stephens
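Under that reading, the ground truth is a set of names rather than a single one. A minimal sketch, covering singles only as in the list above; the dictionary layout and the lenient `is_acceptable` rule (accept any answer that names at least one champion) are hypothetical illustrations, not CRAG's grading procedure.

```python
# 2017 Grand Slam singles champions, taken from the list above.
CHAMPIONS_2017: dict[str, dict[str, str]] = {
    "Australian Open": {"men": "Roger Federer", "women": "Serena Williams"},
    "French Open": {"men": "Rafael Nadal", "women": "Jeļena Ostapenko"},
    "Wimbledon": {"men": "Roger Federer", "women": "Garbiñe Muguruza"},
    "US Open": {"men": "Rafael Nadal", "women": "Sloane Stephens"},
}


def valid_answers(champions: dict[str, dict[str, str]]) -> set[str]:
    """Collect every player who won at least one of the four tournaments."""
    return {name for draws in champions.values() for name in draws.values()}


def is_acceptable(prediction: str, champions: dict[str, dict[str, str]]) -> bool:
    """Hypothetical lenient rule: accept a prediction that names any valid champion."""
    text = prediction.lower()
    return any(name.lower() in text for name in valid_answers(champions))


print(sorted(valid_answers(CHAMPIONS_2017)))
# ['Garbiñe Muguruza', 'Jeļena Ostapenko', 'Rafael Nadal', 'Roger Federer', 'Serena Williams', 'Sloane Stephens']
print(is_acceptable("rafael nadal won his 16th grand slam title at the 2017 u.s. open", CHAMPIONS_2017))  # True
print(is_acceptable("Roger Federer", CHAMPIONS_2017))  # True
```

With a rule like this, both the dataset's Nadal answer and a Federer answer count as correct, which is the point of the objection.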