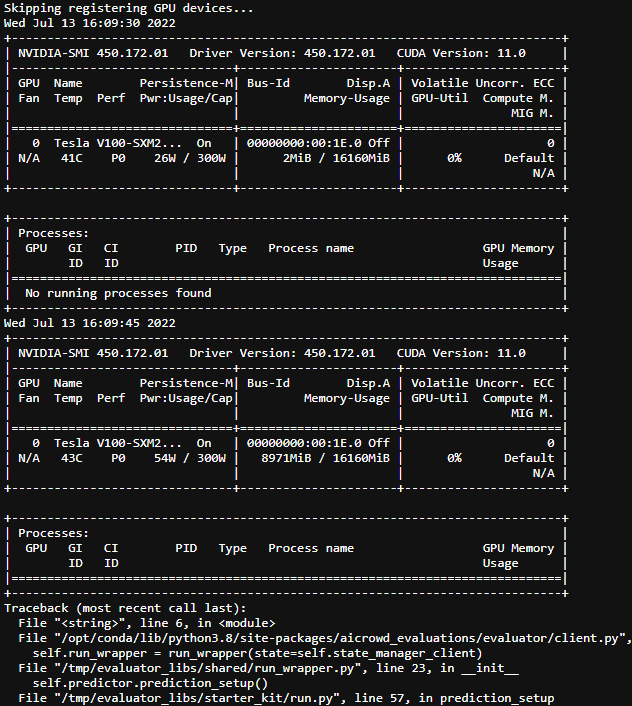

Hi @shivam and @mohanty. To debug this, I print the output of nvidia-smi in my prediction_setup method, right before loading my model. The method seems to be executed twice, which is why I’m getting this CUDA out of memory error.

The first time the model loads correctly, but it can’t load a second time without the GPU memory being released first.

Any idea why it’s loading 2x?

Hi @vitor_amancio_jerony, thanks for the logs.

We have identified a bug in the evaluation phase that caused models to be loaded twice, and it is now fixed.

We have also restarted your latest submission and are monitoring it for any similar error.
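For anyone who wants to guard against a repeated setup call on their own side, here is a minimal sketch. It assumes a predictor class with a `prediction_setup` method, as mentioned above. The class name `GuardedPredictor`, the `load_model` callable and the `setup_calls` counter are hypothetical names used for illustration and are not part of the starter kit.

```python
from typing import Any, Callable


class GuardedPredictor:
    """Loads the model at most once, even if setup is called repeatedly."""

    def __init__(self, load_model: Callable[[], Any]) -> None:
        # load_model is a hypothetical callable that builds the model,
        # for example one that moves the weights onto the GPU.
        self._load_model = load_model
        self._model: Any = None
        self._loaded = False
        self.setup_calls = 0  # how many times setup has been invoked

    def prediction_setup(self) -> None:
        self.setup_calls += 1
        if self._loaded:
            # A second load would allocate GPU memory again, so skip it.
            print(f"prediction_setup call #{self.setup_calls}: model already loaded, skipping")
            return
        self._model = self._load_model()
        self._loaded = True
```

Note that this only helps when both calls happen in the same Python process. If the evaluator starts two separate processes, each one loads its own copy and a guard like this has no effect.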

I passed all the stages for Task 2,

but I don’t see my result on the leaderboard. Is there an issue?

Here is the submission:

AIcrowd Submission Received #194023 - initial-19

submission_hash : 2cbf126dab5610c3c6b108cfb7e40028e59e5106.

Hi @wac81,

We do see this submission on the leaderboard of Task-2. Maybe you were checking the leaderboard of a different task?

Best,

Mohanty

Yeah, the one that shows up is my second submission, and I can’t see my first submission.

But it’s OK.

Hi @wac81, the leaderboard contains only the best submission from each participant (not all the submissions). I assume that may have caused the confusion.

You can see all your submissions here (they are merged for all the tasks in this challenge, but you can identify them using the relevant repository name):

https://www.aicrowd.com/challenges/esci-challenge-for-improving-product-search/submissions?my_submissions=true

Best,

Shivam

Hi, @shivam and @mohanty

My last two submissions failed and their debug logs are missing (i.e. the link to the log is not displayed on the page), although the debug logs for earlier submissions are available. Could you take a look?

submission_hash : bbf64209f663e4dac1dbddde443a9a7495a836f1 (caimanjing / task_1_query-product_ranking_code_starter_kit)

submission_hash : efe0e19058c344b2b29e76387fd51497233d89d7 (caimanjing / task_1_query-product_ranking_code_starter_kit)

I just made a submission, but for some reason it has the same code as the previous one, and the two submission hashes are identical. Could you please help me cancel the second submission with this hash? I don’t want it to use up one of my submission chances. The name is #194735 - 7.16.2.

submission_hash : 75fcd642d1b8659786cc66b7a77fa621d1fda1a0.

Hi @shivam and @mohanty, my submissions failed and again no logs were provided. All of them were stopped at 90 minutes.

69047cec6f7f9fc2fc18a265895418972c8ae86b

527acda520e4d5221a8aa6b18574b0a50c6430ed

f18d54966cd711a22032d32ab09923264e235832

Hi @dami,

We have re-run these submissions along with the fix for your issue.

From now on, they should either pass automatically or show the relevant logs in case of failure.

My submission issue has not been updated for a long time. Can you please take a look? Thank you.

submission_hash : 52602f246d1f15bc286ef4a49296df666d441560.

During the last day, I had some submissions waiting in the queue for hours.

Could you provide more machine resources to ensure that our submissions start running as soon as possible?

Best, xuange

Hi, @mohanty,

Two of our submissions were labeled as failed, but they passed both the public test and the private test (and are also displayed as “graded” on the submission page).

Did they actually fail, or were they successfully evaluated?

submission_hash : ca0b6377179a5db5c1dfc8dd643231c305129d5f

d490d3d132583404e03bc25fe6b05dde7d6d9e85

@K1-O : Those evaluations have indeed succeeded. The labels on the GitLab issue have also been corrected.

If the issue occurs again, please do not worry about it: if the statuses of your public_test_phase and private_test_phase are both marked as Success 🎉, then the submission has been correctly evaluated and is counted towards the final leaderboard.

Best of luck,

Mohanty

Our submissions failed with the following messages:

The node was low on resource: ephemeral-storage. Container wait was using 604Ki, which exceeds its request of 0.

The node was low on resource: ephemeral-storage. Container wait was using 572Ki, which exceeds its request of 0.

7f3992ae72fc997b3a557feaac818551eaad6d07

803f3b831f65cd03b8e0af7005ab780229a80004

@mohanty @shivam could u please help us fix this problem? Thx!

We also had a few submissions that were stopped at exactly 3 hours, during the environment initialization period. Here are some examples:

68d14a16c1c08e58357350a9a5655a83936b0c7c.

fe38c589a9d99d0e558e494ea56547f888b0c573

810111ac200182ad2d6255e1c6c03545b0a59589

@mohanty @shivam

Hi @mohanty,

In the past few days we have submitted many times, but almost all of the submissions were stopped during the environment setup period. We have tried creating a new repository and removing all old model files from the history, but that didn’t solve the problem: all submissions still stopped unexpectedly after THREE HOURS.

We are unable to fix this ourselves, since it is not caused by our core code. Could you please check our past issues?

Our latest repo: AIcrowd

@dami: Across all the submissions you mention, the image builder consistently runs out of memory because of the large number of models that you have included in your submission. The Docker image alone is already ~50GB halfway through the image build process, at which point the image builders run out of memory and, unfortunately, stop the evaluation!

We can discuss with the Amazon team whether these submissions could be evaluated separately after giving the image builders more storage, but I believe they would not be inclined to do so, as it might be unfair to other participants.
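To get a rough idea of how much the model files in a repository contribute before submitting, a sketch like the following can help. The function name `largest_files` is hypothetical, and skipping the `.git` directory is an assumption made here to avoid counting history. The result is only a lower bound on the image size, since the built image also contains the base image and installed dependencies.

```python
import os
from typing import List, Tuple


def largest_files(root: str, top_n: int = 10) -> Tuple[int, List[Tuple[int, str]]]:
    """Return the total size in bytes under root and the top_n largest files."""
    sizes: List[Tuple[int, str]] = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Assumption: ignore .git so repository history is not counted.
        dirnames[:] = [d for d in dirnames if d != ".git"]
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # do not follow or count symbolic links
            sizes.append((os.path.getsize(path), path))
    total = sum(size for size, _ in sizes)
    # Largest files first, so oversized model checkpoints stand out.
    return total, sorted(sizes, reverse=True)[:top_n]
```

Calling `largest_files(".")` from the repository root returns the total size and the largest files, which makes it easier to decide which model checkpoints to drop.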