Multilayer Model

1. Introduction

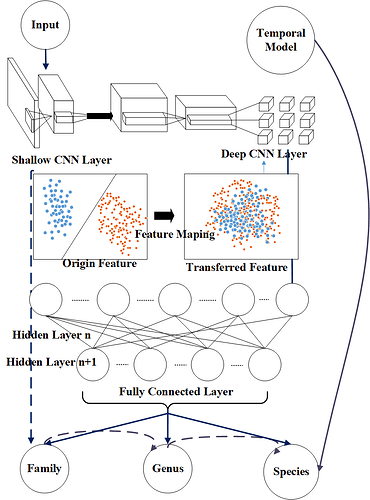

Biological classification becomes progressively finer, from family down to species. According to [1], a convolutional neural network (CNN) behaves similarly: it extracts coarse information in its shallow layers and more specific details in its deeper layers. I therefore propose a multilayer model that also uses the family and genus labels from the meta_data.

Here is the idea.

The shallow layers are first initialized by training them on coarse labels such as family and genus. These shallow CNN layers are then connected to deeper CNN layers that predict the target species. Because the feature spaces of family, genus, and species differ, I recommend using a transfer method that maps these features into similar distributions.
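The following is a minimal PyTorch sketch of this idea, not the original implementation. All names (`conv_block`, `MultilayerModel`, `multilevel_loss`), the layer sizes, the single-channel spectrogram input, and the use of auxiliary classification heads are assumptions. A 1x1 convolution followed by BatchNorm is a simple stand-in for the unspecified transfer method that maps genus-level features toward the distribution the deep layers expect.

```python
import torch
import torch.nn as nn


def conv_block(in_ch: int, out_ch: int) -> nn.Sequential:
    """Conv -> BatchNorm -> ReLU -> 2x spatial downsampling."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
        nn.MaxPool2d(2),
    )


class MultilayerModel(nn.Module):
    """Hypothetical sketch: shallow blocks supervised by coarse labels
    (family, genus), deeper blocks predicting the target species."""

    def __init__(self, n_family: int, n_genus: int, n_species: int):
        super().__init__()
        self.family_block = conv_block(1, 16)   # shallowest, coarsest features
        self.genus_block = conv_block(16, 32)   # intermediate features
        # Assumed "transfer" step: 1x1 conv + BatchNorm re-maps genus features
        # before they enter the deep, species-level layers.
        self.adapter = nn.Sequential(nn.Conv2d(32, 32, kernel_size=1), nn.BatchNorm2d(32))
        self.deep_block = nn.Sequential(conv_block(32, 64), conv_block(64, 128))
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.family_head = nn.Linear(16, n_family)
        self.genus_head = nn.Linear(32, n_genus)
        self.species_head = nn.Linear(128, n_species)

    def forward(self, x: torch.Tensor):
        f = self.family_block(x)
        g = self.genus_block(f)
        s = self.deep_block(self.adapter(g))
        return (
            self.family_head(self.pool(f).flatten(1)),
            self.genus_head(self.pool(g).flatten(1)),
            self.species_head(self.pool(s).flatten(1)),
        )


def multilevel_loss(outputs, family_y, genus_y, species_y, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the cross-entropy losses at each taxonomic level."""
    ce = nn.functional.cross_entropy
    fam, gen, spe = outputs
    return (weights[0] * ce(fam, family_y)
            + weights[1] * ce(gen, genus_y)
            + weights[2] * ce(spe, species_y))
```

To follow the staged scheme described above, one could train first with `weights=(1, 0, 0)` (family only), then `(0, 1, 0)` (genus), and finally `(0, 0, 1)` (species), optionally freezing the earlier blocks between stages.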

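In addition, we usually compute the spectrum with the STFT (short-time Fourier transform). Because the STFT uses a fixed window size, it cannot capture fine detail for both high-frequency and low-frequency information at the same time: a long window resolves frequency well but blurs short events in time, and a short window does the opposite. It may therefore be useful to encode the temporal (time-domain) data into the model as well. However, I have not done any work on this.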
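The trade-off can be made concrete with two numbers: an STFT with window length `n_fft` at sample rate `sr` has frequency bins `sr / n_fft` Hz apart, and each frame spans `n_fft / sr` seconds, so improving one resolution worsens the other. A small sketch; the function name, the 32 kHz sample rate, and the window sizes are assumptions chosen for illustration.

```python
def stft_resolution(sample_rate: int, n_fft: int) -> tuple[float, float]:
    """Return (frequency bin spacing in Hz, window duration in ms) of one STFT frame."""
    freq_res_hz = sample_rate / n_fft          # finer with longer windows
    window_ms = 1000.0 * n_fft / sample_rate   # coarser with longer windows
    return freq_res_hz, window_ms


sr = 32000  # assumed sample rate of the recordings
for n_fft in (256, 1024, 4096):
    df, dt = stft_resolution(sr, n_fft)
    print(f"n_fft={n_fft:5d}: {df:8.2f} Hz per bin, {dt:6.1f} ms per window")
```

At 32 kHz, `n_fft=256` gives 125 Hz bins from 8 ms windows, while `n_fft=4096` gives 7.8125 Hz bins from 128 ms windows; no single window size is fine in both time and frequency.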

2. Experiment

2.1 PyTorch: accelerating training by prefetching data

Reference: tianws.github

LIMITATION: CHECK YOUR GPU UTILIZATION FIRST

If your GPU utilization cycles periodically from low to high, data I/O is most likely what limits your training speed, and prefetching will help.
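GPU utilization can be watched with `nvidia-smi -l 1`. To confirm the I/O bottleneck more directly, one can also time how long each iteration waits for the next batch. Below is a minimal, framework-agnostic sketch; `profile_loop` is a hypothetical helper. Because CUDA kernels run asynchronously, a GPU `step` function should end with `torch.cuda.synchronize()`, otherwise its compute time is underestimated.

```python
import time
from typing import Callable, Iterable


def profile_loop(batches: Iterable, step: Callable[[object], None]) -> float:
    """Run `step` on every batch and return the fraction of wall time spent
    waiting for the next batch (as opposed to time spent inside `step`)."""
    wait = 0.0
    busy = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)          # time spent here is data loading
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)                   # time spent here is computation
        t2 = time.perf_counter()
        wait += t1 - t0
        busy += t2 - t1
    total = wait + busy
    return wait / total if total > 0 else 0.0
```

A value close to 1 means the loop mostly waits for data, which is the situation in which prefetching pays off.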

Requires: `prefetch_generator` (install with `pip install prefetch_generator`)

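```python
import torch
from torch.utils.data import DataLoader, Dataset
from prefetch_generator import BackgroundGenerator


# Use DataLoaderX in place of torch.utils.data.DataLoader.
# BackgroundGenerator iterates the loader in a background thread, which makes sure
# at least one additional batch is already loaded when the training loop asks for it.
class DataLoaderX(DataLoader):
    def __iter__(self):
        return BackgroundGenerator(super().__iter__())


# Define your own dataset. DataPrefetcher below expects each batch to be a dict
# of tensors, optionally with a non-tensor 'meta' entry.
class YourOwnDataset(Dataset):
    def __len__(self):
        ...

    def __getitem__(self, idx):
        ...


# Moves the next batch to the GPU while the current batch is being processed.
class DataPrefetcher:
    # To make forward propagation and data loading work in parallel,
    # the host-to-device copies MUST run on a separate CUDA stream.
    def __init__(self, loader, device='cuda'):
        self.loader = iter(loader)
        self.device = device
        self.stream = torch.cuda.Stream()
        self.preload()

    def preload(self):
        try:
            self.batch = next(self.loader)
        except StopIteration:
            # Once the full dataset has been loaded, next() returns None
            self.batch = None
            return
        with torch.cuda.stream(self.stream):
            for k in self.batch:
                if k != 'meta':  # 'meta' is not a tensor and stays on the CPU
                    # non_blocking copies only overlap with compute when pin_memory=True
                    self.batch[k] = self.batch[k].to(device=self.device, non_blocking=True)

    def next(self):
        # Wait until the copy of the prefetched batch has finished
        torch.cuda.current_stream().wait_stream(self.stream)
        batch = self.batch
        if batch is not None:
            for k in batch:
                if k != 'meta':
                    # Mark the tensor as used on the current stream so that its memory
                    # is not handed back to the side stream too early
                    batch[k].record_stream(torch.cuda.current_stream())
        self.preload()
        return batch


#---------------------------------------------------------------------------------------#

# Get your batches (batch size and worker count are example values)
loader = DataLoaderX(YourOwnDataset(), batch_size=32, shuffle=True,
                     num_workers=4, pin_memory=True)
prefetcher = DataPrefetcher(loader, device='cuda')
batch = prefetcher.next()
while batch is not None:
    ...  # forward and backward pass on batch
    batch = prefetcher.next()
```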
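`BackgroundGenerator` in `DataLoaderX` works by running the wrapped iterator in a separate thread that fills a bounded queue, so the consumer usually finds the next item ready. The standard-library sketch below illustrates that mechanism only; it is not the `prefetch_generator` source, `ThreadedPrefetcher` is a hypothetical name, and unlike a production implementation it does not forward exceptions raised in the background thread.

```python
import queue
import threading

_SENTINEL = object()  # marks the end of the source iterator


class ThreadedPrefetcher:
    """Iterate over `source` in a daemon thread, keeping up to
    `max_prefetch` items buffered ahead of the consumer."""

    def __init__(self, source, max_prefetch: int = 1):
        self._queue = queue.Queue(maxsize=max_prefetch)
        self._done = False
        self._thread = threading.Thread(target=self._fill, args=(iter(source),), daemon=True)
        self._thread.start()

    def _fill(self, it) -> None:
        for item in it:
            self._queue.put(item)  # blocks while the buffer is full
        self._queue.put(_SENTINEL)

    def __iter__(self):
        return self

    def __next__(self):
        if self._done:
            raise StopIteration
        item = self._queue.get()  # blocks only if the producer is behind
        if item is _SENTINEL:
            self._done = True
            raise StopIteration
        return item
```

Raising `max_prefetch` buffers more batches at the cost of host memory; a single buffered item is already enough to hide loading time when loading is faster than one training step.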

2.2 Model and Result

MobileNet [3] is used as the base model.

Here are my basic accuracy results. The experiment was finished in June; at that time I did not realize that accuracy alone gives less information than I assumed, so the results are incomplete. I do not plan to continue this project, because I chose a different topic for my master's degree and, most importantly, because I cannot finish this work alone.

| Dataset | Basic model | Shallow weights updated | Shallow weights fixed |
|---|---|---|---|
| Three genera | 42.2% | 43.4% | 39.2% |
| Ten genera | 31.2% | 32.9% | 33.1% |

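I tried two variants: updating the shallow-layer weights during training, and keeping them fixed. Updating the shallow weights improves accuracy on both datasets (by 1.2 points on three genera and 1.7 points on ten genera). Fixing them helps on ten genera (by 1.9 points) but lowers accuracy on three genera (by 3.0 points). All accuracies are still far from good.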
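The two variants differ only in whether the shallow layers receive gradient updates. A minimal sketch, assuming a toy `nn.Sequential` whose first child is the shallow part; in the real experiment the shallow part would be the early MobileNet layers loaded from the coarse-label model. `build_model` and `make_optimizer` are hypothetical names.

```python
import torch
import torch.nn as nn


def build_model(n_classes: int) -> nn.Sequential:
    # Hypothetical toy network: model[0] is the "shallow" part, model[1] the "deep" part.
    shallow = nn.Sequential(nn.Conv2d(1, 16, 3, padding=1), nn.ReLU(), nn.MaxPool2d(2))
    deep = nn.Sequential(
        nn.Conv2d(16, 32, 3, padding=1), nn.ReLU(),
        nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(32, n_classes),
    )
    return nn.Sequential(shallow, deep)


def make_optimizer(model: nn.Sequential, freeze_shallow: bool,
                   lr: float = 1e-3) -> torch.optim.Optimizer:
    # "Weight fixed": no gradients flow into the (pretrained) shallow layers.
    # "Weight updated": the shallow layers are fine-tuned together with the deep part.
    model[0].requires_grad_(not freeze_shallow)
    params = [p for p in model.parameters() if p.requires_grad]
    return torch.optim.Adam(params, lr=lr)
```

If the shallow part contains BatchNorm layers, also call `model[0].eval()` during training in the fixed variant: `requires_grad_(False)` freezes only the learnable weights, while BatchNorm running statistics keep changing in training mode.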

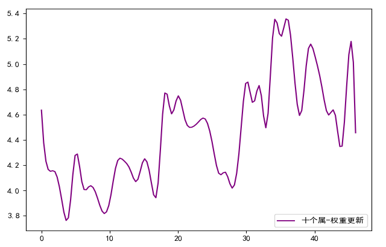

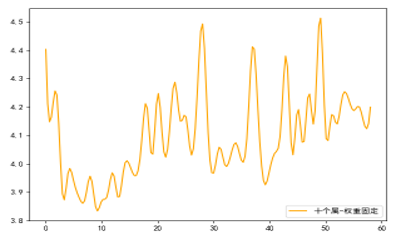

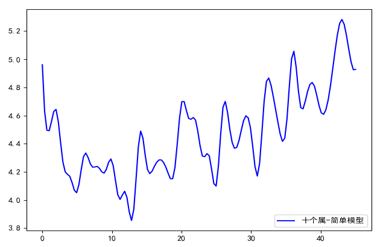

The figures show the training curves on the ten-genus bird dataset.

Fig: Cross-entropy training loss of the basic model (top), the weight-updated model (center), and the weight-fixed model (bottom). None of the models converges well (I do not know why), but judging from the peak values, fixing the shallow weights may act as a form of regularization.

3. Summary

There are certainly many bugs left to fix, but this was my undergraduate design project, and I hope it gives someone a better idea. Below is what I think should be done next.

Work

- Use a bigger dataset

- Fix the overfitting problem

- Use transfer learning to make the combination of the shallow and deep models better (more robust?).

Target

- Use the multilayer model to address the class imbalance problem (train on common species, predict rare species)

Reference

- [1] Zeiler M D, Fergus R. Visualizing and understanding convolutional networks[C]//European conference on computer vision. Springer, Cham, 2014: 818-833.

- [3] Howard A G, Zhu M, Chen B, et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications[J]. arXiv preprint arXiv:1704.04861, 2017.