change your username for “bordeleau_michael” …

@ashivani

I agree with @konstantin_nikolayev. I think it is better to evaluate the submissions by the more precise log loss score instead of the rounded one.

In this competition, improving the log loss by 0.000x (which would be ignored if scores were rounded to the 3rd decimal) requires some effort.

If scores are rounded, it is more likely that the difference between a better solution and the others comes down to when it was created rather than what it is.

Though a 0.000x improvement may not be so important for practical use, I think it is better to take the difference into consideration to make this competition fair.
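To make the rounding concern concrete, here is a minimal sketch with made-up labels and predictions (none of these numbers come from the competition). The two hypothetical submissions differ only slightly on one row, so their log loss differs in the fourth decimal place, and rounding to the 3rd decimal turns the gap into a tie.

```python
import math

def log_loss(y_true, probs):
    """Mean multiclass log loss; y_true holds class indices."""
    return sum(-math.log(row[label]) for label, row in zip(y_true, probs)) / len(y_true)

# Made-up labels and predictions, purely for illustration.
y_true = [0, 1, 2, 0]
model_a = [[0.70, 0.20, 0.10], [0.20, 0.60, 0.20], [0.10, 0.30, 0.60], [0.5000, 0.3000, 0.20]]
model_b = [[0.70, 0.20, 0.10], [0.20, 0.60, 0.20], [0.10, 0.30, 0.60], [0.5005, 0.2995, 0.20]]

score_a = log_loss(y_true, model_a)
score_b = log_loss(y_true, model_b)

print(f"full precision: {score_a:.6f} vs {score_b:.6f}")          # model_b is slightly lower (better)
print(f"rounded:        {round(score_a, 3)} vs {round(score_b, 3)}")  # identical after rounding
```

With a tie like this, the ranking would have to fall back on something other than the score, such as the submission time.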

@ashivani, one more thing from me.

Could you please confirm that probing the LB and using true answers in submissions is prohibited, and that such submissions will be disqualified from the final leaderboard?

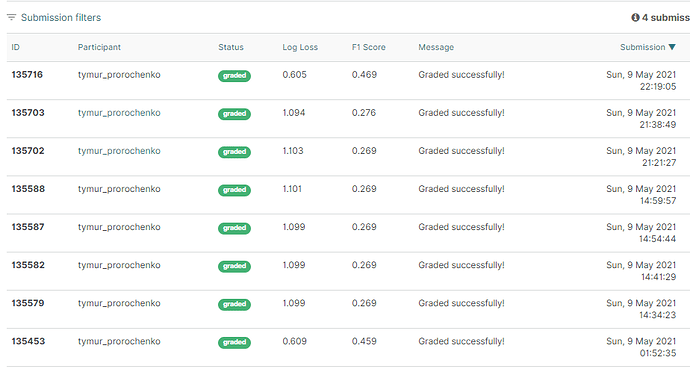

This question occurred to me because of this picture (see the screenshot).

This participant's behaviour looks like the situation I described above.

While in theory, you can probe the Leaderboard and seek the true answers, unless I’m missing something, this can’t replicate over the final leaderboard.

i.e:

Let’s say I find out the true answers for record 1 to 25 on the Public Leaderboard. It boosts my public leaderboard position.

But, there’s no way for me to tell, on the final evaluation, which record is which in order to feed the correct answer. No?

Also, I’d be careful before calling out other participants like this. I think there is legitimate trial and error that you can do. But if you are right, staff will notice by the structure of his code.

Anyways, just my opinion.

Trust your work, and keep in mind overfitters will fail the final leaderboard.

@michael_bordeleau The final test score will be computed on the complete test dataset, so if you probe the public LB, it will add little advantage.

Public test contributes 60% to final score - so the advantage could be sizable

Hi all,

The leaderboard has been updated and now reflects the condition in the rules. Regarding rounding the precision to the 3rd decimal place, we are in touch with the ADDI team and will post an update soon.

Note: The date mentioned in the leaderboard is of the last submission by the participant and not the submission which has the highest score.

Regards

Ayush

@siddharth_singh8

I’m aware that the public lb is a subset of the final lb.

What I’m saying is that I don’t see any way to actually take advantage of it for your final score. You seem to think so… How would you apply it?

Perhaps I’m not seeing it… Methods I can think of would break on the final evaluation.

We are monitoring the situation on the leaderboard and will take a decision on the rounding of log-loss scores and tie-breaks very soon.

@michael_bordeleau, what do you actually mean by final evaluation?

I guess that there will not be a rerun of submissions on the whole test set, because they are already run on the whole test set and you currently see your score on 60% of it. As indirect proof of this (that submissions are run on the whole test set and not only on part of it), look at my screenshot in the message above. The participant got the same score as the initial one, 1.0986122886681096, twice after that. Why? Probably because his probes landed on private rows two times (I’m definitely sure that it was probing).

So I don’t expect any rerun in the future, and the advantage from true answers (the result of probing) will remain on the final LB. Anyway, just my opinion.
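A rough sketch of why an unchanged score is suggestive. The score 1.0986122886681096 is numerically ln 3, which is what a uniform prediction over three classes would get; the three-class setup, the row counts, the labels, and the 60% public subset below are all my assumptions for illustration, not details confirmed by the organizers. If only one row of such a baseline is changed and the visible score does not move, that row was not part of the rows being scored publicly.

```python
import math

def log_loss(y_true, probs, rows):
    """Mean log loss over the given row indices only."""
    return sum(-math.log(probs[i][y_true[i]]) for i in rows) / len(rows)

n_rows, n_classes = 10, 3                  # hypothetical sizes
y_true = [0, 1, 2, 0, 1, 2, 0, 1, 2, 0]    # made-up labels
public_rows = list(range(6))               # hypothetical 60% public subset

# Baseline: the same uniform probability for every class on every row.
baseline = [[1 / n_classes] * n_classes for _ in range(n_rows)]

def probe(row, guess):
    """Copy of the baseline with a single row set to a confident guess."""
    probs = [r[:] for r in baseline]
    probs[row] = [0.98 if c == guess else 0.01 for c in range(n_classes)]
    return probs

base_score = log_loss(y_true, baseline, public_rows)          # approximately ln 3
private_probe = log_loss(y_true, probe(8, 2), public_rows)    # row 8 is private here
public_probe = log_loss(y_true, probe(2, 2), public_rows)     # row 2 is public here

print(base_score, math.log(3))
print(private_probe == base_score)   # public score unchanged by a private-row probe
print(public_probe < base_score)     # a correct guess on a public row lowers the score
```

This only illustrates the mechanism described in the post above; it says nothing about what any particular participant actually did.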

Ah! I understand you now!

I assumed our notebooks would be re-run on the final (complete) dataset.

You are saying that whenever we submit, our final score is also calculated behind the scenes, but then we are shown a sub-score, based on 60% of the rows.

If that’s true… then yeah. I see the issue now.

EDIT: also terrifying is that it hasn’t been addressed yet…

Can I submit constant predictions instead of ML model results? I think yes.

Can I submit different constant predictions for each row of the test dataset? I think yes. It is not prohibited by the rules.

Can I submit everything that I want in my 10 submissions per day? I think yes.

- Could you please clarify it?

- Could you please confirm that the final score will be calculated on 100% of the Test including this 60% that we can see on the leaderboard?

Hi All,

A few contestants have raised the issue of leaderboard probing, and ADDI wants to make its stance clear on this issue. Here is the official comment:

“Per Section 10 of the Challenge Rules, ADDI has determined that leaderboard probing undermines the legitimate operation of the Contest and is considered an unfair practice. Therefore, any Entrants engaging in leaderboard probing will be disqualified, in accordance with the Challenge Rules and at ADDI’s sole discretion.”

Hello everyone, please tag me if you are referring to my submissions; it is hard to keep track of the whole forum.

I want to raise a point: most ML competitions are very far from production-grade code and are focused on a single goal, which is to optimize the scoring metric (if the solutions overfit, do not generalize, etc., this is not a problem for participants as long as the goal is achieved). The host, for their part, gets all the interesting ideas that were generated during the competition.

If an organizer wants to prevent leakage and make the competition fairer, steps are taken in advance when designing the competition itself. You can’t just leave a private target somewhere and say “guys don’t use it, it’s against rules”.

So it is very strange to see the sentence “Entrants engaging in leaderboard probing will be disqualified” when most of the participants submitted “all 0, all 1 scores” to figure out the public class distribution. Leaderboard probing is a part of the competition: people fine-tune models, class balancing, and probability post-processing, and using the public score as feedback is itself probing. Could someone please give a robust definition of probing?

Why do we need scoring on 100% of the test data when it creates so many problems?

add: overall I believe that it would be great if clear rules are established (that are reasonable for participants, aicrowd, and the host), and we compete within these rules. As opposed to “everyone interprets rules as they want, the host decides if winners are eligible in the end”.

I find it very interesting that you are rationalizing your behavior and also blurring the line between something that, in my mind, is pretty obvious.

Personally, I see this narrow definition of probing: identifying the true label for specific rows of the test set and then adjusting one's predictions accordingly. This has zero merit in terms of replicability.

The competition is set to advance the science behind Alzheimer detection.

Rationalizing that the host will get all the interesting ideas from others is not an excuse for one to abuse the flaws of the competition. How can it make sense to award the top prize to a model that has no merit?

Also, blaming the organizers for having designed a competition with a flaw is astonishing.

Not everything is perfect right from the start.

Just see how much debugging has occurred since the launch.

AIcrowd staff is working hard, and readjusting things when needed.

Flaws are uncovered, tweaks are made, clarification statements are posted, fixes are applied.

The previous competition I took part in was continuously getting better as staff made changes throughout the competition, by listening to its participants.

Here, once again, the participants have raised a point, and action was taken.

You request further clarification, that’s fair. We will wait for the response as well.

Congrats @tymur_prorochenko on that significant jump to 0.597 on the public leaderboard.

Quite a leap!

Hopefully, this is a legit submission.

Hi All

Keeping all the queries and concerns raised by the participants in mind, we have concluded that only the private 40% of the test data, whose score is not visible on the leaderboard, will be used for the final ranking.

Thank you all for raising the concern and helping us improve.
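As a small illustration of what ranking on the hidden rows changes, here is a sketch with made-up data. The 10-row test set, the 60/40 mask, and both submissions are hypothetical: one submission is moderately good everywhere, the other is near-perfect on the public rows only and uninformed (uniform) on the private rows.

```python
import math

def log_loss(y_true, probs, rows):
    """Mean log loss over the given row indices only."""
    return sum(-math.log(probs[i][y_true[i]]) for i in rows) / len(rows)

y_true = [0, 1, 2, 0, 1, 2, 0, 1, 2, 0]    # made-up labels
is_public = [True] * 6 + [False] * 4       # hypothetical 60/40 split
public_rows = [i for i, pub in enumerate(is_public) if pub]
private_rows = [i for i, pub in enumerate(is_public) if not pub]

# Moderately good everywhere: 0.5 on the true class, 0.25 on each other class.
honest = [[0.5 if c == y else 0.25 for c in range(3)] for y in y_true]

# Near-perfect on public rows only, uniform on private rows.
overfit = [
    [0.98 if c == y else 0.01 for c in range(3)] if pub else [1 / 3] * 3
    for y, pub in zip(y_true, is_public)
]

for name, sub in [("honest", honest), ("overfit", overfit)]:
    print(name, log_loss(y_true, sub, public_rows), log_loss(y_true, sub, private_rows))
```

In this toy setup the second submission leads on the public rows but loses on the private rows, so a final ranking computed on the private rows alone would not reward it.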

Do not worry, I also root for an honest competition. I’ve sent my thoughts on how the current competition design may be cheated and how it could be improved. I believe that scoring on the private data only is a great step and solves some of the problems. Let’s see if other improvements will come as well.

Many thanks for your clarification. It makes sense to split the test data into a public set and a private set. However, I still have a few questions.

Is it legitimate to probe the LB to get more ground truth to train models? Could we exploit the leaderboard feedback to potentially improve the score, public and/or private?

Or does it still depend on ADDI to decide, after the competition, which LB probing actions are acceptable?

Cheers,

Hi,

Our stance on leaderboard probing is the same. We consider it an unfair practice, and it can lead to disqualification.