TL:DR: We have improved the way agent actions are resolved in Flatland, by fixing corner cases where trains had to leave an empty cell between each others. This new way to handle actions is the new standard, and will be used for Round 2.

Many of you are aware that Flatland agents cannot follow each other close behind, unless they are in agent index order, ie Agent 1 can follow Agent 0, but Agent 0 cannot follow Agent 1, unless it leaves a gap of one cell.

We have now provided an update which removes this restriction. It’s currently in the master branch of the Flatland repository. It means that agents (moving at the same speed) can now always follow each other without leaving a gap.

Why is this a big deal? Or even a deal?

Many of the OR solutions took advantage of it to send agents in the “correct” index order so that they could make better use of the available space, but we believe it’s harder for RL solutions to do the same.

Think of a chain of agents, in random order, moving in the same direction. For any adjacent pair of agents, there’s a 0.5 chance that it is in index order, ie index(A) < index(B) where A is in front of B. So roughly half the adjacent pairs will need to leave a gap and half won’t, and the chain of agents will typically be one-third empty space. By removing the restriction, we can keep the agents close together and so move up to 50% more agents through a junction or segment of rail in the same number of steps.

What difference does it make in practice?

We have run a few tests and it does seem to slightly increase the training performance of existing RL models.

Does the order not matter at all now?

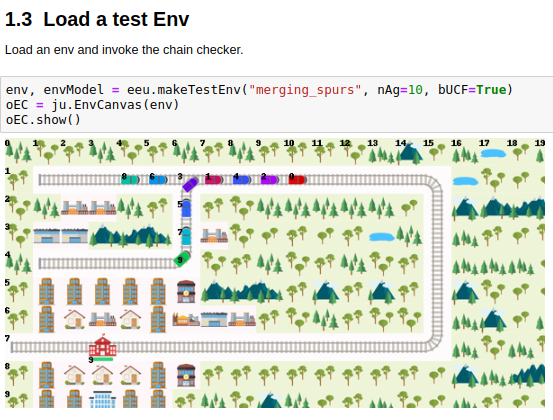

Well, yes, a bit. We are still using index order to resolve conflicts between two agents trying to move into the same spot, for example, head-on collisions, or agents “merging” at junctions.

This sounds boring. Is there anything interesting about it at all?

Thanks for reading this far… It was quite interesting to implement. Think of a chain of moving agents in reverse index order. The env.step() iterates them from the back of the chain (lowest index) to the front, so when it gets to the front agent, it’s already processed all the others. Now suppose the front agent has decided to stop, or is blocked. The env needs to propagate that back through the chain of agents, and none of them can in fact move. You can see how this might get a bit more complicated with “trees” of merging agents etc. And how do we identify a chain at all?

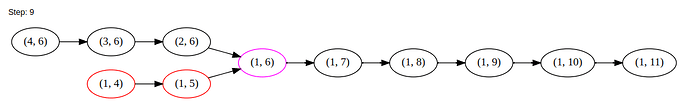

We did it by storing an agent’s position as a graph node, and a movement as a directed edge, using the NetworkX graph library. We create an empty graph for each step, and add the agents into the graph in order, using their (row, column) location for the node. Stationary agents get a self-loop. Agents in an adjacent chain naturally get “connected up”. We then use some NetworkX algorithms:

-

weakly_connected_componentsto find the chains. -

selfloop_edgesto find the stopped agents -

dfs_postorder_nodesto traverse a chain -

simple_cyclesto find agents colliding head-on

We can also display a NetworkX graph very simply, but neatly, using GraphViz (see below).

Does it run faster / slower?

It seems to make almost no difference to the speed.

How do you handle agents entering the env / spawning?

For an agent in state READY_TO_DEPART we use a dummy cell of (-1, agent_id) . This means that if several agents try to start in the same step, the agent with the lowest index will get to start first.

Thanks to @hagrid67 for implementing this improved movement handling!

)

)