@shravankoninti Can you please send the issue link? Or just tag me in the issue.

https://gitlab.aicrowd.com/shravankoninti/dsai-challenge-solution/issues/47

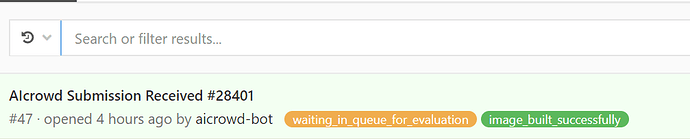

AIcrowd Submission Received #28401

https://gitlab.aicrowd.com/shravankoninti/dsai-challenge-solution/issues/46

AIcrowd Submission Received #28354

Please reply me asap.

Hi @shravankoninti,

The pipeline was blocked due to failed submissions which didn’t terminate with non-zero exit code. We have cleared the pipeline and adding a fix now so it don’t happen again.

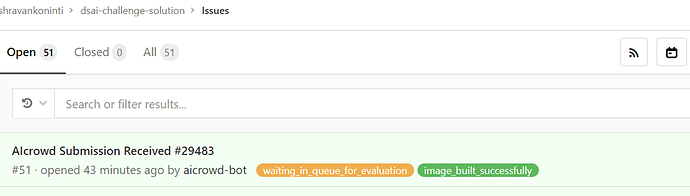

There are ~12+(6 running) submissions in queue due to stuck pipeline and getting cleared right now. Your submission will be evaluated shortly. https://www.aicrowd.com/challenges/novartis-dsai-challenge/submissions

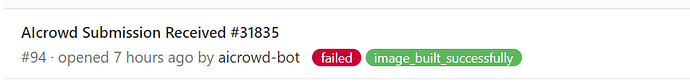

Can you please check why this error is coming.

i have done close to 80 submissions - did not get this type of error of row_ids not matching.

Did it happen becuase you changed the test data path and test file? Please let me know

as of now I have test rows as 6947 . Am I correct with this? Let me know why this error has come asap.

Hi @shravankoninti,

Yes this condition is added with newer version of evaluator that is using the updated splitting announced here. I am looking into this and will keep you updated here.

@shivam Thanks very much.

Question:

Will be we able to submit predictions during holidays time? I meant from today to Jan 10th? Does Aridhia provides workspace?

Yes, I think workspaces will be available to you. Please go through the announcement made by Nick here. UPDATE / EXTENSION: DSAI Challenge: Leaderboard & Presentation deadlines

Hi @shravankoninti,

This issue is resolved now, and your above submission have latest feedback i.e. newer dataset. Meanwhile other submissions by you and other participants are still in queue and being re-evaluated right now.

We are getting the same error.Any updates on this?

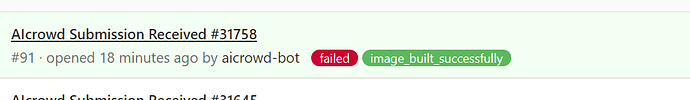

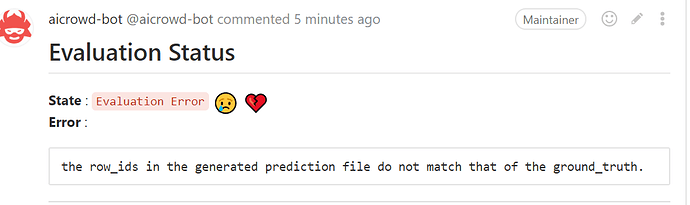

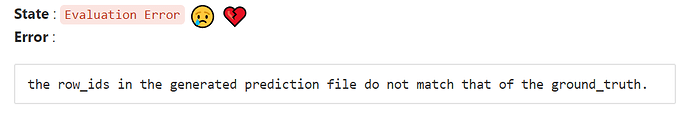

Hi everyone, please make sure that your submissions are creating prediction file with correct row_id. The row_id was not being match strictly till the previous evaluator version and we have added assert for the same now. Due to which the submissions have failed with the row_ids in the generated prediction file do not match that of the ground_truth.

Your solution need to output row_id as shared in the test data and not hardcoded / sequential (0,1,2…). Also note, that row_id can be different on data present on evaluations v/s workspace, to make sure people aren’t hardcoding from that file.

We are trying to apply automatic patch wherever possible, but it need to be ultimately fixed in solutions submitted.

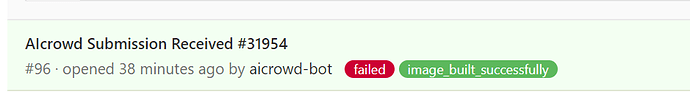

I submitted again now. It is throwing the same error. I think you need to recheck from your side only on how you are testing out.

From my side I have questions on test data - please answer these.

- Total number of rows = 6,947 ??

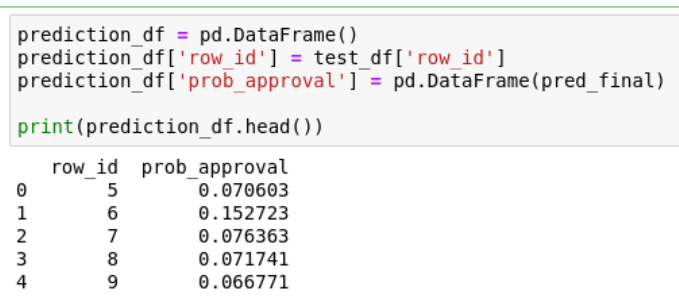

- The column headers are a) ‘row_id’ and b) ‘prob_approval’

- The test file path is correct?

- Is my final prediction output code is correct??

-

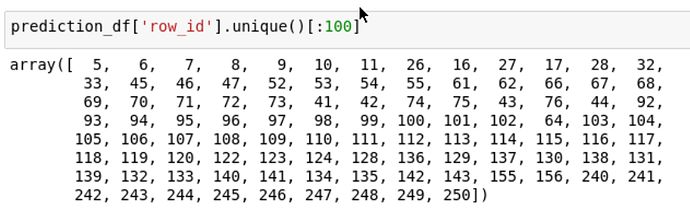

Please check above row_id numbers if they are consistent with your file.

-

Please see top 100 row ids from my prediction output

If everything is fine from above. Then my output file is correct. You need to check from your side.

This is really frustrating to see failed submission error. Please troubleshoot and let me know if any issues from my side. Please note that I have already done ~90 submissions - never I got these errors.

Regards

Shravan

Hi Shravan,

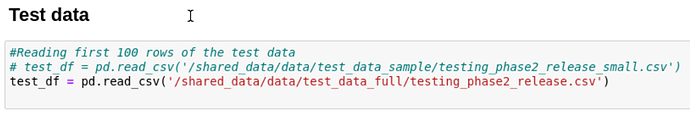

It looks like you have uploaded your prediction file i.e. lgbm.csv and directly dumping to the output path. We want your prediction model to run on the server itself and not prediction files to be submitted as solution. Due to which your submission is failed.

The row_id i.e. 5,6,7 in test data provided to you can be anything say 1000123, 1001010, 100001 (and in random order) in test data present on the server going forward, so we know predictions are being carried out during evaluation.