Hey, could you please help with submission number 112392? I'm getting the error “failed with exit code 1” and I'm unsure how to diagnose the issue from that message alone.

Hi,

I am encountering the same issue and my submission ID is 112398. Please help.

Thanks!

Hey there

I thought I had solved it by following these instructions from the sticky post:

# 3. Using additional libraries in R Colab

I struggled to load additional libraries correctly. In the end I worked out a solution by looking at the zip-file code submission method and the Python Colab notebook.

So, R users who want to use additional libraries such as data.table and xgboost should, as per the instructions, add `install.packages("data.table")` to the install_packages function. The unclear part is where to put the calls that load the libraries.

I found that I had to add them at the beginning of the fit_model function. For good measure I also added them to predict_expected_claim and preprocess_X_data, which I suspect is unnecessary but does no harm. I'll come back and update this once I've confirmed.

[EDIT: I tried putting the library calls in the fit_model function only, but that caused submission errors. So until we get guidance from the admins, I'm sticking with adding them to all the key functions as above.]

However, as of submission 112407 I'm getting the error again, and I'm unsure which function it comes from (the Colab notebook runs fine).

Edit: could the admins please share the logs for submission 112407 with me? I can then try to diagnose the issue and post here if it turns out to be something general.

Hi @nopenope

(great username)

I've looked through your submission, and I believe you've been using a version of the notebook from early in week 1 (when the package import issue was unclear). Can you please make sure you are using the updated version of the notebook, available at the same link? It introduces the global_imports function, which I believe will resolve the confusion.

With that version, you only need to fill in that function with all the library calls you need anywhere in the notebook, and we will make those calls whenever your model is run, including for your preprocessing and everything else.

Having said that, I can see that your submission treats vh_make_model as a factor without any other preprocessing. This will cause two issues:

- You might time out, as this column has thousands of categories, effectively creating a very, very large dataset in memory.

- You will run into an error because the leaderboard data will have a different number of columns than your training data (i.e. it contains new car models that you haven't seen before).

So you should have a way to handle new or previously unseen categories of cars in your training and preprocessing.
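A minimal sketch of one common way to do this, written in Python rather than R: learn the set of levels at training time, collapse rare and unseen values into a single "other" level, and build a fixed set of columns from that list, so the leaderboard matrix always has the same shape as the training one. The class name CategoryEncoder and the min_count threshold are hypothetical illustrations, not part of the competition template; in R the same idea applies to the factor levels you fix at training time.

```python
from collections import Counter
from typing import Iterable, List


class CategoryEncoder:
    """Learn a fixed set of levels for one categorical column (e.g. vh_make_model).

    Values that are rare in training, or that never appeared at all (such as a
    car model seen only in the leaderboard data), map to a single `other` level.
    """

    def __init__(self, min_count: int = 50, other: str = "other") -> None:
        self.min_count = min_count  # hypothetical threshold; tune it for your data
        self.other = other
        self.levels: List[str] = []

    def fit(self, values: Iterable[str]) -> "CategoryEncoder":
        counts = Counter(values)
        # Keep only categories with enough rows to estimate an effect reliably.
        self.levels = sorted(
            v for v, c in counts.items() if c >= self.min_count and v != self.other
        )
        return self

    def transform(self, values: Iterable[str]) -> List[str]:
        known = set(self.levels)
        # Anything not kept at fit time collapses to the shared `other` level.
        return [v if v in known else self.other for v in values]

    def one_hot(self, values: Iterable[str]) -> List[List[int]]:
        # Columns are fixed by the training levels plus `other`, so training and
        # leaderboard matrices always have the same width and column order.
        columns = self.levels + [self.other]
        position = {c: i for i, c in enumerate(columns)}
        rows = []
        for v in self.transform(values):
            row = [0] * len(columns)
            row[position[v]] = 1
            rows.append(row)
        return rows


if __name__ == "__main__":
    enc = CategoryEncoder(min_count=2).fit(["aaa", "aaa", "bbb", "bbb", "ccc"])
    print(enc.levels)                            # ['aaa', 'bbb']
    print(enc.transform(["aaa", "ccc", "zzz"]))  # ['aaa', 'other', 'other']
    print(enc.one_hot(["bbb", "zzz"]))           # [[0, 1, 0], [0, 0, 1]]
```

Capping the number of levels this way also addresses the memory concern, since the encoded width is bounded by how many categories pass the threshold rather than by the raw cardinality of the column.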

Lastly, there also seems to be a package installation issue, which leads to the error you are seeing. I am looking into fixing that one globally and will update this thread once it's done.

Hi @alfarzan

Thanks for the response! I have updated the notebook with global_imports but am still facing the same issue (submission 112536). I'll wait for your update.

Agreed on vh_make_model, though it wasn't part of the model.

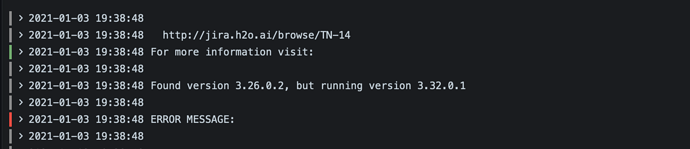

Perfect, I’ve looked a bit deeper into the traceback and I think the issue is the package version with theh2o package.

Specifically this is part of the traceback from a previous submission of yours.

In essence, your model was saved with an older version of h2o that is incompatible with the more recent version we have installed. When specifying the package installation, can you pin h2o to a version compatible with the one your model was saved with? I believe that should solve the issue.
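The h2o package in this thread is the R one, but the idea carries over to any language: record the package versions you trained with, store them next to the model, and check them before loading, so a mismatch fails with a readable message instead of a bare exit code 1. A minimal Python sketch under that assumption; record_versions and check_versions are hypothetical helper names, and the version strings in the demo are made up for illustration.

```python
from importlib import metadata
from typing import Callable, Dict, Iterable


def record_versions(packages: Iterable[str]) -> Dict[str, str]:
    """Capture installed versions at training time, to save alongside the model."""
    return {name: metadata.version(name) for name in packages}


def check_versions(
    expected: Dict[str, str],
    lookup: Callable[[str], str] = metadata.version,
) -> None:
    """Raise with a readable message if the runtime versions differ from training."""
    mismatches = []
    for name, wanted in expected.items():
        try:
            found = lookup(name)
        except metadata.PackageNotFoundError:
            found = "not installed"
        if found != wanted:
            mismatches.append(f"{name}: saved with {wanted}, found {found}")
    if mismatches:
        raise RuntimeError(
            "Package version mismatch (pin these versions in your install step): "
            + "; ".join(mismatches)
        )


if __name__ == "__main__":
    saved = {"h2o": "3.30.0.1"}  # hypothetical version recorded at training time
    try:
        # Simulate a newer runtime version instead of querying the real environment.
        check_versions(saved, lookup=lambda name: "3.32.0.1")
    except RuntimeError as err:
        print(err)
```

The `lookup` parameter exists only so the check can be exercised without the package installed; in a real submission you would call `check_versions(saved)` and let it query the installed packages.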

In the meantime, we will find a way to give participants clearer tracebacks.

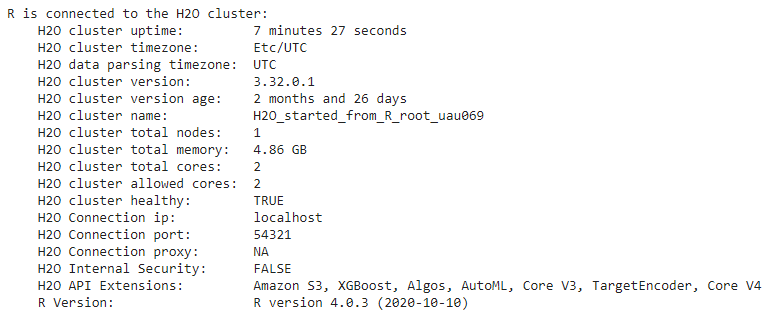

Thanks for checking on this Ali. Is this the trackback on submission 112536? Below is the screenshot from the colab where the version matches yours

Hi @nopenope

Just letting you know we're still investigating the exit code 1 errors and will have a better solution for them shortly.

Stay tuned

Thanks @alfarzan, much appreciated. Substituting other libraries would take a while, so I'd rather wait for your fix. Do you think I'll be able to make week 3 submissions (even if time is limited)?

Yes, I think you should be able to, but I suggest also making a slightly weaker submission in case the fix isn't ready in time.

Hi @alfarzan,

First, thank you for organizing all of this, super cool!

I have the same issue with submission 113458, while 113419 is still evaluating...

Thank you for the support,

Lorentzo

Hello @lorentzo

Submission logs are now available on the submission page. Hopefully this helps identify the problem.

Hi @nopenope

Logs should now be available for your submissions if you visit the submission link. Have a look and see if you can figure out the issue; if not, we will look into it.

(If a log isn't available yet, it is still being generated.)

@jyotish super useful feature, thanks!

I just looked at the error, and I have the following:

```
Traceback (most recent call last):
  File "predict.py", line 33, in <module>
    import model
  File "/home/aicrowd/model.py", line 9, in <module>
    import xgboost as xgb
  File "/usr/local/lib/python3.8/site-packages/xgboost/__init__.py", line 9, in <module>
    from .core import DMatrix, DeviceQuantileDMatrix, Booster
  File "/usr/local/lib/python3.8/site-packages/xgboost/core.py", line 173, in <module>
    _LIB = _load_lib()
  File "/usr/local/lib/python3.8/site-packages/xgboost/core.py", line 156, in _load_lib
    raise XGBoostError(
xgboost.core.XGBoostError: XGBoost Library (libxgboost.so) could not be loaded.
Likely causes:
  - OpenMP runtime is not installed (vcomp140.dll or libgomp-1.dll for Windows, libomp.dylib for Mac OSX, libgomp.so for Linux and other UNIX-like OSes). Mac OSX users: Run `brew install libomp` to install OpenMP runtime.
  - You are running 32-bit Python on a 64-bit OS
Error message(s): ['libgomp.so.1: cannot open shared object file: No such file or directory']
```

It seems I can't use xgboost because OpenMP is not installed, even though the package installation itself is flawless:

```
Collecting xgboost==1.2.0
  Downloading xgboost-1.2.0-py3-none-manylinux2010_x86_64.whl (148.9 MB)
Installing collected packages: certifi, joblib, numpy, six, python-dateutil, pytz, pandas, scipy, threadpoolctl, scikit-learn, xgboost
Successfully installed certifi-2020.6.20 joblib-0.16.0 numpy-1.17.4 pandas-1.1.2 python-dateutil-2.8.1 pytz-2020.1 scikit-learn-0.23.2 scipy-1.5.2 six-1.15.0 threadpoolctl-2.1.0 xgboost-1.2.0
```

Do you have any hints?

Thank you,

Lorenzo

It is a great feature

@lorentzo I believe you can install it in the Docker image yourself by adding `libomp-dev` to an `apt.txt` file and including that file in your zip submission (@jyotish, please correct me if I'm wrong).

We will look at installing it globally as well.

For more on how to install apt packages see this thread.
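The key line in the traceback above is the last one: pip installed the xgboost wheel without complaint, but the dynamic loader could not find the shared library libgomp.so.1 (GCC's OpenMP runtime), which the wheel expects the operating system to provide. Assuming a Debian/Ubuntu-based image, that file normally comes from the libgomp1 package. A small standard-library check with ctypes can confirm whether the runtime is loadable before xgboost is imported; openmp_runtime_available is a hypothetical helper, not part of xgboost or the competition template.

```python
import ctypes
import ctypes.util


def openmp_runtime_available() -> bool:
    """Return True if GCC's OpenMP runtime (libgomp) can be loaded in this process."""
    candidates = []
    # find_library searches the system's standard library locations and returns
    # a loadable name such as "libgomp.so.1", or None if nothing is found.
    found = ctypes.util.find_library("gomp")
    if found:
        candidates.append(found)
    candidates.append("libgomp.so.1")  # the exact file named in the traceback
    for name in candidates:
        try:
            ctypes.CDLL(name)
            return True
        except OSError:
            continue
    return False


if __name__ == "__main__":
    if openmp_runtime_available():
        print("libgomp loads: a missing OpenMP runtime is not what breaks xgboost here")
    else:
        print("libgomp is missing: install the OpenMP runtime in the image")
```

Running this in the same environment as predict.py (for example, as a quick step before `import xgboost`) makes it easy to tell whether an apt.txt change actually provided the runtime.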