Dear participants,

Thank you for your efforts in participating in the competition! While monitoring recent submissions and participant inquiries, we noticed several issues worth pointing out, so that you can avoid unnecessary errors.

-

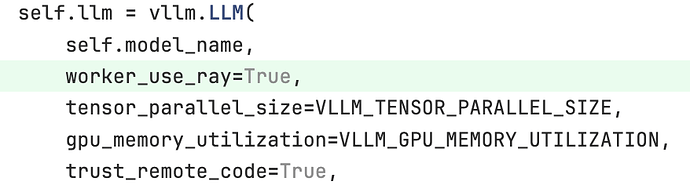

Use Ray backend for vLLM. If you use

mp, you may encounter unwanted errors. You can specify the backend like this.

- Clean up your git repo. Some evaluations time out simply because the repo is too large, often because unneeded model checkpoints were never removed. Our current time limit for the validation stage is 40 minutes, which covers downloading your repo, preparing the GPU server (~10 minutes), and running model inference (which should be fast). If you submit a repo of ~100GB and the download speed is 100MB/s, the download alone takes about 1,000 seconds, or roughly 17 minutes. Together with server preparation, that already uses about 27 of the 40 minutes, so any additional older checkpoints can easily cause a timeout.
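For the Ray backend point above, here is a minimal sketch in Python. It assumes a vLLM version whose engine arguments include `distributed_executor_backend` (accepting `"ray"` or `"mp"`). The helper names `build_llm_kwargs` and `load_model`, the model path, and the `tensor_parallel_size` value are hypothetical placeholders for illustration, not taken from the competition materials.

```python
from typing import Any, Dict


def build_llm_kwargs(model_path: str, tensor_parallel_size: int = 1) -> Dict[str, Any]:
    """Collect constructor arguments for vllm.LLM (hypothetical helper)."""
    return {
        "model": model_path,
        # Number of GPUs to shard the model across (placeholder value).
        "tensor_parallel_size": tensor_parallel_size,
        # Request Ray instead of "mp" (multiprocessing) for distributed execution.
        # Assumes a vLLM version that accepts this engine argument.
        "distributed_executor_backend": "ray",
    }


def load_model(model_path: str, tensor_parallel_size: int = 1):
    """Create the vLLM engine with the Ray executor backend (hypothetical helper)."""
    import vllm  # imported lazily so build_llm_kwargs works without vLLM installed

    return vllm.LLM(**build_llm_kwargs(model_path, tensor_parallel_size))
```

Keeping the arguments in a plain dictionary makes the backend choice easy to check without a GPU; `load_model("./models/my-model", tensor_parallel_size=4)` would then create the engine, assuming vLLM and Ray are installed.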

For cleaning up the repo and getting rid of unwanted model checkpoints, this post may be helpful.
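Before submitting, you can check the timing estimate from the second point against your own repo. The sketch below is a hypothetical helper, not part of the evaluation pipeline: it sums the file sizes in the working tree (skipping `.git`), converts the total into download minutes at an assumed 100MB/s, subtracts the ~10 minutes of server preparation from the 40-minute limit, and lists the largest files, which are often leftover checkpoints. It only measures the working tree. Checkpoints deleted in earlier commits may still be stored in the repository history, and this check does not see them.

```python
import os
import sys
from typing import List, Tuple

TIME_LIMIT_MIN = 40.0     # validation-stage limit mentioned in this post
SETUP_MIN = 10.0          # approximate GPU server preparation time
ASSUMED_MB_PER_S = 100.0  # assumed download speed, as in the example above


def download_minutes(size_bytes: int, mb_per_s: float = ASSUMED_MB_PER_S) -> float:
    """Estimated download time in minutes (1 MB = 10**6 bytes)."""
    return size_bytes / (mb_per_s * 1e6) / 60.0


def time_budget_left(size_bytes: int, mb_per_s: float = ASSUMED_MB_PER_S) -> float:
    """Minutes left for inference after setup and download; negative means a timeout."""
    return TIME_LIMIT_MIN - SETUP_MIN - download_minutes(size_bytes, mb_per_s)


def file_sizes(repo_dir: str) -> List[Tuple[int, str]]:
    """(size_in_bytes, path) for every regular file in the working tree, largest first."""
    sizes: List[Tuple[int, str]] = []
    for root, dirs, files in os.walk(repo_dir):
        dirs[:] = [d for d in dirs if d != ".git"]  # do not descend into git internals
        for name in files:
            path = os.path.join(root, name)
            if os.path.isfile(path) and not os.path.islink(path):
                sizes.append((os.path.getsize(path), path))
    sizes.sort(reverse=True)
    return sizes


if __name__ == "__main__":
    repo = sys.argv[1] if len(sys.argv) > 1 else "."
    sizes = file_sizes(repo)
    total = sum(size for size, _ in sizes)
    print(f"working tree: {total / 1e9:.1f} GB, "
          f"~{download_minutes(total):.1f} min to download, "
          f"~{time_budget_left(total):.1f} min left of {TIME_LIMIT_MIN:.0f}")
    for size, path in sizes[:10]:  # the ten largest files
        print(f"{size / 1e9:8.2f} GB  {path}")
```

For example, running the script with your repo path as its argument prints the totals followed by the ten largest files. For a 100GB tree, `time_budget_left` returns about 13.3 minutes.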

We are also considering a slight increase to the submission quota later on. Stay tuned!

Best,

Amazon KDD Cup 2024 Organizers.