The purpose of this thread is to gather questions about the details of the Flatland environment (RailEnv). Ask here if you have any doubts about what happens at intersections, how exactly malfunctions occur, etc.

Hi, if an agent is going to malfunction in the next step and I give it an action now, will it carry out that action or not?

I mean, does the upcoming malfunction affect the current action? In other words, does the environment induce the malfunction before the agent obeys the action I give it, or after? Logically, I believe the agent should act on its current malfunction state (before that state changes), but I get an error in my tests and can't find any other explanation.

Which action changes the status of an agent from READY_TO_DEPART to ACTIVE? Another thing: is the agent automatically removed from the scene when it arrives at its destination, or must I take some action?

Maybe I could find the answers by digging through the libraries, but honestly I am not that good at Python (and it's easier if someone who knows can tell us the answers).

Hello @MasterScrat, I've recently set up the environment and I'm working with the manual rail generator. Is there a way to manually fix the sources and destinations of the agents? Or can we at least find out the starting and ending positions of the agents from the (manually) generated environment? Many thanks.

Hi Zain

In RailEnv.step, Flatland checks whether there is a malfunction for the given agent. If so, the agent cannot move. See the comment in rail_env.py:

# Induce malfunction before we do a step, thus a broken agent can't move in this step

self._break_agent(agent)

When an agent is not yet active (READY_TO_DEPART) and the action applied to it is MOVE_LEFT, MOVE_RIGHT or MOVE_FORWARD, it becomes active and is placed at its initial position, but only if that cell is empty.
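To make the two rules above concrete, here is a minimal toy sketch in plain Python. It is not Flatland code: `ToyAgent`, `try_depart` and `step_active` are hypothetical names, statuses are plain strings, and the way the malfunction counter is decremented is an assumption made only for illustration. It also does not model how a malfunction interacts with an agent that has not departed yet.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple

Cell = Tuple[int, int]
MOVE_ACTIONS = {"MOVE_LEFT", "MOVE_FORWARD", "MOVE_RIGHT"}


@dataclass
class ToyAgent:
    """Hypothetical stand-in for a Flatland agent (not the real class)."""
    initial_position: Cell
    status: str = "READY_TO_DEPART"
    position: Optional[Cell] = None  # None means "outside the grid"
    malfunction: int = 0             # remaining steps the agent is broken


def try_depart(agent: ToyAgent, action: str, occupied: Set[Cell]) -> bool:
    """Activate a waiting agent on a MOVE_* action if its start cell is free."""
    if agent.status != "READY_TO_DEPART":
        return False
    if action in MOVE_ACTIONS and agent.initial_position not in occupied:
        agent.status = "ACTIVE"
        agent.position = agent.initial_position
        return True
    return False


def step_active(agent: ToyAgent, next_cell: Cell,
                breaks_now: bool, duration: int) -> bool:
    """Induce the malfunction first, then move only if the agent is not broken."""
    if breaks_now and agent.malfunction == 0:
        agent.malfunction = duration
    if agent.malfunction > 0:
        agent.malfunction -= 1  # broken: the action for this step is ignored
        return False
    agent.position = next_cell
    return True
```

In this sketch, an action given in the same step in which the malfunction starts is ignored, which matches the ordering described by the comment in rail_env.py.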

Adrian

Hi

I don't know exactly what the manual generator is doing, but if you put an agent into the environment you just need to set the agent's status to READY_TO_DEPART and its position to None. The agent is then located outside of the environment. You therefore also have to set initial_position to the entry position, i.e. the cell where the agent will enter the world.

The ending position is the agent's target. Whether the agent is automatically removed there is configurable. In the challenge setup it is removed automatically: RailEnv(…, remove_agents_at_target = True) asks the environment to remove agents at their target, which happens as soon as the agent reaches it.
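As a rough illustration of what the flag changes, here is a small plain-Python sketch. It does not call Flatland: `on_arrival` is a hypothetical helper, and the status names are written as strings on the assumption that a removed agent ends up with status DONE_REMOVED and no position, while an agent that is not removed stays on its target cell with status DONE.

```python
from typing import Optional, Tuple

Cell = Tuple[int, int]


def on_arrival(position: Cell, target: Cell,
               remove_agents_at_target: bool = True
               ) -> Tuple[str, Optional[Cell]]:
    """Return the (status, position) pair of an agent after a move."""
    if position != target:
        # Not there yet: nothing changes.
        return "ACTIVE", position
    if remove_agents_at_target:
        # The agent leaves the grid, so its cell becomes free for others.
        return "DONE_REMOVED", None
    # The agent stays on its target cell and keeps occupying it.
    return "DONE", position
```

With removal enabled, no extra action is needed from the controller once the agent reaches its target.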

Hello!

I noticed that the environment has a remove_agents_at_target parameter that defaults to True. Can we assume that this will always be True during evaluation?

Hi,

I'm confused about the specification of the evaluation environment given here: https://flatland.aicrowd.com/getting-started/environment-configurations.html. In particular, does malfunction_duration = [20,50] specify the min/max of the malfunction duration? That seems to contradict the values for min_malfunction_interval in the table below. And what is the malfunction_rate?

Could you please clarify? Thanks.

Hey @shining_spring,

Indeed, malfunction_duration = [20,50] specifies the min/max of the malfunction_duration. This value is the same for all Round 1 environments.

min_malfunction_interval is the minimal interval between malfunctions.

The malfunction_rate is the inverse of the malfunction interval, so the malfunction rate will be at most 1.0 / min_malfunction_interval.
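The relation can be written down in a couple of lines. This is only the arithmetic from the sentence above; `max_malfunction_rate` is a hypothetical helper name, and the interval of 250 used in the usage note is a made-up value, not one taken from the configuration table.

```python
def max_malfunction_rate(min_malfunction_interval: int) -> float:
    """Upper bound on the malfunction rate for a given minimal interval."""
    if min_malfunction_interval <= 0:
        raise ValueError("min_malfunction_interval must be positive")
    return 1.0 / min_malfunction_interval
```

For example, with a hypothetical min_malfunction_interval of 250 steps, the rate is at most 1.0 / 250 = 0.004 malfunctions per step.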

Hi, I’m trying to parallelise the RL training by running multiple episodes simultaneously. Do you know if other people have successfully tried this out? Something is going wrong for me - the environment does not seem to be creating independent instances of itself. My threads appear to be writing to each other’s memory locations.

That’s weird! How are you parallelising it? We use dozens of environments in parallel in the RLlib baselines: https://flatland.aicrowd.com/research/baselines.html

Thanks for the prompt response! It turned out to be a joblib version issue. I had an older version installed, which was mismanaging the object references.

Dear @MasterScrat, do the test_envs files contain malfunctions and agents with different speeds? I use rail_from_file, schedule_from_file and malfunction_from_file to load the grid map, schedule and malfunctions, then pass them to RailEnv(). Somehow the malfunction value I see for the agents is always 0 and their speed is always 1. But during navigation, I can see that some trains with status ACTIVE don't require an action (malfunction == 0, speed == 1.0). Do you know what could be the issue? Is there anything wrong with what I have done?

Hey @beibei,

> do the test_envs files contain malfunctions and agents with different speeds

Yes. Although, in this challenge, all the trains have a speed of 1.0.

> I use rail_from_file, schedule_from_file and malfunction_from_file to load the grid map, schedule and malfunction then pass them to get the RailEnv().

Sounds good, you shouldn’t need anything else.

> But during navigation, I can see some train with status ACTIVE doesn't require action (malfunction == 0, speed == 1.0). Do you know what could be the issue?

Can you render some frames in such situations to see what may be happening?

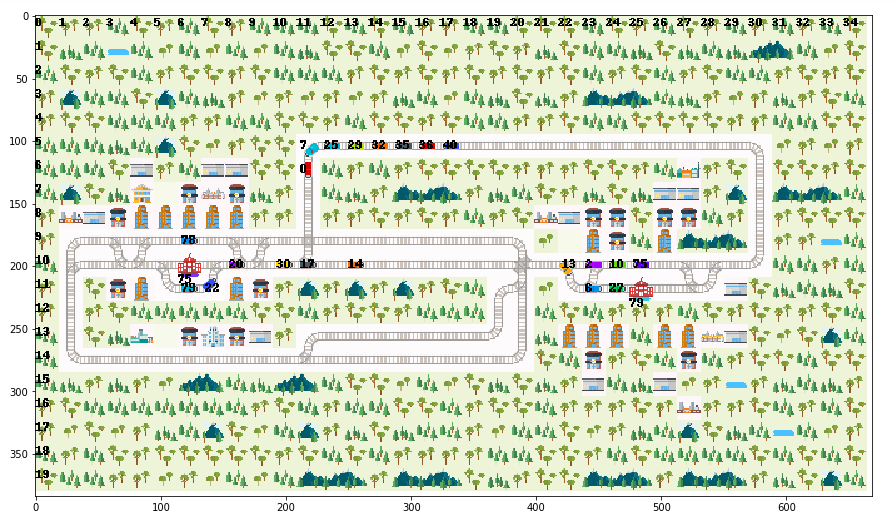

Hi @MasterScrat, I am not sure if the picture helps, since I could not find a better way to describe my question.

The situation I found is very interesting to me. In the picture:

Agent 2 in (10, 23) wants to go to (11, 23). Agent 6 in (11, 23) wants to go to (11, 24). I made the mistake of planning for agent 6 to go forward first and then for agent 2 to turn left in the same timestep/episode. Since the Flatland env takes agent 2's action first, of course agent 2 cannot turn left. So after this timestep/episode, agent 2 stays in (10, 23), but the info I get from env.step() shows that for agent 2 malfunction is 0 and action required is False.

Is this a special setting in the Flatland environment?

@beibei remember the default rendering displays the agents one-step behind. This is because it needs to know both the current and next cell to get the angle of the agent correct. (Or maybe you’ve switched to a different “AgentRenderVariant”, I don’t know)

With unordered close following (UCF) the index of the agents shouldn’t matter unless two agents are both trying to enter the same cell, in which case the lower index agent wins.

So ignoring the other agents (27?) if:

- 6 is trying to move (11,23)->(11,24) and

- 2 is trying to move (10,23)->(11,23)

…then that looks like a UCF situation; it should work out that 2 is vacating the cell which 6 is entering, and I think it should work.
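The reasoning above can be sketched as a small plain-Python resolver. This is a toy model, not Flatland's implementation: `resolve_moves` is a hypothetical function, it ignores rail transitions, speeds and malfunctions, and it simply forbids two agents from swapping cells. It only encodes the rules stated in this thread: one agent per cell, the lower index wins a contested cell, and an agent may enter a cell that is being vacated in the same step.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]


def resolve_moves(current: Dict[int, Cell],
                  wanted: Dict[int, Cell]) -> Dict[int, Cell]:
    """Return each agent's cell after one step of the toy model."""
    moving = {i for i in current if wanted[i] != current[i]}

    # Two agents cannot swap cells head-on: both stay where they are.
    agent_at = {cell: i for i, cell in current.items()}
    for i in sorted(moving):
        j = agent_at.get(wanted[i])
        if j is not None and j in moving and wanted[j] == current[i]:
            moving.discard(i)
            moving.discard(j)

    # If several agents want the same cell, the lowest index wins.
    claimed: Dict[Cell, int] = {}
    for i in sorted(moving):
        claimed.setdefault(wanted[i], i)
    moving = set(claimed.values())

    # An agent is blocked if its wanted cell stays occupied; repeat until
    # stable, because blocking one agent can block the agents behind it.
    changed = True
    while changed:
        changed = False
        staying = {current[i] for i in current if i not in moving}
        for i in sorted(moving):
            if wanted[i] in staying:
                moving.discard(i)
                changed = True

    return {i: (wanted[i] if i in moving else current[i]) for i in current}
```

Applied to the situation in the picture, `resolve_moves({2: (10, 23), 6: (11, 23)}, {2: (11, 23), 6: (11, 24)})` lets both agents advance in this toy model, regardless of their indices, because agent 6 vacates the cell agent 2 wants to enter.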

OTOH it’s possible that 2’s action to turn left came too late. I think 2 should say “MOVE_LEFT” (action 1) when it’s in (10,24), just before it moves into (10,23).

Maybe you’ve resolved this one already

Hi @MasterScrat,

I have a small question regarding cells which allow more than 2 transitions. If, for example, a cell has N-N, S-S, E-E and W-W transitions, will agents coming from W and N be able to pass through the cell simultaneously? Or does it work like a switch, so that no more than one agent can be in the cell at any point in time?

A cell can never contain more than one agent!

Great, thank you for the response!

I am very sorry for the inconvenience, but there is one more thing that is not totally clear. If an agent tries to move into a cell that is occupied by another agent which does not move for any reason, nothing will happen, right? I mean, there are no "collisions" in the environment that break an agent or anything like that, and the agent will be able to move as soon as the cell in front becomes free again?