@radhika_chhabra Can you check my comment on the GitLab issue page of your latest submission?

Hi @dipam,

I looked through my code for any source of randomness. However, when I run it locally multiple times, I get similar runtimes (they differ by maybe 100 to 200 ms, which is acceptable).
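To put numbers on the local run-to-run spread, a minimal Python sketch like the one below can help. It times a callable over several repeats and reports the mean, standard deviation, minimum and maximum wall-clock time. `run_inference` is a hypothetical placeholder for whatever entry point your submission actually uses.

```python
import statistics
import time
from typing import Callable, Dict


def measure_runtime(fn: Callable[[], None], repeats: int = 5) -> Dict[str, float]:
    """Run `fn` `repeats` times and summarise the wall-clock runtimes in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()  # high-resolution timer intended for benchmarking
        fn()
        timings.append(time.perf_counter() - start)
    return {
        "mean": statistics.mean(timings),
        # stdev needs at least two samples; report 0.0 for a single run
        "stdev": statistics.stdev(timings) if len(timings) > 1 else 0.0,
        "min": min(timings),
        "max": max(timings),
    }


def run_inference() -> None:
    # Hypothetical stand-in for the real pipeline; replace with your own call.
    sum(i * i for i in range(200_000))


if __name__ == "__main__":
    stats = measure_runtime(run_inference, repeats=5)
    print({name: round(value, 3) for name, value in stats.items()})
```

Comparing these local numbers with the validation-step times reported for each submission shows how much of the variation only appears on the evaluation side.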

What I observe is that the variation increases when I submit my solution, at least in the product validation step.

For example:

210756: validation step takes 9.2s

210897: validation step takes 7.8s

Are the experiments run on a Kubernetes cluster? If so, maybe the resources allocated to each pod/node are not the same, i.e. one pod/node gets better hardware than another (see the Stack Overflow question “heterogeneous kubernetes cluster, container performance difference”). In that case, I guess a hard 10-minute runtime limit might be unfair across experiments.
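One way to check whether submissions land on different hardware is to log some host details at the start of each run and compare them across submission logs. Below is a minimal Python sketch, assuming a Linux container where `/proc/cpuinfo` is readable. Note that CPU quotas set through Kubernetes resource limits are not reflected in `os.cpu_count()` or `os.sched_getaffinity`, so this shows only part of the picture.

```python
import os
import platform
from typing import Dict, Optional


def cpu_model_name(cpuinfo_path: str = "/proc/cpuinfo") -> Optional[str]:
    """Return the first 'model name' entry from a Linux cpuinfo file, or None."""
    try:
        with open(cpuinfo_path, encoding="utf-8") as fh:
            for line in fh:
                if line.startswith("model name"):
                    return line.split(":", 1)[1].strip()
    except OSError:
        pass  # file missing or unreadable (e.g. not on Linux)
    return None


def hardware_summary() -> Dict[str, object]:
    """Collect basic host details worth printing at the start of a run."""
    # CPUs this process may be scheduled on (Linux only); otherwise the host total.
    usable = (
        len(os.sched_getaffinity(0)) if hasattr(os, "sched_getaffinity") else os.cpu_count()
    )
    return {
        "machine": platform.machine(),
        "cpu_model": cpu_model_name(),
        "cpu_count": os.cpu_count(),
        "usable_cpus": usable,
        "python": platform.python_version(),
    }


if __name__ == "__main__":
    print(hardware_summary())
```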

Here are some examples that might help with debugging. They use exactly the same models, trained with different training configurations or taken from different epochs of the same model.

Example 1:

Passed: 210329, 210321, 209969, 209617

Failed: 210701, 210710

Example 2:

Passed: 209881

Failed: 210897

How long did the passed examples take? Is there a noticeable runtime difference? For the failed examples, what percentage of the test dataset was processed?

Thank you for your time.

Hi @hca97,

Thank you for the insightful and extensive testing.

Indeed, the experiments run on Kubernetes; however, we explicitly provision on-demand AWS g4dn.xlarge instances (for the GPU nodes) for all submissions. I understand that this may allow for variation in the CPU or memory that AWS attaches to the nodes. However, this is the only way we can run the competition.

As for the time limit, the Machine Can See organizers have explicitly asked for the 10-minute limit, as they want the models to be fast. So participants need to track the time spent in their code, or make sure the code leaves enough buffer for the potentially slower runs to complete.
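A minimal sketch of that kind of time tracking in Python follows. It assumes a 600-second limit with a self-chosen safety margin, and it assumes you have some cheap `fallback` prediction (for example an empty or default result) that the evaluator will still accept. `TimeBudget`, `predict` and `fallback` are hypothetical names, not part of any AIcrowd API.

```python
import time
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


class TimeBudget:
    """Tracks elapsed wall-clock time against a fixed budget in seconds."""

    def __init__(self, total_seconds: float, safety_margin: float = 60.0) -> None:
        # monotonic() is unaffected by system clock changes
        self.deadline = time.monotonic() + total_seconds - safety_margin

    def remaining(self) -> float:
        return self.deadline - time.monotonic()

    def exceeded(self) -> bool:
        return self.remaining() <= 0


def predict_within_budget(
    items: Iterable[T],
    predict: Callable[[T], R],
    fallback: Callable[[T], R],
    budget: TimeBudget,
) -> List[R]:
    """Run `predict` on each item; once the budget is used up, switch to `fallback`."""
    results: List[R] = []
    for item in items:
        if budget.exceeded():
            results.append(fallback(item))  # cheap answer so the run still finishes
        else:
            results.append(predict(item))
    return results
```

For example, `TimeBudget(total_seconds=600, safety_margin=90)` stops calling the expensive model about 8.5 minutes after the object is created. Time spent before that point (such as model loading) is not counted, so it makes sense to create the budget as early as possible.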

Hi @dipam. Could you send me the errors for this submission?

211540

Also, of my last 4 failed submissions, 3 failed because of the AIcrowd platform:

- 2x Build-Env failed, even though nothing was changed in the dependencies (retrying the next day succeeded)

- 1x Product Matching validation time-out, although the logs show it had not even started

I’m not sure whether AIcrowd changed something or whether these were just errors coming from the cloud provider.

I’ve added the error to the GitLab comments.

About the failed builds with no changes to dependencies: the only time we have seen this is when the repository contains so many models that the Docker build gets killed. I’m not sure whether that is also the case here; I’ll check further. If it is indeed the cause, unfortunately for now you’ll have to reduce the overall repository size somehow.

For the one with time-out but it didn’t start, can you give me the submission id?

Hi,

If the Git repo is too large, maybe you could ignore the .git folder when building the Docker image.
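A common way to keep `.git` out of a Docker build context is a `.dockerignore` file containing the line `.git`; whether the AIcrowd build pipeline honors a `.dockerignore` is an assumption here, not something confirmed in this thread. To see how much of a repository is Git history versus model files, a small Python sketch like this one can help (`repo_size_report` is a hypothetical helper):

```python
import os
from pathlib import Path
from typing import List, Tuple


def repo_size_report(root: str, top_n: int = 10) -> Tuple[int, int, List[Tuple[int, str]]]:
    """Return (bytes inside .git, bytes outside .git, largest files outside .git)."""
    root_path = Path(root)
    git_bytes = 0
    other_bytes = 0
    files: List[Tuple[int, str]] = []
    for dirpath, _dirnames, filenames in os.walk(root_path):
        rel = Path(dirpath).relative_to(root_path)
        in_git = bool(rel.parts) and rel.parts[0] == ".git"  # anything under ./.git
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink():
                continue  # skip links so targets are not double-counted
            size = path.stat().st_size
            if in_git:
                git_bytes += size
            else:
                other_bytes += size
                files.append((size, str(path.relative_to(root_path))))
    files.sort(reverse=True)  # biggest files first
    return git_bytes, other_bytes, files[:top_n]


if __name__ == "__main__":
    git_b, other_b, top = repo_size_report(".")
    print(f".git: {git_b / 1e6:.1f} MB, everything else: {other_b / 1e6:.1f} MB")
    for size, rel_path in top:
        print(f"{size / 1e6:8.1f} MB  {rel_path}")
```

If `.git` dominates, excluding it from the build context would help; if the model files dominate, the repository itself has to shrink.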

Here is the list of submissions with weird errors:

- 211521 (env failed)

- 211522 (env failed)

- 211387 (Product Matching validation time-out, but I don’t see logs for loading the model)

Also, could you check what happened here?

- 211640 (I think the error is on my side)

@dipam, can you please let me know what went wrong with submission 211733? It says inference failed, but there’s nothing in the logs. Thanks!

@dipam Could you provide more info about submissions #212367 and #212368, please?

Both are Product Matching: Inference failed

@dipam, could you please check

#212567

#212566

They failed at the “Build Packages And Env” step, although I only changed NN parameters.

This seems very strange to me…

@dipam Could you check submission: 213161?

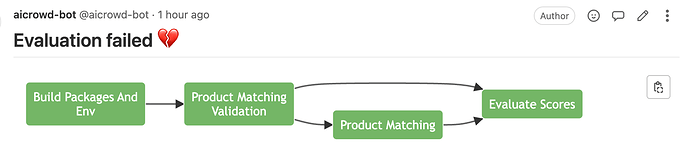

The diagram above shows that everything worked fine, but the status is “failed”.