We have filled out the form but have not received a confirmation email. Could you please confirm that it has been received? @yilun_jin

Hi @yilun_jin, could you please help notify aicrowd folks to reevaluate these issues?

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/178

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/189

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/179

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/197

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/198

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/205

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/200

https://gitlab.aicrowd.com/pp/amazon-kdd-cup-2024/-/issues/195

Also, please forgive me for asking again: is it OK not to fill in the form, since the top-2 submissions by performance will be retested automatically?

[quote=“boren, post:25, topic:10686”]

Hi @yilun_jin, could you please help notify aicrowd folks to reevaluate these issues?

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/420

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/418

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/416

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/415

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/386

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/385

https://gitlab.aicrowd.com/boren/amazon-kdd-cup-2024-starter-kit/-/issues/390

@yilun_jin Thank you for your excellent organization. I have a question: could a team be notified of which submissions are selected in the final testing stage?

Hi @yilun_jin

- When will you publish the final leaderboard? We will write a paper if our team is ranked in the top 5 of a track.

- What happens if more than one team has the same rank? (If several teams tie for 5th, are they all eligible for a paper or not?)

I will have to ask aicrowd folks about this. They managed the sheets.

- We aim for Wednesday (i.e. July 17th).

- As the scores are continuous, we do not expect exact ties. However, since the top-5 teams in different tracks overlap a lot, we think there may be some margin to accommodate very close teams.

Thank you for your reply. I’m looking forward to seeing the final results!

Ok, thank you for your reply!

Hi @yilun_jin, thanks a lot for organizing this amazing competition. We really appreciate this opportunity and the wonderful journey you have provided. However, we have a concern regarding the reruns in the final evaluation.

On the discussion page “Note for our final evaluation”, we noticed that the reruns may cause unfairness.

First, all teams experienced submission failures before the end, and network issues were the same for all teams. Participants should consider the impact of network issues in advance because the competition has already provided ample time for participants to experiment to mitigate the impact of network issues.

Second, most of the teams followed the regulations and filled in the IDs for final scoring before the deadline, which means their score was fixed. In contrast, the teams requesting reruns did not submit their IDs, giving them ample room for improvement.

Based on this, we hope the organizers will carefully consider the rerun measure and inform all participants through an official announcement. If reruns are allowed, all participating teams should be notified that they can rerun failed submissions and be given additional time to reorganize their sheets. If reruns are not allowed, we suggest clarifying the current situation in discussions and all reruns after 10th July, 2024 23:55 UTC should not be considered for the final evaluation.

I am unsure how you arrived at this perspective. Firstly, the organizers have already apologized for the network issue, which was not the fault of the participants but rather an issue with AIcrowd. Secondly, the purpose of the competition is to select the best solution. Since a submission was successfully made before the deadline, it meets the criterion that “All submissions that are made before July 10th, 23:55 UTC are valid submissions that can be considered in the final re-evaluation, regardless of when they finish, and when you finish your Google sheet.” So, why not re-evaluate? LOL. Is it unfair just because you gave up this right and others used it? Moreover, I have not seen a widespread demand for re-evaluation, and from what I’ve observed, the majority of re-evaluation requests have been ignored by AIcrowd (only two of my 8 re-evaluation requests were re-run). Can I also appeal that this might cause unfairness? After all, the aim of the competition is to select the best performance within the specified time, not the luckiest. I just want to get the results as soon as possible, so please don’t complicate things.

Hi @pp1 @Eden_Zixuan_Wang @jin_yi_yao,

I will have to contact aicrowd folks for a complete answer, but I am able to provide the following information.

- We very much apologize for the occasional network issue, which we failed to completely solve throughout the competition.

- The re-runs were not performed by participants. Instead, they were done on the side of aicrowd, and what they did was simply resubmit a previous submission (no new stuff). So the claim of ‘giving them ample room for improvement’ is not valid: one can neither make new submissions nor ‘improve’ beyond the deadline.

- We ignored most of the re-run requests made after the deadline, considering that keeping the occasional issue as-is would be the fairest way. Even where submissions were re-run, aicrowd folks did so after July 13th, which is well after the submission selection deadline.

- We only consider sample selection forms that are submitted before July 12th. Beyond that, we will default to top-2 solutions sampled at that time, meaning that re-runs would have a minimal impact on the selection of submissions.

- We cannot satisfy your request that “all reruns after 10th July, 2024 23:55 UTC should not be considered for the final evaluation”. The reason is that teams have the right to select all submissions that are made before July 10th 23:55 UTC, regardless of whether they succeeded or failed, or whether they are re-run.

Based on the above considerations, we will keep the final evaluation process as is. That is, we will consider either the selected submissions or the top-2 submissions at the Google Form deadline.

Hope that helps.

Could you please confirm that our Google Form arrived successfully? We didn’t receive any email confirmation, but we are sure that we sent it on Friday, before the deadline. Thanks so much.

Thanks for your detailed information.

We did receive it. Sorry about the delay (slightly busy with other stuff recently).

No problem, thanks for the reply, it is understandable.

Thank you for your detailed explanation. We fully understand the difficulties in hosting such an international competition, and sincerely hope the competition will continue to improve going forward.

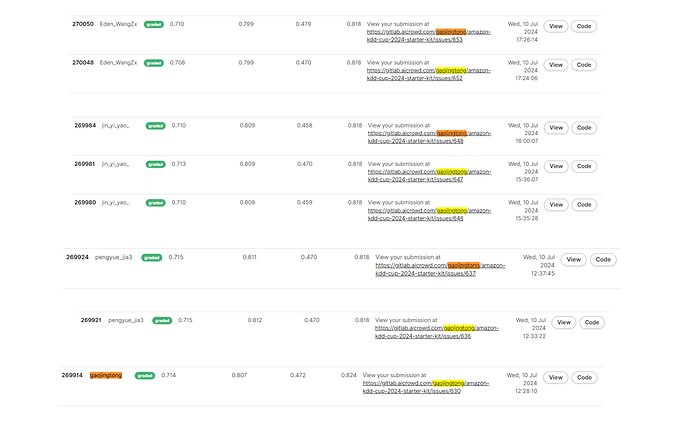

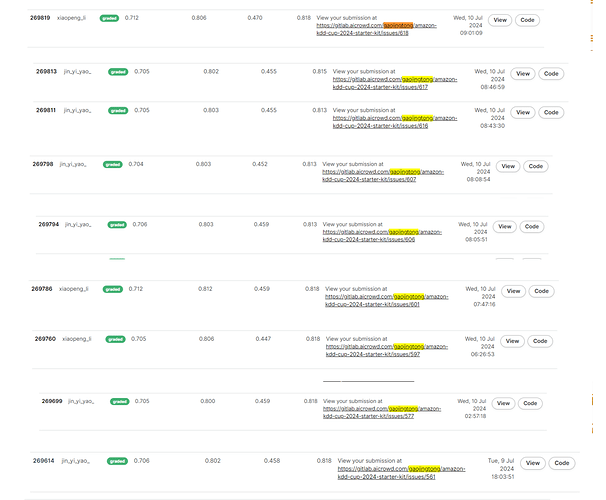

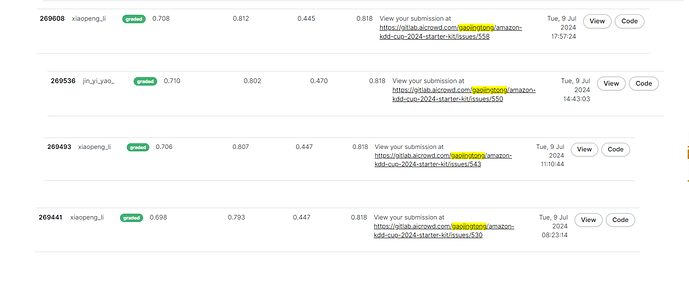

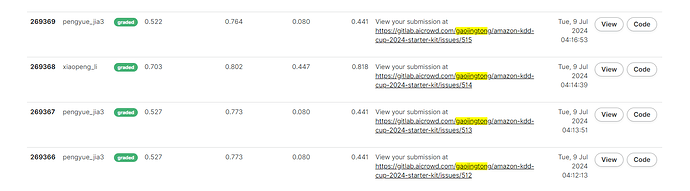

I find it amusing that the AML team complains about the rules when they have uploaded more than 20 predictions in the last week on track 4, as the submissions control only works per user and not per team.

8 valid submissions in track 4.

9 valid submissions in track 4 (earliest date: 9 Jul)

4 valid submissions in track 4 (earliest date: 9 Jul)

3 more valid submissions on track 4

And so on.

I haven’t continued because I don’t care; I know that in the end there were enough submissions to try what we wanted, which I appreciate. But I find it curious that this team is making noise when they themselves have uploaded over 20 submissions PER TEAM in the last week.