I was running the Colab notebook for generating word vectors for the NLP feature engineering task when I got this error log: https://aicrowd-evaluation-logs.s3.us-west-002.backblazeb2.com/logs/desiml/blitz-9/feature-engineering/17724c17-2a58-4083-84d9-1c3c9766a802.log

It’s unclear to me what the issue is in this case. Any help would be very much appreciated. Thanks!

Our DevOps team is looking into the issue and will let you know about the updates ASAP. Sorry for the inconvenience.

Shubhamai

The error was due to your submission file being big enough to cause a memory error in our evaluation systems. We have increased the memory of our systems so if you now try to re-submit your submission, it should give the score without any issues!

Thank you so much for looking into this and the fix. Trying out now.

This time the validation step ran fine and it stopped during predictions on test data. https://www.aicrowd.com/challenges/ai-blitz-9/problems/nlp-feature-engineering/submissions/146420

As I understand it, though, if it worked on validation, the same process should also work on the test data, right?

We are looking into this issue as we speak. I will update you ASAP.

I tried a smaller model and this still fails in the Generate Predictions On Test Data phase. The logs do not point to any particular code failure.

I am getting a timeout error because a cell exceeded 30 seconds of execution:

Scrubbing API keys from the notebook...

Collecting notebook...

Validating the submission...

Executing install.ipynb...

[NbConvertApp] Converting notebook /content/submission/install.ipynb to notebook

[NbConvertApp] Executing notebook with kernel: python3

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] ERROR | unhandled iopub msg: colab_request

[NbConvertApp] Writing 59619 bytes to /content/submission/install.nbconvert.ipynb

Executing predict.ipynb...

[NbConvertApp] Converting notebook /content/submission/predict.ipynb to notebook

[NbConvertApp] Executing notebook with kernel: python3

[NbConvertApp] ERROR | Timeout waiting for execute reply (30s).

Traceback (most recent call last):

File "/usr/local/bin/jupyter-nbconvert", line 8, in <module>

sys.exit(main())

File "/usr/local/lib/python2.7/dist-packages/jupyter_core/application.py", line 267, in launch_instance

return super(JupyterApp, cls).launch_instance(argv=argv, **kwargs)

File "/usr/local/lib/python2.7/dist-packages/traitlets/config/application.py", line 658, in launch_instance

app.start()

File "/usr/local/lib/python2.7/dist-packages/nbconvert/nbconvertapp.py", line 338, in start

self.convert_notebooks()

File "/usr/local/lib/python2.7/dist-packages/nbconvert/nbconvertapp.py", line 508, in convert_notebooks

self.convert_single_notebook(notebook_filename)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/nbconvertapp.py", line 479, in convert_single_notebook

output, resources = self.export_single_notebook(notebook_filename, resources, input_buffer=input_buffer)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/nbconvertapp.py", line 408, in export_single_notebook

output, resources = self.exporter.from_filename(notebook_filename, resources=resources)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/exporters/exporter.py", line 179, in from_filename

return self.from_file(f, resources=resources, **kw)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/exporters/exporter.py", line 197, in from_file

return self.from_notebook_node(nbformat.read(file_stream, as_version=4), resources=resources, **kw)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/exporters/notebook.py", line 32, in from_notebook_node

nb_copy, resources = super(NotebookExporter, self).from_notebook_node(nb, resources, **kw)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/exporters/exporter.py", line 139, in from_notebook_node

nb_copy, resources = self._preprocess(nb_copy, resources)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/exporters/exporter.py", line 316, in _preprocess

nbc, resc = preprocessor(nbc, resc)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/preprocessors/base.py", line 47, in __call__

return self.preprocess(nb, resources)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/preprocessors/execute.py", line 381, in preprocess

nb, resources = super(ExecutePreprocessor, self).preprocess(nb, resources)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/preprocessors/base.py", line 69, in preprocess

nb.cells[index], resources = self.preprocess_cell(cell, resources, index)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/preprocessors/execute.py", line 414, in preprocess_cell

reply, outputs = self.run_cell(cell, cell_index)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/preprocessors/execute.py", line 491, in run_cell

exec_reply = self._wait_for_reply(parent_msg_id, cell)

File "/usr/local/lib/python2.7/dist-packages/nbconvert/preprocessors/execute.py", line 483, in _wait_for_reply

raise TimeoutError("Cell execution timed out")

RuntimeError: Cell execution timed out

Local Evaluation Error Error: predict.ipynb failed to execute

The timeout error occurs even with a dummy submission that outputs a fixed value for all rows right after reading the data :-/

How much time does it take to generate the predictions for 10 samples?
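One quick way to answer this is to time the prediction call on a handful of rows. The snippet below is only a sketch: `dummy_predict` is a hypothetical stand-in for whatever function your notebook uses to turn one text into a feature vector, and the sample texts are made up.

```python
import time

def time_predictions(predict_fn, samples):
    """Run predict_fn on every sample and return (predictions, elapsed_seconds)."""
    start = time.perf_counter()
    predictions = [predict_fn(sample) for sample in samples]
    elapsed = time.perf_counter() - start
    return predictions, elapsed

# Hypothetical stand-in for the real model call; replace with your own function.
def dummy_predict(text):
    return [float(len(text))]

samples = ["some example text"] * 10  # made-up inputs, 10 samples as asked above
preds, elapsed = time_predictions(dummy_predict, samples)
print(f"{len(preds)} predictions in {elapsed:.3f}s")
```

If 10 samples already take several seconds, a single cell that processes the whole test set is likely to run past the 30-second limit shown in the log.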

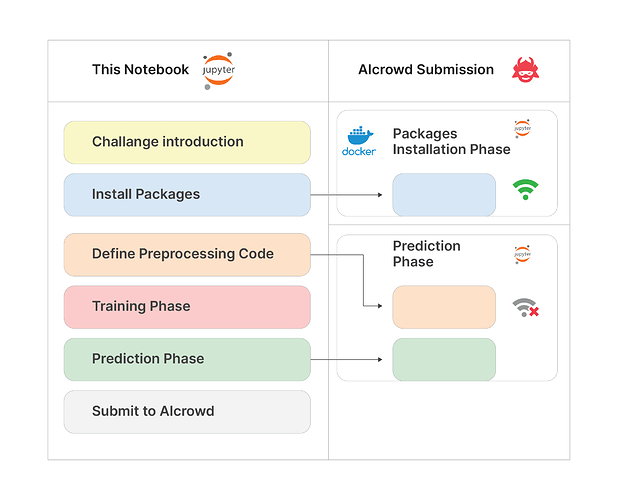

Also, after looking at the logs of your latest submission, it looks like the error occurred because a model could not be downloaded, as there was no internet connection.

- Make sure that you download any necessary model files in the Install packages section, because Install packages is the only markdown section where you will get the internet connection to download any files.
- And if you did finetune your model, make sure to save your model in the assets folder in the Training phase, so that you can read your model back in the Prediction phase from the same assets folder path.
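A minimal sketch of the save-then-load pattern described above, assuming the model can be pickled. The file name `model.pkl` and the dictionary used as a model are hypothetical; only the `assets` folder name comes from the instructions above, and its exact location in your notebook may differ.

```python
import os
import pickle

ASSETS_DIR = "assets"                               # folder named in the instructions above
MODEL_PATH = os.path.join(ASSETS_DIR, "model.pkl")  # hypothetical file name

def save_model(model, path=MODEL_PATH):
    """Training phase: write the model into the assets folder."""
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "wb") as f:
        pickle.dump(model, f)

def load_model(path=MODEL_PATH):
    """Prediction phase: read the model back from the same path, no download needed."""
    with open(path, "rb") as f:
        return pickle.load(f)

# Stand-in for a real fine-tuned model (an assumption for illustration).
model = {"vocab": {"hello": 0, "world": 1}}
save_model(model)
restored = load_model()
```

Libraries with their own save and load helpers can be used the same way, as long as both phases point at the same assets path.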

I hope this helps. Let me know if you have any other issues/doubts.

Thank you.

I did what you suggested and the error changed… Now it seems the submission can't write data to disk…

Scrubbing API keys from the notebook...

cp: cannot create regular file '/data/test.zip': Read-only file system

[NbConvertApp] Converting notebook predict.ipynb to notebook

[NbConvertApp] Executing notebook with kernel: python

2021-06-21 01:33:09.576002: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory

2021-06-21 01:33:09.576037: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

[NbConvertApp] Writing 816144 bytes to predict.nbconvert.ipynb

Also, can you change the system to not count a submission when it fails?

Tks

I checked the logs and it looks like the model was raising an error on certain samples. The error was:

RuntimeError: The size of tensor a (552) must match the size of tensor b (512) at non-singleton dimension 1

You can try fixing the issue by adding a try/except block.
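Here is a rough sketch of what such a block could look like. It assumes the error comes from inputs longer than the model's 512-position limit, which the message suggests but the logs do not confirm. `embed` is a hypothetical stand-in for the real model call, and `EMBED_DIM` is made up for illustration.

```python
MAX_TOKENS = 512  # limit suggested by the error message (an assumption)
EMBED_DIM = 4     # hypothetical vector size, kept small for illustration

def embed(tokens):
    """Hypothetical stand-in for the real model: fails on over-long inputs."""
    if len(tokens) > MAX_TOKENS:
        raise RuntimeError(
            f"The size of tensor a ({len(tokens)}) must match "
            f"the size of tensor b ({MAX_TOKENS}) at non-singleton dimension 1"
        )
    return [float(len(tokens))] * EMBED_DIM

def safe_embed(tokens):
    """Try the full input, retry on a truncated one, then fall back to zeros."""
    try:
        return embed(tokens)
    except RuntimeError:
        try:
            return embed(tokens[:MAX_TOKENS])
        except RuntimeError:
            # Last resort so one bad row does not fail the whole submission.
            return [0.0] * EMBED_DIM

short_vec = safe_embed(["tok"] * 10)
long_vec = safe_embed(["tok"] * 552)  # same length as in the error above
```

Truncating before the fallback keeps some signal for long rows instead of replacing them with zeros outright.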

As for failed submissions not counting, we have added a limit of 10 failed submissions, meaning that you can have at most 10 failed submissions per day.