rank0: Traceback (most recent call last):

rank0: File "<private_file>", line 211, in

rank0: raise exc

rank0: File "<private_file>", line 196, in

rank0: File "<private_file>", line 189, in main

rank0: File "<private_file>", line 176, in serve

rank0: File "<private_file>", line 195, in run_agent

rank0: raw_response, status, message = self.process_request(

rank0: File "<private_file>", line 101, in process_request

rank0: "data": self.route_agent_request(

rank0: File "<private_file>", line 131, in route_agent_request

rank0: return self.execute(target_attribute, *args, **kwargs)

rank0: File "<private_file>", line 144, in execute

rank0: return method(*args, **kwargs)

rank0: File "<private_file>", line 124, in batch_generate_response

rank0: return run_with_timeout(

rank0: File "<private_file>", line 159, in run_with_timeout

rank0: return fn(*args, **kwargs)

rank0: File "<private_file>", line 329, in batch_generate_response

rank0: rag_inputs = self.prepare_rag_enhanced_inputs(

rank0: File "<private_file>", line 219, in prepare_rag_enhanced_inputs

rank0: results = self.search_pipeline(<sensitive_data>, k=<sensitive_data>)

rank0: File "<private_file>", line 1379, in __call__

rank0: return self.run_single(inputs, preprocess_params, forward_params, postprocess_params)

rank0: File "<private_file>", line 1386, in run_single

rank0: model_outputs = self.forward(model_inputs, **forward_params)

rank0: File "<private_file>", line 1286, in forward

rank0: model_outputs = self._forward(model_inputs, **forward_params)

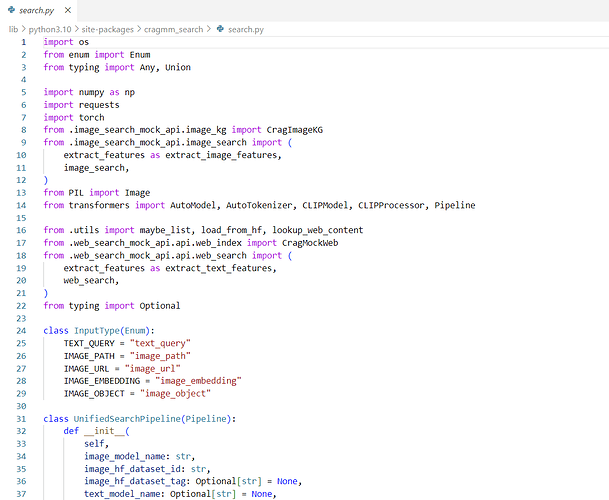

rank0: File "<private_file>", line 143, in _forward

rank0: raise ValueError(

rank0: ValueError: <redacted_value>