How was the dataset prepared?

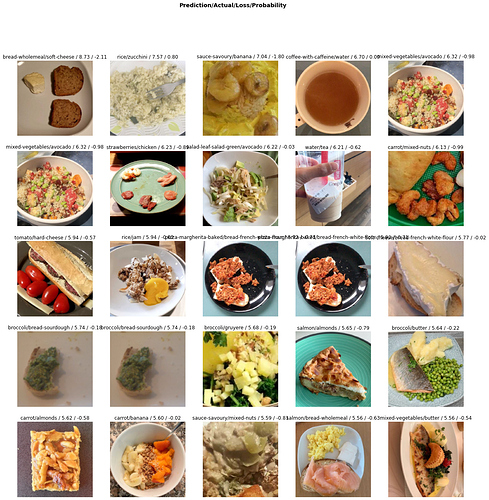

The dataset has some pretty ambiguous images; for example, see image 1 in the third row and image 4 in the last row.

This, combined with the fact that the train and test splits have different class balances, makes the problem ill-posed and makes F1 a very unstable metric…
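To make the class-balance point concrete, here is a minimal sketch in plain Python. The per-class prediction rates and the class counts are made-up numbers for illustration, not measurements from this dataset. It holds the classifier's behaviour fixed and only changes how many samples each class has:

```python
def macro_f1_from_confusion(conf):
    """Macro-averaged F1 from a square confusion matrix (rows = true, cols = predicted)."""
    n = len(conf)
    f1_scores = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                          # true class c, predicted as something else
        fp = sum(conf[r][c] for r in range(n)) - tp     # other classes predicted as c
        denom = 2 * tp + fp + fn
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / n


def expected_confusion(class_counts, rates):
    """Scale per-class prediction rates by the number of samples in each class."""
    return [[count * p for p in row] for count, row in zip(class_counts, rates)]


# Hypothetical classifier: rates[i][j] = P(predicted = j | true = i)
rates = [
    [0.9, 0.1],
    [0.4, 0.6],
]

# Same classifier, two different class balances (both counts are assumptions)
balanced = macro_f1_from_confusion(expected_confusion([500, 500], rates))
skewed = macro_f1_from_confusion(expected_confusion([900, 100], rates))

print(f"macro F1, balanced split: {balanced:.3f}")
print(f"macro F1, skewed split:   {skewed:.3f}")
```

The classifier itself does not change between the two calls, yet the macro F1 does, because precision for each class depends on how many samples of the other classes are present.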

Note for future iterations: it would be better to use top-k accuracy or a recall metric to give some leeway. The problem isn't that hard, but your problem statement made it so.
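For reference, top-k accuracy can be sketched as follows. This is a minimal plain-Python version; the function name `top_k_accuracy` and the toy scores are my own assumptions, not part of the challenge's evaluation code:

```python
def top_k_accuracy(scores, labels, k=3):
    """Fraction of samples whose true label is among the k highest-scoring classes.

    scores: list of per-class score lists, one per sample
    labels: list of true class indices, one per sample
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the best k
        top_k = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Toy scores for 3 samples over 3 classes (illustrative values only)
scores = [
    [0.5, 0.3, 0.2],
    [0.1, 0.5, 0.4],
    [0.6, 0.3, 0.1],
]
labels = [0, 2, 2]

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
```

With ambiguous images, a prediction that ranks the annotated class second still counts as a hit for k = 2, which is the leeway I mean.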

Hi @jakub_bartczuk,

Thanks for your observations and feedback. The images in this dataset were collected from real users who track their daily food habits by taking pictures of the food items they consume, and hence reflect the actual distribution of the data in the wild!

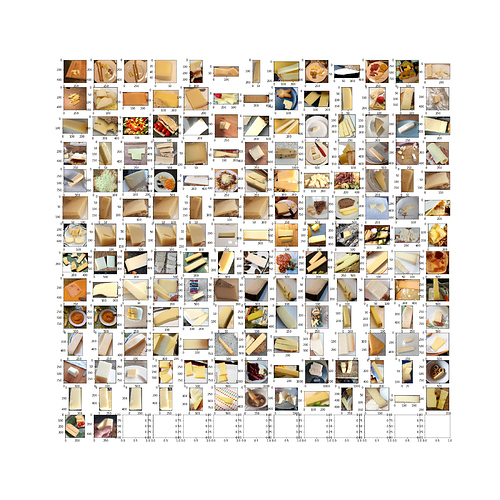

As you would expect, there are a few overlapping food items as well as some wrongly annotated data. Having gone through the data myself, I found that a significant portion of the images can be classified as just one thing. There definitely are some exceptions, as you have found. For example, here is a visualization of all the images in the hard cheese class.

Some of the images have multiple food items, and hence, as you pointed out, there is significant merit in treating this as a multi-label classification problem. As a matter of fact, we plan to release a much larger version of this dataset with individual segmentations of all the different food items in each of the images, modelled as an image segmentation task. That task would be a research challenge and not a part of the educational Blitz-related initiatives. But we assure you, the nuanced data distributions and class imbalances will continue to be well represented even in the larger dataset, because that is what the real-world distribution is.

At the same time, as mentioned in the forums a few times, we wanted to come up with a simplified classification problem from the original dataset as an easy-to-get-started problem for many of the community members.

We appreciate your input and the points you raised around the problem formulation, and are sure all of them will be well addressed when the larger dataset is released at the end of this month.

As this iteration of the AIcrowd Blitz ends, we hope to successfully aggregate all the activity that happened around these starter problems, and to have continued engagement from community members like yourself in the research challenges that we will organize as extensions to these problems.

Regards

Shraddhaa